
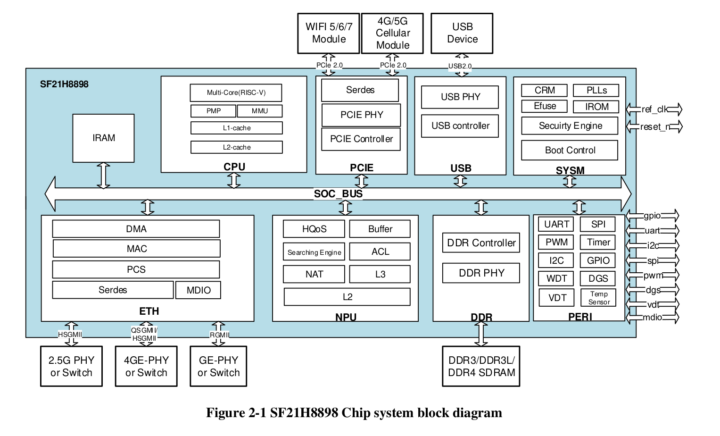

Siflower SF21H8898 is a quad-core 64-bit RISC-V SoC for industrial gateways, routers, and controllers

Siflower SF21H8898 SoC features a quad-core 64-bit RISC-V processor clocked at up to 1.25 GHz and a network processing unit (NPU) for handling traffic and is designed for industrial-grade gateways, controllers, and routers. The chip supports up to 2GB DDR3, DDR3L, or DDR4 memory, offers QSGMII (quad GbE), SGMII/HSGMII (GbE/2.5GbE), and RGMII (GbE) networking interfaces, and USB 2.0, PCIE 2.0, SPI, UART, I2C, and PWM interfaces. Siflower SF21H8898 specifications: CPU – Quad-core 64-bit RISC-V processor at up to 1.25 GHz Cache 32KB L1 I-Cache and 32KB L1 D-Cache per core Shared 256 KB L2 cache Memory – Up to 2GB 16-bit DDR3/3L up to 2133Mbps or DDR4 up to 2666Mbps Storage – NAND and NOR SPI flash support Networking 1x QSGMII interface (Serdes 5Gbps rate) for 4x external Gigabit Ethernet PHYs 1x SGMII/HSGMII interface (Serdes1.25/3.125Gbps rate) supporting Gigabit and 2.5Gbps modes 1x RGMII interface for Gigabit Ethernet 1x MDIO interface [...]

The post Siflower SF21H8898 is a quad-core 64-bit RISC-V SoC for industrial gateways, routers, and controllers appeared first on CNX Software - Embedded Systems News.

Simplify AI Development with the Model Context Protocol and Docker

This ongoing Docker Labs GenAI series explores the exciting space of AI developer tools. At Docker, we believe there is a vast scope to explore, openly and without the hype. We will share our explorations and collaborate with the developer community in real time. Although developers have adopted autocomplete tooling like GitHub Copilot and use chat, there is significant potential for AI tools to assist with more specific tasks and interfaces throughout the entire software lifecycle. Therefore, our exploration will be broad. We will be releasing software as open source so you can play, explore, and hack with us, too.

In December, we published The Model Context Protocol: Simplifying Building AI apps with Anthropic Claude Desktop and Docker. Along with the blog post, we also created Docker versions for each of the reference servers from Anthropic and published them to a new Docker Hub mcp namespace.

This provides lots of ways for you to experiment with new AI capabilities using nothing but Docker Desktop.

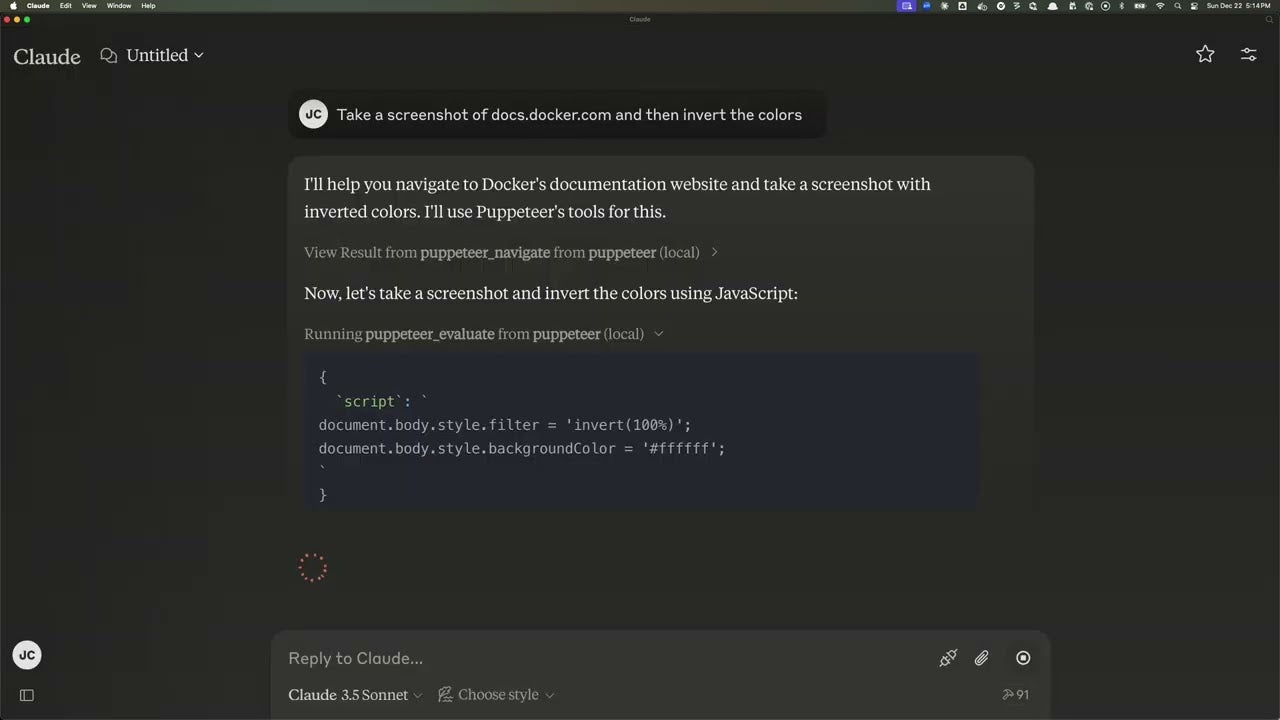

For example, to extend Claude Desktop to use Puppeteer, update your claude_desktop_config.json file with the following snippet:

    "puppeteer": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "--init", "-e", "DOCKER_CONTAINER=true", "mcp/puppeteer"]
    }

After restarting Claude Desktop, you can ask Claude to take a screenshot of any URL using a headless Chromium browser running in Docker.

You can do the same thing for a Model Context Protocol (MCP) server that you’ve written. You will then be able to distribute this server to your users without requiring them to have anything besides Docker Desktop.
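If you would rather script that config edit than paste it by hand, here is a minimal sketch. It assumes the server entries live under a top-level `mcpServers` key in claude_desktop_config.json; that key, the helper names, and the way the path is passed in are assumptions for illustration and are not taken from this post.

```python
import copy
import json
from pathlib import Path
from typing import List, Optional


def add_mcp_server(config: dict, name: str, image: str,
                   extra_args: Optional[List[str]] = None) -> dict:
    """Return a copy of `config` with a Docker-backed MCP server entry added."""
    updated = copy.deepcopy(config)
    # Assumption: Claude Desktop reads server entries from "mcpServers".
    servers = updated.setdefault("mcpServers", {})
    servers[name] = {
        "command": "docker",
        "args": ["run", "-i", "--rm", *(extra_args or []), image],
    }
    return updated


def update_config_file(path: Path, name: str, image: str,
                       extra_args: Optional[List[str]] = None) -> None:
    """Read the config file if it exists, add the entry, and write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    updated = add_mcp_server(config, name, image, extra_args)
    path.write_text(json.dumps(updated, indent=2))


if __name__ == "__main__":
    example = add_mcp_server(
        {}, "puppeteer", "mcp/puppeteer",
        ["--init", "-e", "DOCKER_CONTAINER=true"],
    )
    print(json.dumps(example, indent=2))
```

Existing entries are left untouched because the helper works on a deep copy and only sets the one key you name.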

How to create an MCP server Docker Image

An MCP server can be written in any language. However, most of the examples, including the set of reference servers from Anthropic, are written in either Python or TypeScript and use one of the official SDKs documented on the MCP site.

For typical uv-based Python projects (projects with a pyproject.toml and uv.lock in the root), or npm TypeScript projects, it’s simple to distribute your server as a Docker image.

- If you don’t already have Docker Desktop, sign up for a free Docker Personal subscription so that you can push your images to others.

- Run docker login from your terminal.

- Copy either this npm Dockerfile or this Python Dockerfile template into the root of your project. The Python Dockerfile will need at least one update to the last line.

- Run the build with the Docker CLI (instructions below).

The two Dockerfiles shown above are just templates. If your MCP server includes other runtime dependencies, you can update the Dockerfiles to include these additions. The runtime of your MCP server should be self-contained for easy distribution.

If you don’t have an MCP server ready to distribute, you can use a simple mcp-hello-world project to practice. It’s a small Python codebase containing a server with one tool call. Get started by forking the repo, cloning it to your machine, and then following the instructions below to build the MCP server image.

Building the image

Most sample MCP servers are still designed to run locally (on the same machine as the MCP client, communication over stdio). Over the next few months, you’ll begin to see more clients supporting remote MCP servers but for now, you need to plan for your server running on at least two different architectures (amd64 and arm64). This means that you should always distribute what we call multi-platform images when your target is local MCP servers. Fortunately, this is easy to do.
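One way to confirm that a published image really covers both architectures is to look at its image index. The sketch below is a minimal, unofficial helper. It assumes you have the JSON printed by `docker buildx imagetools inspect --raw <image>` and that it follows the OCI image index layout (a `manifests` list whose entries carry a `platform` object). The function name and the sample data are made up for illustration.

```python
REQUIRED = {("linux", "amd64"), ("linux", "arm64")}


def missing_platforms(index: dict, required=REQUIRED) -> set:
    """Return the (os, architecture) pairs from `required` that the index lacks."""
    present = set()
    for manifest in index.get("manifests", []):
        platform = manifest.get("platform", {})
        present.add((platform.get("os"), platform.get("architecture")))
    return set(required) - present


if __name__ == "__main__":
    # Hand-written, trimmed sample; a real index also carries digests and sizes.
    sample = {
        "schemaVersion": 2,
        "manifests": [
            {"platform": {"os": "linux", "architecture": "amd64"}},
            # Entries with an "unknown" platform are simply ignored by the check.
            {"platform": {"os": "unknown", "architecture": "unknown"}},
        ],
    }
    print(missing_platforms(sample))  # {('linux', 'arm64')}
```

An empty result means every required platform is present in the index.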

Create a multi-platform builder

The first step is to create a local builder that will be able to build both platforms. Don’t worry; this builder will use emulation to build the platforms that you don’t have. See the multi-platform documentation for more details.

    docker buildx create \
      --name mcp-builder \
      --driver docker-container \
      --bootstrap

Build and push the image

In the command below, replace <your-docker-account> and mcp-server-name with valid values, then run the build and push the image to your account.

    docker buildx build \
      --builder=mcp-builder \
      --platform linux/amd64,linux/arm64 \
      -t <your-docker-account>/mcp-server-name \
      --push .

Extending Claude Desktop

Once the image is pushed, your users will be able to attach your MCP server to Claude Desktop by adding an entry to claude_desktop_config.json that looks something like:

    "your-server-name": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "--pull=always",
               "your-account/your-server-name"]
    }

This is a minimal set of arguments. You may want to pass in additional command-line arguments, environment variables, or volume mounts.
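As a rough sketch of how those extras fit into the args list, the helper below builds it from a few inputs. It relies only on the standard `docker run` flags `-e KEY=VALUE` and `-v host:container`. The helper name, the `API_TOKEN` variable, the paths, and the `--verbose` server flag are hypothetical placeholders, not something your server necessarily accepts.

```python
from typing import Dict, List, Optional


def docker_mcp_args(image: str,
                    env: Optional[Dict[str, str]] = None,
                    volumes: Optional[Dict[str, str]] = None,
                    server_args: Optional[List[str]] = None) -> List[str]:
    """Build the "args" list for a Docker-backed MCP server entry."""
    args = ["run", "-i", "--rm", "--pull=always"]
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]                  # environment variable
    for host_path, container_path in (volumes or {}).items():
        args += ["-v", f"{host_path}:{container_path}"]   # volume mount
    args.append(image)
    # Anything after the image name is handed to the server's entrypoint.
    args += list(server_args or [])
    return args


if __name__ == "__main__":
    print(docker_mcp_args(
        "your-account/your-server-name",
        env={"API_TOKEN": "example"},           # hypothetical variable
        volumes={"/home/me/data": "/data"},     # hypothetical paths
        server_args=["--verbose"],              # hypothetical server flag
    ))
```

Docker options go before the image name and server arguments after it; that ordering is why the image is appended in the middle of the function rather than at the end.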

Next steps

MCP gives us a standard way to extend AI applications. Make sure your extension is easy to distribute by packaging it as a Docker image. Check out the Docker Hub mcp namespace for examples that you can try out in Claude Desktop today.

As always, feel free to follow along in our public repo.

For more on what we’re doing at Docker, subscribe to our newsletter.

Learn more

- Subscribe to the Docker Newsletter.

- Learn about accelerating AI development with the Docker AI Catalog.

- Read the Docker Labs GenAI series.

- Get the latest release of Docker Desktop.

- Have questions? The Docker community is here to help.

- New to Docker? Get started.

Introducing Red Hat OpenShift Virtualization Engine: OpenShift for your virtual machines

Linux 6.14 To Bring An Important Improvement For AMD Preferred Core

Arm: The Partner of Choice for Academic Engagements

At Arm, we recognize the importance of the latest research findings from academia and how they can help to shape future technology roadmaps. As a global semiconductor ambassador, we play a key role in academic engagements and refining the commercial relevance of their respective research.

Our approach is multifaceted, involving broad engagements with entire departments and focused collaborations with individual researchers. This ensures that we are not only advancing the field of computing but also fostering the talent that will lead the industry in the future.

Pioneering research and strategic investments in academia

A prime example of our broad engagement is our long-standing relationship with the University of Cambridge’s Department of Computer Science and Technology. We’ve announced critical investment into the Department’s new CASCADE (Computer Architecture and Semiconductor Design) Centre. To realize the potential of AI through next-gen processor designs, this initiative will fund 15 PhD students over the next five years who will undertake groundbreaking work in intent-based programming.

Meanwhile, our work with the Morello program continues to push the boundaries of secure computing. This is a research initiative aimed at creating a more secure hardware architecture for future Arm devices. The program is based on the CHERI (Capability Hardware Enhanced RISC Instructions) model, which has been developed in collaboration with the University of Cambridge since 2015. By implementing CHERI architectural extensions, Morello aims to mitigate memory safety vulnerabilities and enhance the overall security of devices.

In the United States, our membership in the SRC JUMP2.0 program, a public-private partnership alongside DARPA (Defense Advanced Research Projects Agency) and other noted semiconductor companies, enables us to support pathfinding research across new and emerging technical challenges. One notable investment is the PRISM Centre (Processing with Intelligent Storage and Memory), which is led by the University of California San Diego, where we are deeply engaged in advancing the computing field.

Fuelling innovation through strategic PhD investments

Arm’s broad academic engagements are complemented by specific investments in emerging research areas, where the commercial impact is still being defined. PhD studentships are ideal for these exploratory studies, providing the necessary timeframe to progress ideas and early-stage concepts toward potential commercial viability. Examples include:

- A PhD at the University of Limerick developing next-generation automotive vision systems, supported by a cross-Arm collaboration.

- Ongoing funding to the Barcelona Supercomputing Centre for PhD students working on high-performance computing (HPC) and genomics workloads.

- A PhD studentship at the University of Utah exploring security and verification topics.

Shaping the future through research and technology

In areas where challenges are just being identified, Arm convenes workshops with academic thought leaders to scope future use cases and the fundamental experimental work needed to advance the field. Moreover, our white papers on Ambient Intelligence and the Metaverse are helping the academic community develop future research programs, acting as a springboard for further innovation.

Given our position in the ecosystem, we are often invited to provide thought leadership at academic conferences. Highlights from this year include:

- A keynote by Rob Dimond, a System Architect and Fellow at Arm, at the DATE conference in Valencia, a major event for our industry and academia.

- Simon Craske, a Lead Architect and Fellow at Arm, participated as a speaker at the inaugural IET REACH Computer Architecture Conference.

Reinforcing academic engagements by investing in future talent

Investing in PhDs is not just about research; it’s about nurturing the future talent pipeline for our industry. We also engage with governments and funding agencies to ensure that university research funding is targeted appropriately.

For instance, Andrea Kells sits on the EPSRC (UK) Science Advisory Team for Information and Communication Technologies and the Semiconductor Research Corporation (US) Scientific Advisory Board, which both link with the Arm Government Affairs team on research investments.

Check out Andrea’s webinar on advances and challenges in semiconductor design

Expanding global collaborations to drive technological marvels

Arm’s commitment to academic engagements spans the globe, reflecting our dedication to fostering innovation worldwide. In Asia for instance, we have initiated collaborations with leading institutions to explore new frontiers in semiconductor technology. Our partnership with the National University of Singapore focuses on developing power-efficient computing solutions, which are crucial for the next generation of mobile and IoT devices.

In Europe, beyond our engagements in the U.K. and Spain, we are also working with the Technical University of Munich on advanced research in quantum computing. This collaboration aims to address some of the most challenging problems in computing today, paving the way for breakthroughs that could revolutionize the industry.

Bridging academics and the industry for a brighter future

We are committed to innovation and to supporting the next generation of technology leaders. Our investments in academic engagements not only advance the field of semiconductor technology but also ensure that we remain at the forefront of technological progress.

As we continue to nurture upcoming talent, support groundbreaking research, and foster global collaborations, we are shaping the future of computing.

For more information

For more details about Arm’s academic engagements and partnerships, contact Andrea Kells, Arm’s Research Ecosystem Director, at Andrea.Kells@arm.com

The post Arm: The Partner of Choice for Academic Engagements appeared first on Arm Newsroom.

Xen Hypervisor Support Being Worked On For RISC-V

libvirt 11.0 Released For Open-Source Virtualization API

PiFi review: mobile wireless access solution

Jetway MTX-MTH1 thin Mini-ITX SBC features Intel Core Ultra 5/7 SoC, three 2.5GbE, four HDMI 2.1, PCIe Gen5 x8 slot

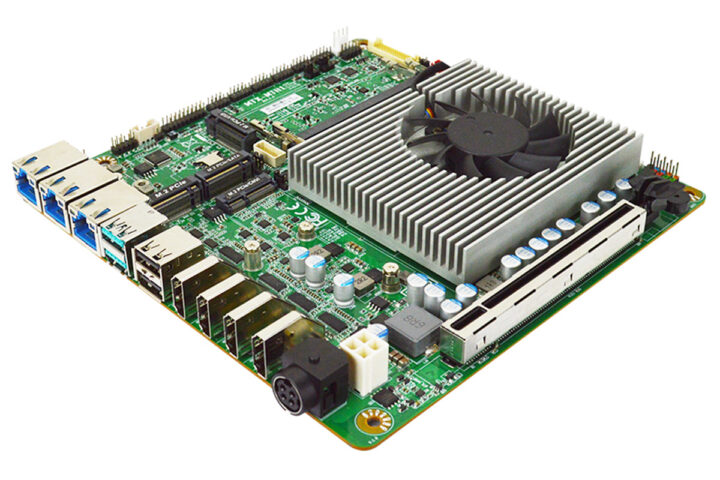

Jetway MTX-MTH1 is a thin industrial Mini-ITX SBC built around Intel Core Ultra 5/7 Meteor Lake processors with Intel Arc Graphics and support for up to 96GB of DDR5 memory. The most interesting features of this compact board are the three 2.5GbE jacks and four HDMI 2.1 video outputs for 4K and 8K displays. Additionally, it features multiple USB ports, a PCIe Gen 5 x8 slot, M.2 slots for storage and 4G LTE/5G, serial ports, and GPIO interfaces. With industrial environmental tolerance (-20°C to 60°C) this thin Mini-ITX motherboard is ideal for industrial automation, AI processing, edge computing, and embedded systems. Jetway MTX-MTH1 specifications: SoC (one or the other) Intel Core Ultra 7 Processor 155H (Meteor Lake, TDP 28W) with Intel Arc Graphics Intel Core Ultra 5 Processor 125H (Meteor Lake, TDP 28W) with Intel Arc Graphics System Memory – 2x DDR5 5600MHz SO-DIMM, up to 96GB Storage SATA III [...]

The post Jetway MTX-MTH1 thin Mini-ITX SBC features Intel Core Ultra 5/7 SoC, three 2.5GbE, four HDMI 2.1, PCIe Gen5 x8 slot appeared first on CNX Software - Embedded Systems News.

Re: OPNsense 25.1-BETA released

Python Launchpad 2025: Your Blueprint to Mastery and Beyond

Introduction

Python is everywhere, from data science to web development. It’s beginner-friendly and versatile, making it one of the most sought-after skills for 2025 and beyond. This article outlines a practical, step-by-step roadmap to master Python and grow your career.

Learning Time Frame

The time it takes to learn Python depends on your goals and prior experience. Here’s a rough timeline:

- 1-3 Months: Grasp the basics, like syntax, loops, and functions. Start small projects.

- 4-12 Months: Move to intermediate topics like object-oriented programming and essential libraries. Build practical projects.

- Beyond 1 Year: Specialize in areas like web development, data science, or machine learning.

Consistency matters more than speed. With regular practice, you can achieve meaningful progress in a few months.

Steps for Learning Python Successfully

- Understand Your Motivation

- Define your goals. Whether for a career change, personal projects, or academic growth, knowing your “why” keeps you focused.

- Start with the Basics

- Learn Python syntax, data types, loops, and conditional statements. This foundation is key for tackling more complex topics.

- Master Intermediate Concepts

- Explore topics like object-oriented programming, file handling, I/O operations and libraries such as pandas and NumPy.

- Learn by Doing

- Apply your skills through coding exercises and small projects. Real practice strengthens understanding.

- Build a Portfolio

- Showcase your skills with projects like web apps or a basic data analysis dashboard. A portfolio boosts job prospects.

- Challenge Yourself Regularly

- Stay updated with Python advancements and take on progressively harder tasks to improve continuously.

Python Learning Plan

Month 1-3

- Focus on basics: syntax, data types, loops, and functions.

- Start using libraries like pandas and NumPy for data manipulation.
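A first exercise for this stage might look like the sketch below. It is only an illustration, not part of any official curriculum, and it touches each of the basics named above: a function, a loop, a conditional, and two built-in data types (strings and dictionaries). The function name and the sample sentence are made up.

```python
def word_counts(text: str) -> dict:
    """Count how often each word appears, ignoring case and basic punctuation."""
    counts = {}
    for word in text.lower().split():
        word = word.strip(".,!?")
        if word:  # skip tokens that were only punctuation
            counts[word] = counts.get(word, 0) + 1
    return counts


if __name__ == "__main__":
    sample = "Practice makes progress. Practice daily!"
    for word, count in sorted(word_counts(sample).items()):
        print(f"{word}: {count}")
```

Once this feels comfortable, try rewriting it with `collections.Counter` from the standard library and compare the two versions.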

Month 4-6

- Dive into intermediate topics: object-oriented programming, file handling, and data visualization with matplotlib.

- Experiment with APIs using FastAPI and Postman.

Month 7 and Beyond

- Specialize based on your goals:

- Web Development: Learn Flask or Django for backend

- Data Science: Explore TensorFlow, Scikit-learn, and Kaggle

- Automation: Work with tools like Selenium for Web Scraping

This timeline is flexible—adapt it to your pace and priorities.

Top Tips for Effective Learning

- Choose Your Focus

- Decide what interests you most—web development, data science, or automation. A clear focus helps you navigate the vast world of Python.

- Practice Regularly

- Dedicate time daily or weekly to coding. Even short, consistent practice sessions on platforms like HackerRank will build your skills over time.

- Work on Real Projects

- Apply your learning to practical problems. Train an ML model, automate a task, or analyze a dataset. Projects reinforce knowledge and make learning fun.

- Join a Community

- Engage with Python communities online or locally. Networking with others can help you learn faster and stay motivated.

- Take Your Time

- Don’t rush through concepts. Understanding the basics thoroughly is essential before moving to advanced topics.

- Revisit and Improve

- Go back to your old projects and refine them. Optimization teaches you new skills and helps you see your progress.

Best Ways to Learn Python in 2025

1. Online Courses

Platforms like YouTube, Coursera, and Udemy offer structured courses for all levels, from beginners to advanced learners.

2. Tutorials

Hands-on tutorials from sites like Real Python and Python.org are great for practical, incremental learning.

3. Cheat Sheets

Keep cheat sheets for quick references to libraries like pandas, NumPy, and Matplotlib. These are invaluable when coding.

4. Projects

Start with simple projects like to-do list apps. Gradually take on more complex projects such as web apps or machine learning models.

5. Books

For beginners, Automate the Boring Stuff with Python by Al Sweigart simplifies learning. Advanced learners can explore Fluent Python by Luciano Ramalho.

To understand how Python is shaping careers in tech, read The Rise of Python and Its Impact on Careers in 2025.

Conclusion

Python is more than just a programming language; it’s a gateway to countless opportunities in tech. With a solid plan, consistent practice, and real-world projects, anyone can master it. Whether you’re a beginner or looking to advance your skills, Python offers something for everyone.

If you’re ready to fast-track your learning, consider enrolling in OpenCV University’s 3-Hour Python Bootcamp, designed for beginners to get started quickly and efficiently.

Start your Python journey today—your future self will thank you!

The post Python Launchpad 2025: Your Blueprint to Mastery and Beyond appeared first on OpenCV.

LACT Linux GPU Control Panel Adds Support For Intel Graphics

Intel "Performance Tips" Published For Optimal Linux Graphics

New Product Post – Vision AI Sensor, Supporting LoRaWAN and RS485

Halloo Everyone, welcome to this blog!

The main topic is NEW PRODUCTS! And the keywords are LoRaWAN and Vision AI. You would wonder, “Your device has a camera and it is LoRaWAN?” Yes indeed! I know, I know! You would tell me “You can’t send images or videos via LoRaWAN because the ‘message’ is too large”, or someone else would say “Technically you can, but you need to ‘slice’ the large images or videos to send them ‘bit by bit’ and ‘piece them together’ afterward. Then why bother?”

What if I tell you, you don’t have to send the images or videos? Think about it, the goal is to get the results captured by the camera, not tons of irrelevant footage. In this case, as long as you can get the results, there is no need to send large images or videos.
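To put rough numbers on that, here is a small back-of-the-envelope sketch. Everything in it is an assumption for illustration: the 4-byte result layout is invented and is not the actual SenseCAP uplink format, the 51-byte payload limit stands in for a conservative LoRaWAN setting (the real limit depends on region and data rate), and 50,000 bytes is a guess at one compressed frame.

```python
import math
import struct

# Assumed layout: model id (1 byte), object count (2 bytes), confidence in % (1 byte).
RESULT_FORMAT = ">BHB"


def encode_result(model_id: int, count: int, confidence_pct: int) -> bytes:
    """Pack one inference result into a compact binary payload."""
    return struct.pack(RESULT_FORMAT, model_id, count, confidence_pct)


def uplinks_needed(payload_size: int, max_payload: int) -> int:
    """Number of uplinks needed to carry `payload_size` bytes."""
    return math.ceil(payload_size / max_payload)


if __name__ == "__main__":
    max_payload = 51      # assumed per-uplink limit in bytes
    image_size = 50_000   # assumed size of one compressed frame in bytes

    result = encode_result(model_id=1, count=12, confidence_pct=87)
    print(len(result), uplinks_needed(len(result), max_payload))  # 4 1
    print(uplinks_needed(image_size, max_payload))                # 981
```

Under these assumptions the result fits in a single uplink, while the image would need close to a thousand, before even counting duty-cycle limits or retransmissions.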

Without further ado, here comes the intro of our main cast of this post – SenseCAP A1102, an IP66-rated LoRaWAN® Vision AI Sensor, ideal for low-power, long-range TinyML Edge AI applications. It comes with 3 pre-deployed models (human detection, people counting, and meter reading) by default. Meanwhile, with SenseCraft AI platform [Note 1], you can use the pre-trained models or train your customized models conveniently within a few clicks. Of course, SenseCAP A1102 also supports TensorFlow Lite and PyTorch.

This device consists of two main parts: the AI Camera and the LoRaWAN Data Logger. While different technologies are integrated in this nifty device, I would like to highlight 4 key aspects:

- Advanced AI Processor

As a vision AI Sensor, SenseCAP A1102 proudly adopted the advanced Himax WiseEye2 HX6538 processor featuring a dual-core Arm Cortex-M55 and integrated Arm Ethos-U55. (The same AI processor as in Grove Vision AI v2 Kit.) This ensures a high performance in vision processing.

Meanwhile, please keep in mind that lighting and distance will affect the performance, which is common for applications that involve cameras. According to our testing, SenseCAP A1102 can achieve 70% confidence for results within 1 ~ 5 meters in normal lighting.

- Low-Power Consumption, Long-Range Communication

SenseCAP A1102 is built with Wio E5 Module featuring STM32WLE5JC, ARM Cortex M4 MCU, and Semtech SX126X. This ensures low-power consumption and long-range communication, as in SenseCAP S210X LoRaWAN Environmental sensors. Supporting a wide range of 863MHz – 928MHz frequency, you can order the same device for different stages of your projects in multiple continents, saving time, manpower, and costs in testing, inventory management, and shipment, etc.

SenseCAP A1102 opens up new possibilities to perceive the world. With the same hardware and different AI models, you get different sensors for detecting “objects” (fruits, poses, and animals) or reading meters (in scale or digits), and many more. With the IP66 rating (waterproof and dustproof), it can endure long-term deployment in severe outdoor environments.

We understand interoperability is important. As a standard LoRaWAN device, SenseCAP A1102 can be used with any standard LoRaWAN gateway. Configuration and provisioning are easier when you pair it with the SenseCAP Outdoor Gateway or the SenseCAP M2 Indoor Gateway.

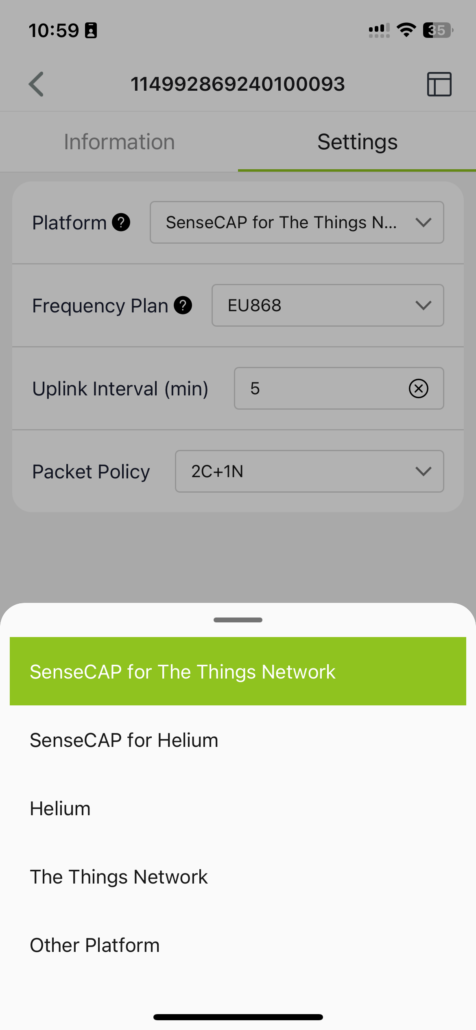

- Easy Set-up and Configure with SenseCraft App

We provide SenseCraft App and SenseCAP Web Portal for you to set up, configure, and manage the devices & data more easily.

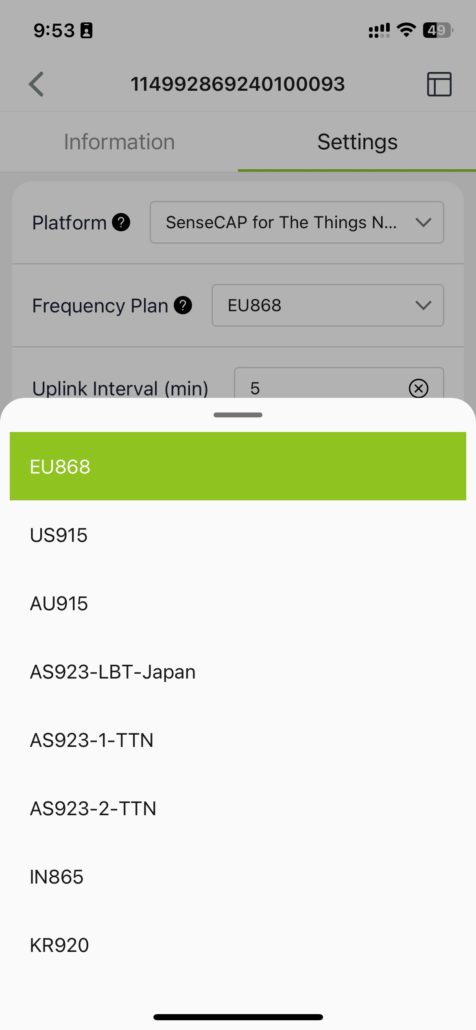

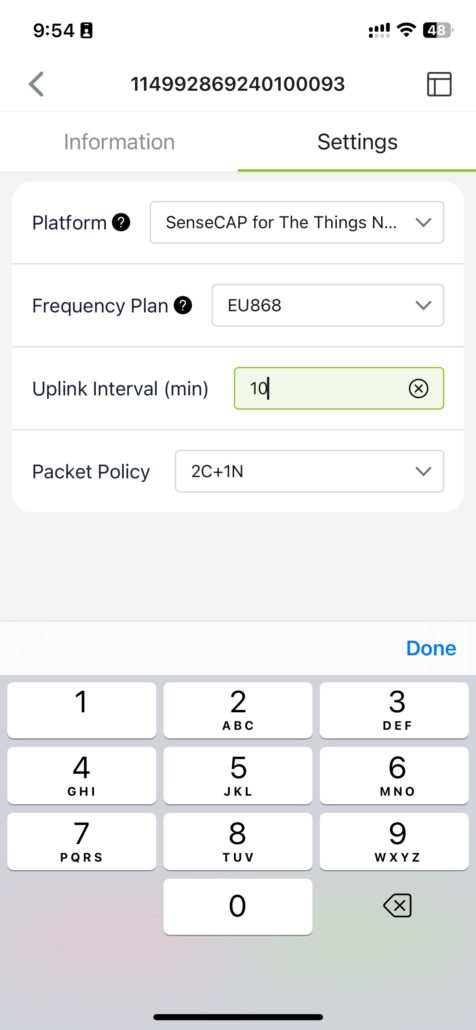

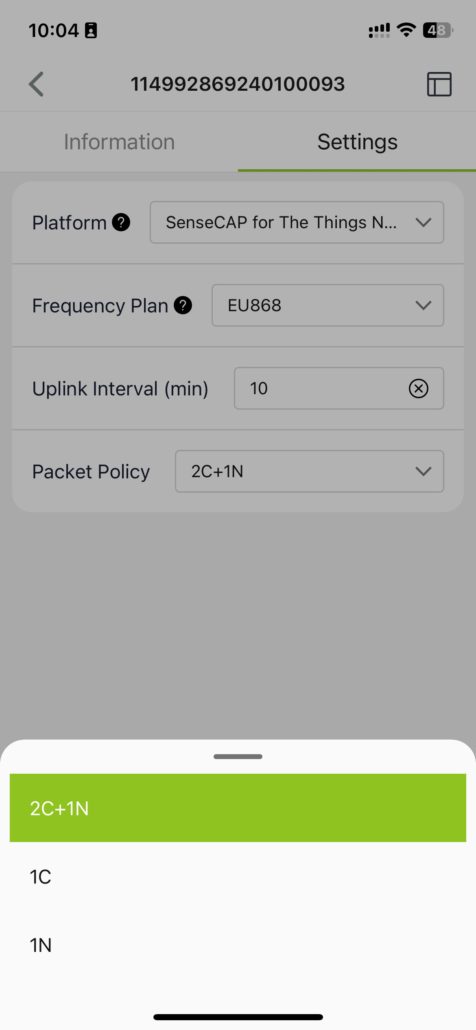

In the SenseCraft App, you can change settings with a few clicks: choose the platform, change the frequency for your region, and set the data upload interval (5 ~ 1440 min), the packet policy (2C+1N, 1C, 1N), and other options.

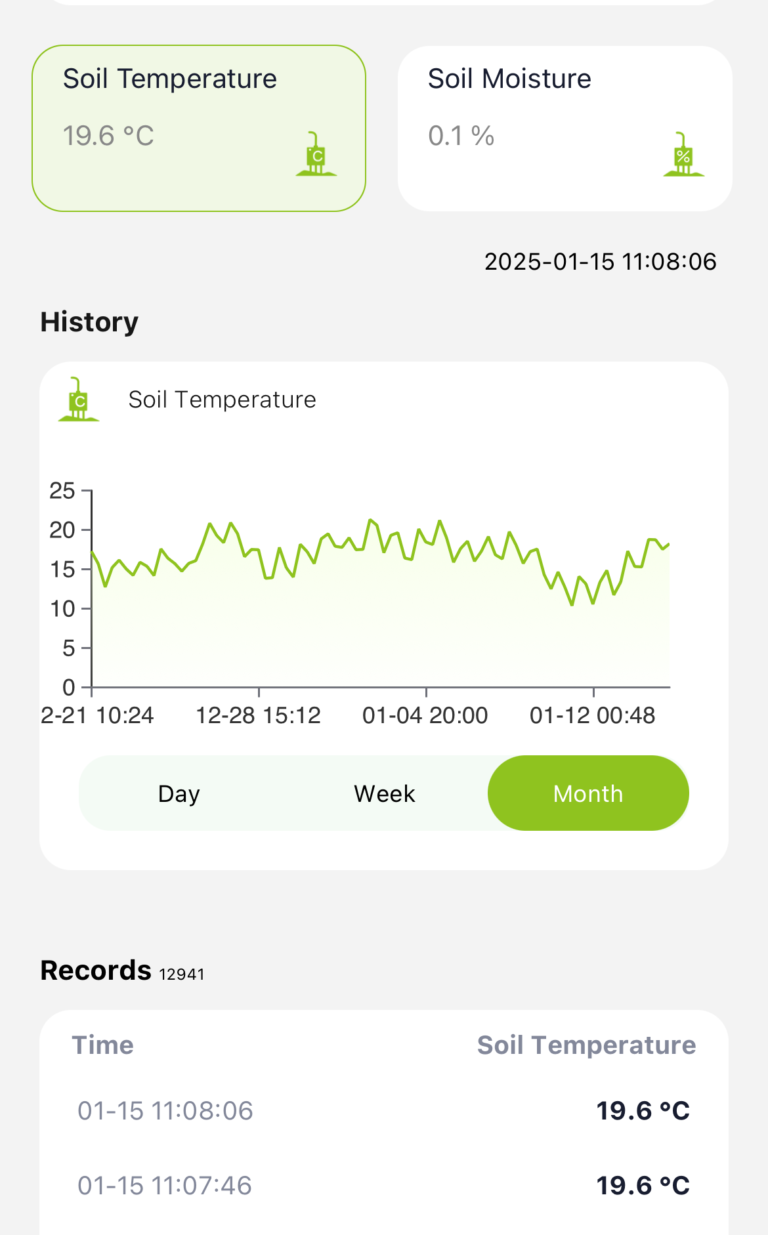

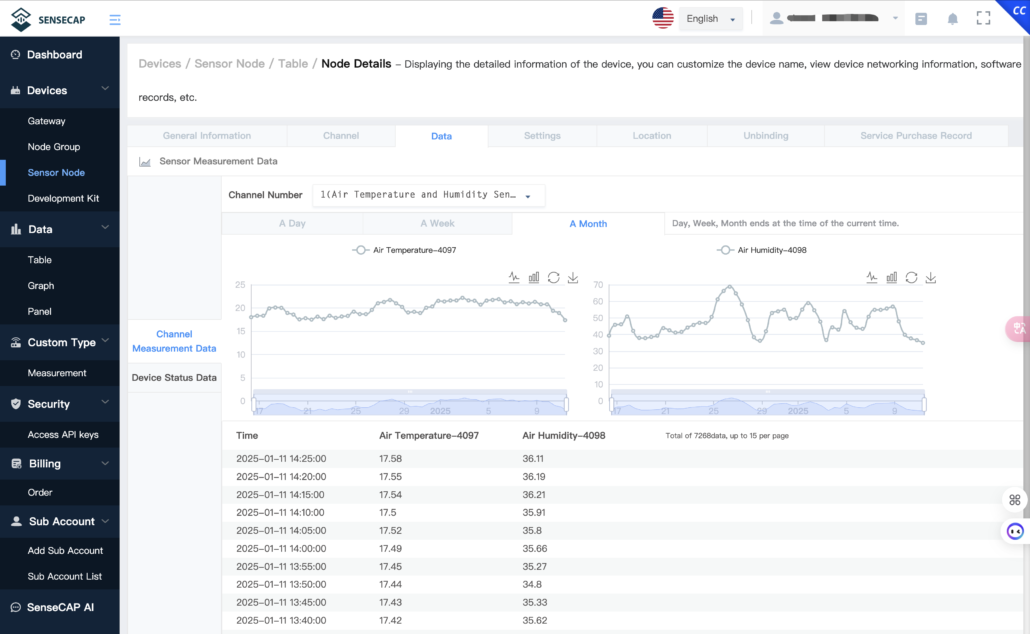

You can see the live and historical data of your devices on both SenseCraft App and SenseCAP Web Portal easily.

When using SenseCAP sensors and SenseCAP gateways, you can also choose the SenseCAP cloud platform, which is free for 6 months for each device and then costs USD 0.99 per device per month, or you can use your own platform or other third-party platforms. We offer an API supporting MQTT and HTTP.

- Wi-Fi Connectivity for Transmitting Key Frames

Inside the AI Camera part of this device sits a tiny-yet-powerful XIAO ESP32C3, which is powered by new RISC-V architecture. This adds Wi-Fi connectivity to SenseCAP A1102. In the applications, you can get the reference results via LoRaWAN and at the same time get the key frames via Wi-Fi to validate or further analyze.

We always demonstrate how much we love LoRaWAN for its ultra-low power consumption and ultra-long-range communication by continuously adding more products to our LoRaWAN family, but we also understand that some people might prefer other communication protocols. Rest assured, we have options for you. As mentioned above, we value interoperability a lot. Here comes the intro of the other main cast member of this post: RS485 Vision AI Camera!

RS485 Vision AI Camera is a robust vision AI sensor that supports MODBUS-RS485 [Note 2] protocol and Wi-Fi Connectivity. Simply put, it is the camera part of the SenseCAP A1102, which adopts the Himax AI Processor for AI performance. Its IP66 rating makes it suitable for both indoor and outdoor applications.

You can use RS485 Vision AI Camera with SenseCAP Sensor Hub 4G Data Logger to transmit the reference results via 4G. If your existing devices or systems support MODBUS-RS485, you can connect it with this RS485 Vision AI Camera for your applications.
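For readers who have not worked with MODBUS over RS485 before, the sketch below shows what a generic Modbus RTU "read holding registers" request looks like on the wire: device address, function code 0x03, start register, register count, and a CRC-16 sent low byte first. The device address and register numbers in the example are placeholders; the camera's actual register map is not described in this post, so check the product documentation for the real values.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16 as used by Modbus RTU (initial value 0xFFFF, reflected polynomial 0xA001)."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def read_holding_registers_request(device_address: int,
                                   start_register: int,
                                   quantity: int) -> bytes:
    """Build a Modbus RTU request frame for function code 0x03."""
    body = struct.pack(">BBHH", device_address, 0x03, start_register, quantity)
    # The CRC is appended low byte first, hence the little-endian pack.
    return body + struct.pack("<H", crc16_modbus(body))


if __name__ == "__main__":
    # Placeholder values: device address 1, one register starting at 0.
    frame = read_holding_registers_request(1, 0, 1)
    print(frame.hex(" "))  # 01 03 00 00 00 01 84 0a
```

In a real setup a serial library would write this frame to the RS485 adapter and read the reply; that part is left out here to keep the sketch dependency-free.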

In this post, we introduced SenseCAP A1102 and RS485 Vision AI Camera. I hope you like them and will get your hands on these devices for your projects soon! I already envision these devices used in different applications, from smart home, office, and building management to smart agriculture, biodiversity conservation, and many others. And we look forward to seeing your applications!

If you’ve been using Seeed products or following our updates, you might have noticed that the expertise of Seeed products and services is in smart sensing and edge computing. We’ve developed a rich collection of products in (1) sensor networks that collect different real-world data and transmit it via different communication protocols, and (2) edge computing that brings computing power and AI capabilities to the edge, so I think we can say that Seeed is strong in smart sensing, communication, and edge computing.

There are many more new products on the Seeed roadmap for us to get a deeper perception of the world with AI-powered insight and actions. Stay tuned!

Last but not least, we understand that you might have requirements for product features, functionalities, or form factors for your specific applications, so we offer a wide range of customization services based on the existing standard products. Please do not hesitate to reach out to us to share your experience, your thoughts about new products, wishes for new features, or ideas for cooperation possibilities! Reach us at iot[at]seeed[dot]cc! Thank you!

[Note 1: If you do not know it yet, SenseCraft AI is a web-based platform for AI applications. No-code. Beginner-friendly. I joined the livestream on YouTube with dearest Meilily to introduce this platform last week. If you are interested, check the recording here.]

[Note 2: MODBUS RS485 is a widely-used protocol for many industrial applications. You can learn more here about MODBUS and RS485, and you can explore the full range of all RS485 products here.]

The post New Product Post – Vision AI Sensor, Supporting LoRaWAN and RS485 appeared first on Latest Open Tech From Seeed.

Rsync 3.4 Released Due To Multiple, Significant Security Vulnerabilities

SONOFF MINI-D Review – A Matter-enabled dry contact WiFi switch tested with eWeLink, Home Assistant, and Apple Home

SONOFF sent us a sample of the MINI-D Wi-Fi smart switch with a dry contact design for review. If you’re familiar with the larger SONOFF 4CH Pro model, which features four channels, the MINI-D operates similarly but is smaller in size and comes with the latest software features. The principle of a dry contact is that the relay contacts are not directly connected to the device’s power supply circuit. Instead, the contacts are isolated and require an external power source to supply power to the load. This makes the SONOFF MINI-D flexible to use in various scenarios, such as controlling garage doors, thermostats, or high-current electrical devices through a contactor, like water pumps. It can also manage low-power DC devices such as solenoid valves or small electric motors (<8W). Because the power supplied to the MINI-D and the power passed through its relay can come from different sources, it offers [...]

The post SONOFF MINI-D Review – A Matter-enabled dry contact WiFi switch tested with eWeLink, Home Assistant, and Apple Home appeared first on CNX Software - Embedded Systems News.

Intel Arc B580 Linux Graphics Driver Performance One Month After Launch

GNOME 48 Desktop Introducing An Official Audio Player: Decibels

ESP32-based Waveshare DDSM Driver HAT (B) for Raspberry Pi supports DDSM400 hub motors

Waveshare has recently launched DDSM Driver HAT (B), a compact Raspberry Pi DDSM (Direct Drive Servo Motor) motor driver designed specifically to drive the DDSM400 hub motors. This board is built around an ESP32 MCU and supports wired (USB and UART) and wireless (2.4GHz WiFi) communication. Additionally, the board features a physical toggle switch that selects between the ESP32 control and USB control modes. In ESP32 control mode, you can control the device through a built-in web application. In USB control mode, the motor driver can be controlled via USB from a host computer sending JSON commands. An XT60 connector is used to power the board, and programming is done through a USB-C port that connects to the ESP32. The board is suitable for robotics projects, especially for mobile robots in 6×6 or 4×4 configurations. Waveshare DDSM Driver HAT (B) specifications: Wireless MCU – Espressif Systems ESP32-WROOM-32E ESP32 [...]

The post ESP32-based Waveshare DDSM Driver HAT (B) for Raspberry Pi supports DDSM400 hub motors appeared first on CNX Software - Embedded Systems News.