
AMD Linux Graphics Driver Now Allows Display Support With Modern GPUs On LoongArch

Docker Desktop 4.36: New Enterprise Administration Features, WSL 2, and ECI Enhancements

Key features of the Docker Desktop 4.36 release include:

- New administration features for Docker Business subscribers:

  - Enforce sign-in with macOS configuration profiles (Early Access Program)

  - Enforce sign-in for more than one organization at a time (Early Access Program)

  - Deploy Docker Desktop for Mac in bulk with the PKG installer (Early Access Program)

  - Use Desktop Settings Management to manage and enforce defaults via Admin Console (Early Access Program)

- Enhanced Container Isolation (ECI) improvements

- Additional improvements, including the unified WSL 2 mono distribution

Docker Desktop 4.36 introduces powerful updates to simplify enterprise administration and enhance security. This release features streamlined macOS sign-in enforcement via configuration profiles, enabling IT administrators to deploy tamper-proof policies at scale, alongside a new PKG installer for efficient, consistent deployments. Enhancements like the unified WSL 2 mono distribution improve startup speeds and workflows, while updates to Enhanced Container Isolation (ECI) and Desktop Settings Management allow for greater flexibility and centralized policy enforcement. These innovations empower organizations to maintain compliance, boost productivity, and streamline Docker Desktop management across diverse enterprise environments.

Sign-in enforcement: A streamlined alternative for organizations on macOS

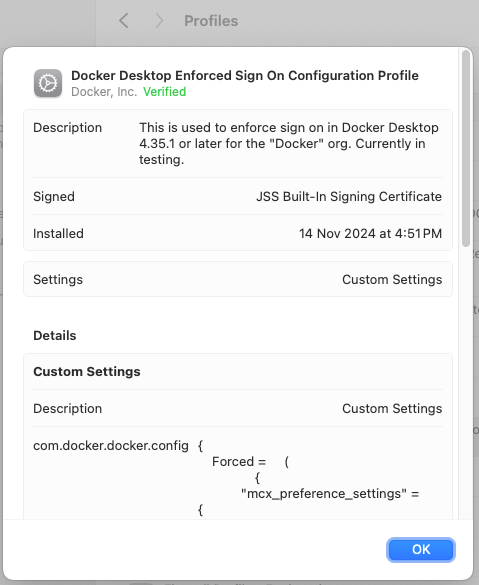

Recognizing the need for streamlined and secure ways to enforce sign-in protocols, Docker is introducing a new sign-in enforcement mechanism using macOS configuration profiles. This Early Access update delivers significant business benefits by enabling IT administrators to enforce sign-in policies quickly, ensuring compliance and maximizing the value of Docker subscriptions.

Key benefits

- Fast deployment and rollout: Configuration profiles can be rapidly deployed across a fleet of devices using Mobile Device Management (MDM) solutions, making it easy for IT admins to enforce sign-in requirements and other policies without manual intervention.

- Tamper-proof enforcement: Configuration profiles ensure that enforced policies, such as sign-in requirements, cannot be bypassed or disabled by users, providing a secure and reliable way to manage access to Docker Desktop (Figure 1).

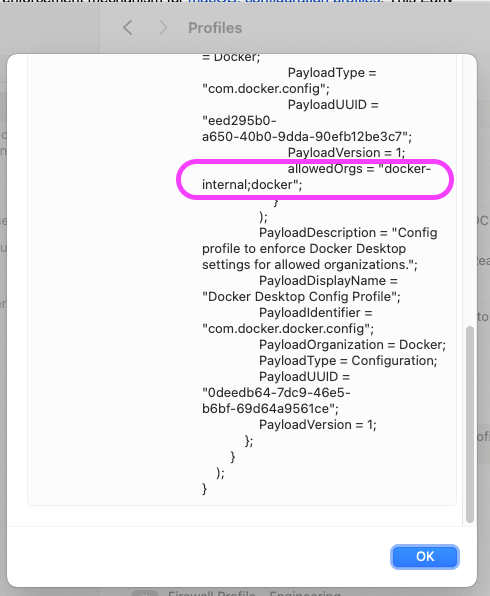

- Support for multiple organizations: More than one organization can now be defined in the `allowedOrgs` field, offering flexibility for users who need access to Docker Desktop under multiple organizational accounts (Figure 2).

How it works

macOS configuration profiles are XML files that contain specific settings to control and manage macOS device behavior. These profiles allow IT administrators to:

- Restrict access to Docker Desktop unless the user is authenticated.

- Prevent users from disabling or bypassing sign-in enforcement.

By distributing these profiles through MDM solutions, IT admins can manage large device fleets efficiently and consistently enforce organizational policies.

Configuration profiles, along with the Windows Registry key, are the latest examples of how Docker helps streamline administration and management.

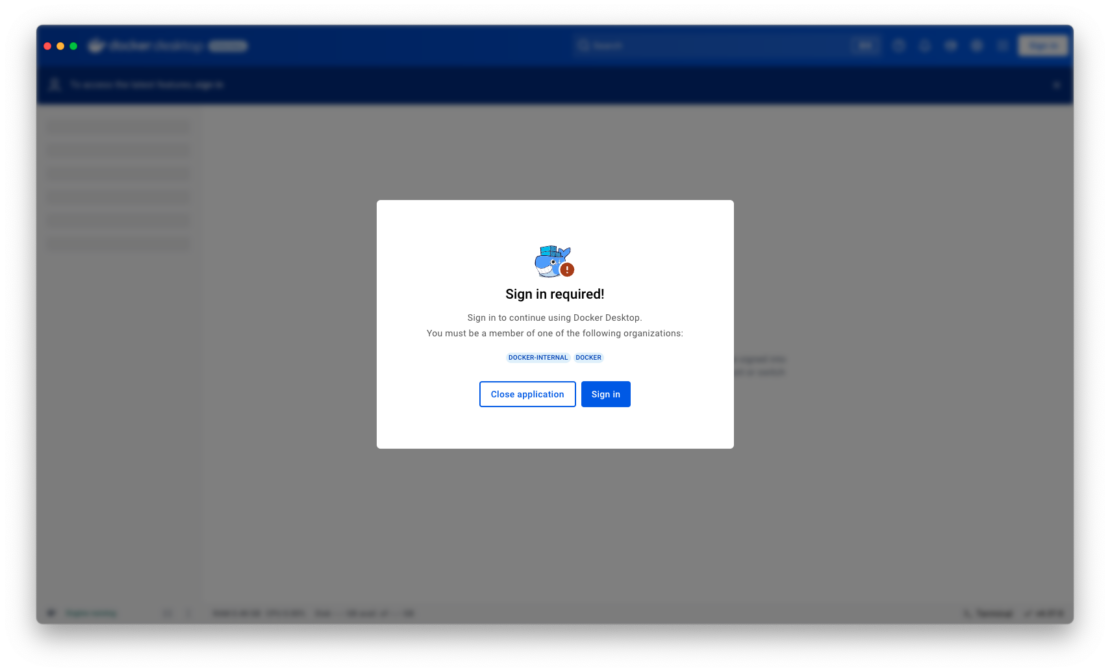

Enforce sign-in for multiple organizations

Docker now supports enforcing sign-in for more than one organization at a time, providing greater flexibility for users working across multiple teams or enterprises. The allowedOrgs field now accepts multiple strings, enabling IT admins to define more than one organization via any supported configuration method, including:

- registry.json

- Windows Registry key

- macOS plist

- macOS configuration profile

This enhancement makes it easier to enforce login policies across diverse organizational setups, streamlining access management while maintaining security (Figure 3).
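As a rough illustration of the registry.json method, the POSIX sh sketch below generates a file whose `allowedOrgs` array lists several organizations. `write_registry_json` is a hypothetical helper, the organization names are placeholders, and the destination path is left to the caller because the real location differs per OS (Docker's sign-in enforcement documentation lists it). The helper does no JSON escaping, so it assumes plain alphanumeric organization names.

```shell
#!/bin/sh
# Sketch: generate a registry.json that enforces sign-in for several
# Docker organizations. Assumes allowedOrgs accepts a JSON array of names.

# write_registry_json FILE ORG [ORG...]  (hypothetical helper)
write_registry_json() {
  if [ "$#" -lt 2 ]; then
    echo "usage: write_registry_json FILE ORG [ORG...]" >&2
    return 1
  fi
  out="$1"   # destination path; see Docker's docs for the real per-OS location
  shift
  json='{"allowedOrgs": ['
  sep=''
  for org in "$@"; do
    # No escaping: organization names are assumed to be plain alphanumerics.
    json="$json$sep\"$org\""
    sep=', '
  done
  json="$json]}"
  printf '%s\n' "$json" > "$out"
}
```

For example, `write_registry_json ./registry.json myorg-one myorg-two` writes `{"allowedOrgs": ["myorg-one", "myorg-two"]}`, which can then be copied to the location your MDM or deployment tooling manages.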

Learn more about the various sign-in enforcement methods.

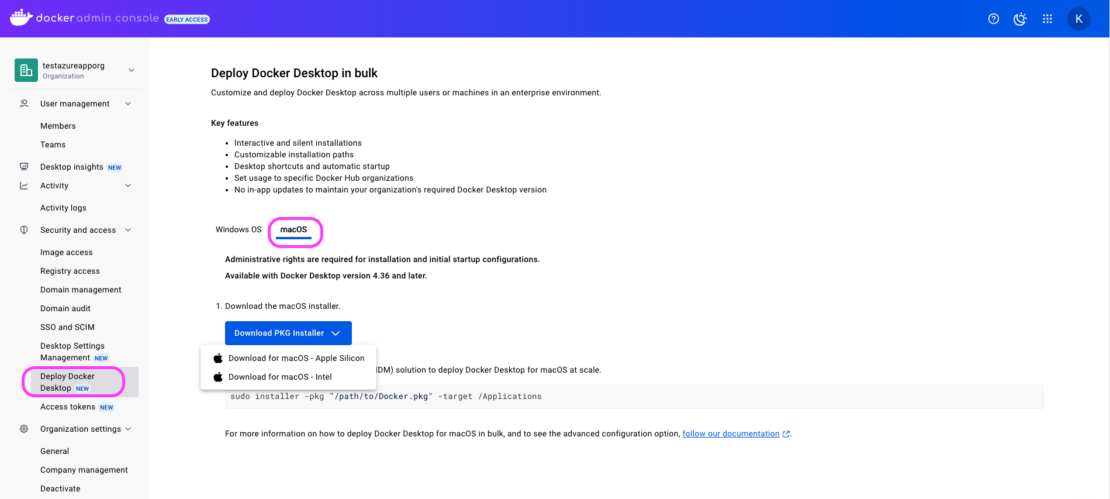

Deploy Docker Desktop for macOS in bulk with the PKG installer

Managing large-scale Docker Desktop deployments on macOS just got easier with the new PKG installer. Designed for enterprises and IT admins, the PKG installer offers significant advantages over the traditional DMG installer, streamlining the deployment process and enhancing security.

- Ease of use: Automate installations and reduce manual steps, minimizing user error and IT support requests.

- Consistency: Deliver a professional and predictable installation experience that meets enterprise standards.

- Streamlined deployment: Simplify software rollouts for macOS devices, saving time and resources during bulk installations.

- Enhanced security: Benefit from improved security measures that reduce the risk of tampering and ensure compliance with enterprise policies.

You can download the PKG installer via Admin Console > Security and Access > Deploy Docker Desktop > macOS. Options for both Intel and Arm architectures are also available for macOS and Windows, ensuring compatibility across devices.

Start deploying Docker Desktop more efficiently and securely today via the Admin Console (Figure 4).
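On managed Macs, a downloaded PKG is typically installed non-interactively with macOS's built-in `installer` command. The sketch below assumes both the Apple silicon and Intel PKGs have already been fetched from the Admin Console into a local directory; the `PKG_DIR` default and the two file names are hypothetical placeholders, so adjust them to whatever you actually downloaded.

```shell
#!/bin/sh
# Sketch: pick and install the Docker Desktop PKG that matches this Mac's CPU.
# PKG_DIR and the PKG file names are hypothetical placeholders.

PKG_DIR="${PKG_DIR:-/tmp/docker-pkgs}"

# Map `uname -m` output to the matching PKG file name.
pkg_for_arch() {
  case "$1" in
    arm64)  echo "Docker-arm64.pkg" ;;   # Apple silicon
    x86_64) echo "Docker-amd64.pkg" ;;   # Intel
    *)      return 1 ;;                  # unsupported architecture
  esac
}

install_docker_pkg() {
  arch=$(uname -m)
  pkg=$(pkg_for_arch "$arch") || {
    echo "unsupported architecture: $arch" >&2
    return 1
  }
  # installer(8) ships with macOS; it must run as root, as MDM scripts usually do.
  installer -pkg "$PKG_DIR/$pkg" -target /
}

# Usage on a managed Mac, as root: install_docker_pkg
```

Keeping the architecture mapping in its own function lets the same script be pushed to a mixed fleet without maintaining separate Intel and Apple silicon policies.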

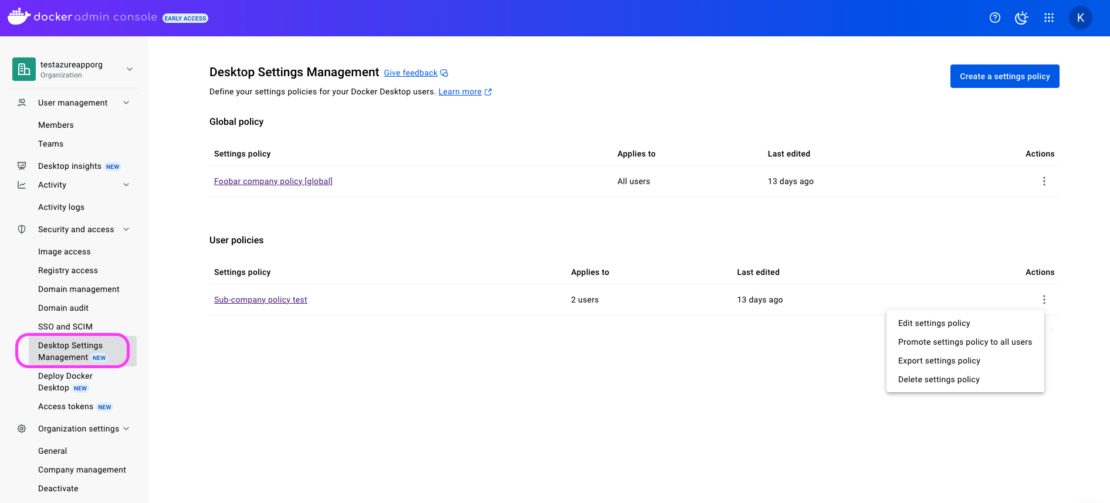

Desktop Settings Management (Early Access)

Managing Docker Desktop settings at scale is now easier than ever with the new Desktop Settings Management, available in Early Access for Docker Business customers. Admins can centrally deploy and enforce settings policies for Docker Desktop directly from the cloud via the Admin Console, ensuring consistency and efficiency across their organization.

Here’s what’s available now:

- Admin Console policies: Configure and enforce default Docker Desktop settings from the Admin Console.

- Quick import: Import existing configurations from an `admin-settings.json` file for seamless migration.

- Export and share: Export policies as JSON files to easily share with security and compliance teams.

- Targeted testing: Roll out policies to a smaller group of users for testing before deploying globally.
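For the Quick import path, an admin-settings.json file pairs each setting with a `value` and a `locked` flag. The sketch below writes a small example file; `write_admin_settings` is a hypothetical helper, and the keys shown (`configurationFileVersion`, `enhancedContainerIsolation`, `exposeDockerAPIOnTCP2375`) reflect Docker's Settings Management documentation as we understand it, so check them against the docs for your Docker Desktop version before importing.

```shell
#!/bin/sh
# Sketch: write a small admin-settings.json to import into Desktop Settings
# Management. Each setting is a {"locked", "value"} pair; "locked": true
# prevents users from changing it. The chosen settings are only examples.

write_admin_settings() {
  out="$1"   # destination path, e.g. ./admin-settings.json
  cat > "$out" <<'EOF'
{
  "configurationFileVersion": 2,
  "enhancedContainerIsolation": {
    "locked": true,
    "value": true
  },
  "exposeDockerAPIOnTCP2375": {
    "locked": true,
    "value": false
  }
}
EOF
}

# Usage: write_admin_settings ./admin-settings.json
```

The resulting file can then be imported under Admin Console > Security and Access > Desktop Settings Management and exported again as JSON for review.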

What’s next?

Although the Desktop Settings Management feature is in Early Access, we’re actively building additional functionality to enhance it, such as compliance reporting and automated policy enforcement capabilities. Stay tuned for more!

This is just the beginning of a powerful new way to simplify Docker Desktop management and ensure organizational compliance. Try it out now and help shape the future of settings management: Admin Console > Security and Access > Desktop Settings Management (Figure 5).

Streamlining data workflows with WSL 2 mono distribution

Simplify the Windows Subsystem for Linux (WSL 2) setup by eliminating the need to maintain two separate Docker Desktop WSL distributions. This update streamlines the WSL 2 configuration by consolidating the two previously required Docker Desktop WSL distributions into a single distribution, available in Docker Desktop for Windows with the WSL 2 backend.

The simplification of Docker Desktop’s WSL 2 setup is designed to make the codebase easier to understand and maintain. This enhances the ability to handle failures more effectively and increases the startup speed of Docker Desktop on WSL 2, allowing users to begin their work more quickly.

The value of streamlining data workflows and relocating data to a different drive on Windows with the WSL 2 backend in Docker Desktop spans these key areas:

- Improved performance: By separating data and system files, I/O contention between system operations and data operations is reduced, leading to faster access and processing.

- Enhanced storage management: Separating data from the main system drives allows for more efficient use of space.

- Increased flexibility with cross-platform compatibility: Ensuring consistent data workflows across different operating systems (macOS and Windows), especially when using Docker Desktop with WSL 2.

- Enhanced Docker performance: Docker performs better when processing data on a drive optimized for such tasks, reducing latency and improving container performance.

By implementing these practices, organizations can achieve more efficient, flexible, and high-performing data workflows, leveraging Docker Desktop’s capabilities on both macOS and Windows platforms.

Enhanced Container Isolation (ECI) improvements

- Allow any container to mount the Docker socket: Admins can now configure permissions to allow all containers to mount the Docker socket by adding `*` or `*:*` to the ECI Docker socket mount permission image list. This simplifies scenarios where broad access is required while maintaining security configuration through centralized control. Learn more in the advanced configuration documentation.

- Improved support for derived image permissions: The Docker socket mount permissions for derived images feature now supports wildcard tags (e.g., `alpine:*`), enabling admins to grant permissions for all versions of an image. Previously, specific tags like `alpine:latest` had to be listed, which was restrictive and required ongoing maintenance. Learn more about managing derived image permissions.

These enhancements reduce administrative overhead while maintaining a high level of security and control, making it easier to manage complex environments.
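To make the wildcard semantics concrete, the sketch below approximates an image allow-list check with POSIX sh `case` globbing. It is not how ECI is implemented: `image_allowed` is a hypothetical helper, and real image references may also carry a registry prefix such as `docker.io/library/`, which this sketch does not handle.

```shell
#!/bin/sh
# Rough approximation of an image allow list with wildcard entries.
# NOT Docker's ECI implementation; it only illustrates the matching idea.

# image_allowed IMAGE PATTERN...
# Succeeds if IMAGE (name:tag) matches any pattern, e.g. "alpine:*" or "*:*".
image_allowed() {
  image="$1"
  shift
  for pattern in "$@"; do
    # $pattern is deliberately unquoted here so case treats it as a glob.
    case "$image" in
      $pattern) return 0 ;;
    esac
  done
  return 1
}
```

With only `alpine:*` in the list, `alpine:3.20` is accepted and `busybox:1.36` is rejected; adding `*:*` (or `*`) accepts both, which is the "allow any container" behavior described above.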

Upgrade now

The Docker Desktop 4.36 release introduces a suite of features designed to simplify enterprise administration, improve security, and enhance operational efficiency. From enabling centralized policy enforcement with Desktop Settings Management to streamlining deployments with the macOS PKG installer, Docker continues to empower IT administrators with the tools they need to manage Docker Desktop at scale.

The improvements in Enhanced Container Isolation (ECI) and WSL 2 workflows further demonstrate Docker’s commitment to innovation, providing solutions that optimize performance, reduce complexity, and ensure compliance across diverse enterprise environments.

As businesses adopt increasingly complex development ecosystems, these updates highlight Docker’s focus on meeting the unique needs of enterprise teams, helping them stay agile, secure, and productive. Whether you’re managing access for multiple organizations, deploying tools across platforms, or leveraging enhanced image permissions, Docker Desktop 4.36 sets a new standard for enterprise administration.

Start exploring these powerful new features today and unlock the full potential of Docker Desktop for your organization.

Learn more

- Subscribe to the Docker Newsletter.

- Authenticate and update to receive your subscription level’s newest Docker Desktop features.

- Learn about our sign-in enforcement options.

- Learn more about host networking support.

- New to Docker? Create an account.

- Have questions? The Docker community is here to help.

AMD Bus Lock Trap Support Merged For Linux 6.13

Doing more with less: LLM quantization (part 2)

Sched_Ext Changes Merged For Linux 6.13 With LLC & NUMA Awareness

XFS With Linux 6.13 Sees Major Rework To Real-Time Volumes

Intel NPU Library v1.4 Adds Turbo Mode & Tensor Operations

New Sound Hardware Support In Linux 6.13

The Official Raspberry Pi Camera Module Guide out now: build amazing vision-based projects

We are enormously proud to reveal The Official Raspberry Pi Camera Module Guide (2nd edition), which is out now. David Plowman, a Raspberry Pi engineer specialising in camera software, algorithms, and image-processing hardware, authored this official guide.

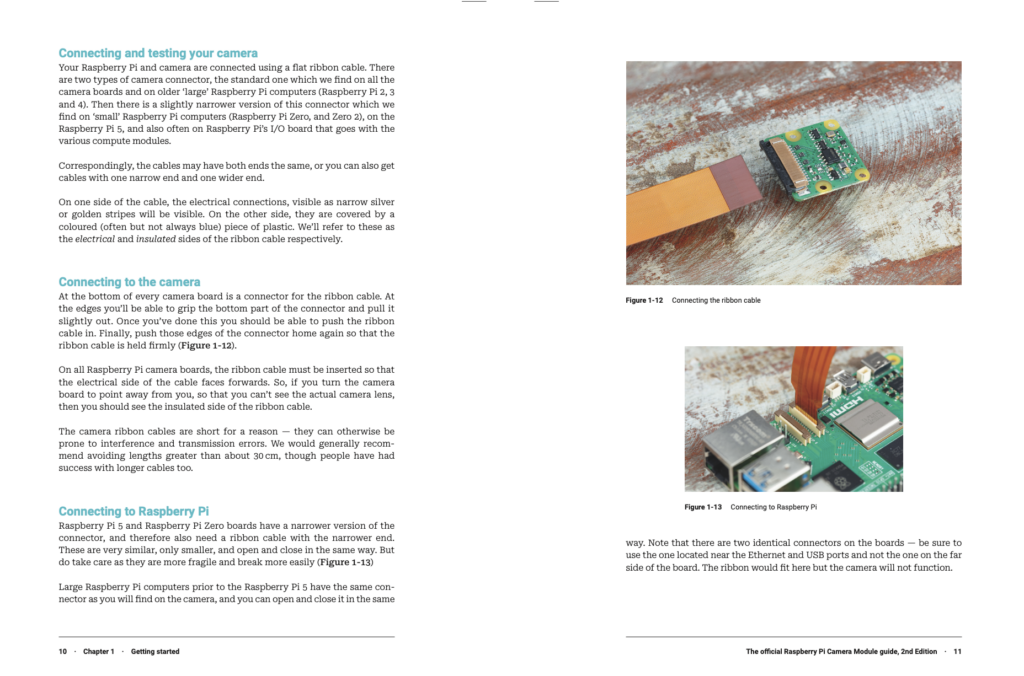

This detailed book walks you through all the different types of Camera Module hardware, including Raspberry Pi Camera Module 3, High Quality Camera, Global Shutter Camera, and older models; discover how to attach them to Raspberry Pi and integrate vision technology with your projects. This edition also covers new code libraries, including the latest PiCamera2 Python library and rpicam command-line applications, as well as integration with the new Raspberry Pi AI Kit.

Save time with our starter guide

Our starter guide has clear diagrams explaining how to connect various Camera Modules to the new Raspberry Pi boards. It also explains how to fit custom lenses to HQ and GS Camera Modules using C-CS adaptors. Everything is outlined in step-by-step tutorials with diagrams and photographs, making it quick and easy to get your camera up and running.

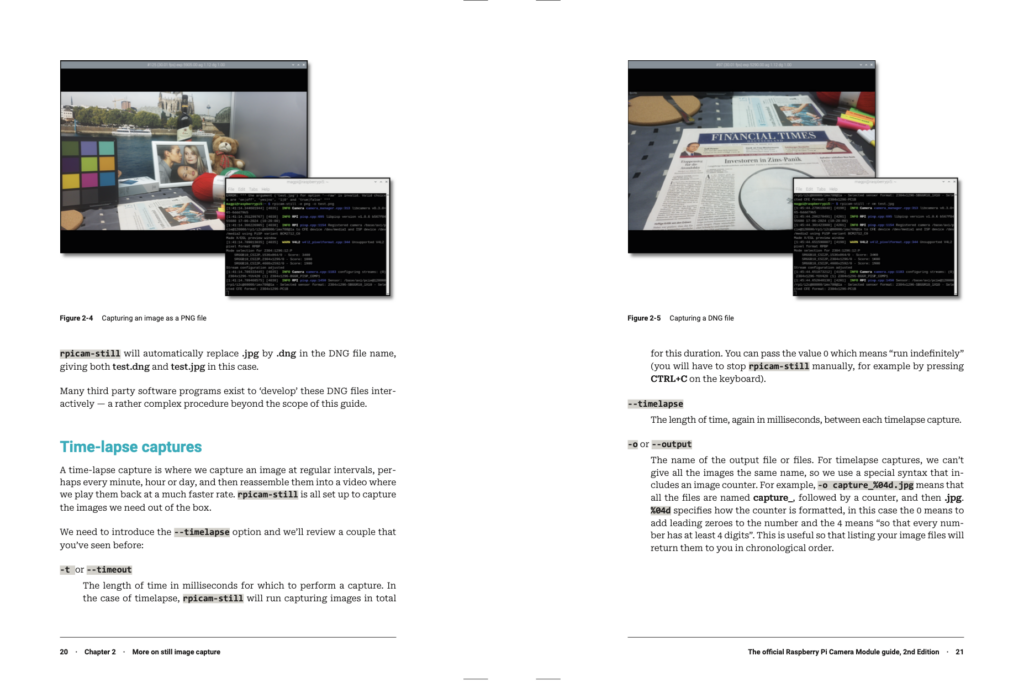

Test your camera properly

You’ll discover how to connect your camera to a Raspberry Pi and test it using the new rpicam command-line applications, which replace the older libcamera applications. The guide also covers the new PiCamera2 Python library, for integrating Camera Module technology with your software.
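As a minimal sketch of that testing step, assuming Raspberry Pi OS with a Camera Module attached: `pick_still_cmd` and `camera_check` are hypothetical helper names, while `rpicam-hello --list-cameras` and `rpicam-still -o` are standard rpicam-apps invocations. The fallback to the older `libcamera-*` names only matters on OS images that predate the rename.

```shell
#!/bin/sh
# Sketch: quick Camera Module check on Raspberry Pi OS (hypothetical helpers).

# Print the first available still-capture app: the current rpicam-apps name,
# or the older libcamera-apps name on images that predate the rename.
pick_still_cmd() {
  for cmd in rpicam-still libcamera-still; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      return 0
    fi
  done
  return 1
}

camera_check() {
  still=$(pick_still_cmd) || { echo "no rpicam/libcamera apps found" >&2; return 1; }
  hello="${still%-still}-hello"          # e.g. rpicam-still -> rpicam-hello
  "$hello" --list-cameras || return 1    # list the Camera Modules that were detected
  "$still" -o test.jpg                   # capture one still image to test.jpg
}

# Usage: camera_check && ls -l test.jpg
```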

Get more from your images

Discover detailed information about how Camera Module works, and how to get the most from your images. You’ll learn how to use RAW formats and tuning files, HDR modes, and preview windows; custom resolutions, encoders, and file formats; target exposure and autofocus; shutter speed, and gain, enabling you to get the very best out of your imaging hardware.

Build smarter projects with AI Kit integration

A new chapter covers the integration of the AI Kit with Raspberry Pi Camera Modules to create smart imaging applications. This adds neural processing to your projects, enabling fast inference of objects captured by the camera.

Boost your skills with pre-built projects

The Official Raspberry Pi Camera Module Guide is packed with projects. Take selfies and stop-motion videos, experiment with high-speed and time-lapse photography, set up a security camera and smart door, build a bird box and wildlife camera trap, take your camera underwater, and much more! All of the code is tested and updated for the latest Raspberry Pi OS, and is available on GitHub for inspection.

Click here to pick up your copy of The Official Raspberry Pi Camera Module Guide (2nd edition).

The post The Official Raspberry Pi Camera Module Guide out now: build amazing vision-based projects appeared first on Raspberry Pi.

Arm Tech Symposia: AI Technology Transformation Requires Unprecedented Ecosystem Collaborations

The Arm Tech Symposia 2024 events in China, Japan, South Korea and Taiwan were some of the biggest and best-attended events ever held by Arm in Asia. Their scale matched the magnitude of the moment the technology industry now faces.

As Chris Bergey, SVP and GM of Arm’s Client Line of Business, said in the Tech Symposia keynote presentation in Taiwan: “This is the most important moment in the history of technology.”

There are significant opportunities for AI to transform billions of lives around the world, but only if the ecosystem works together like never before.

A re-thinking of silicon

At the heart of these ecosystem collaborations is a broad re-think of how the industry approaches the development and deployment of technologies. This is particularly applicable to the semiconductor industry, with silicon no longer a series of unrelated components but instead becoming “the new motherboard” to meet the demands of AI.

This means multiple components co-existing within the same package, providing lower latency, increased bandwidth and greater power efficiency.

Silicon technologies are already transforming the everyday lives of people worldwide, enabling innovative AI features on smartphones, like the real-time translation of languages and text summarization, to name a few.

As James McNiven, VP of Product Management for Arm’s Client Line of Business, stated in the South Korea Tech Symposia keynote: “AI is about making our future better. The potential impact of AI is transformative.”

The importance of the Arm Compute Platform

The Arm Compute Platform is playing a significant role in the growth of AI. It combines hardware and software for best-in-class technology solutions across a wide range of markets, whether that’s AI smartphones, software-defined vehicles or data centers.

This is supported by the world’s largest software ecosystem, with more than 20 million software developers writing software for Arm, on Arm. In fact, all the Tech Symposia keynotes made the following statement: “We know that hardware is nothing without software.”

How software “drives the technology flywheel”

Software has always been an integral part of the Arm Compute Platform, with Arm delivering the ideal platform for developers to “make their dreams (applications) a reality” in three key ways.

Firstly, Arm’s consistent compute platform touches 100 percent of the world’s connected population. This means developers can “write once and deploy everywhere.”

The foundation of the platform is the Arm architecture and its continuous evolution through the regular introduction of new features and instruction-sets that accelerate key workloads to benefit developers and the end-user.

SVE2 is one feature that is present across AI-enabled flagship smartphones built on the new MediaTek Dimensity 9400 chipset. It incorporates vector instructions to improve video and image processing capabilities, leading to better quality photos and longer-lasting video.

Secondly, Arm offers acceleration capabilities that deliver optimized performance for developers’ applications. This is not just about high-end accelerator chips, but also about access to AI-enabled software that unlocks performance.

One example of this is Arm Kleidi, which seamlessly integrates with leading frameworks to ensure AI workloads run best on the Arm CPU. Developers can then unlock this accelerated performance with no additional work required.

At the Arm Tech Symposia Japan event, Dipti Vachani, SVP and GM of Arm’s Automotive Line of Business, said: “We are committed to abstracting away the hardware from the developer, so they can focus on creating world changing applications without having to worry about any technical complexities around performance or integration.”

This means that when new versions of Meta’s Llama, Google AI Edge’s MediaPipe and Tencent’s Hunyuan come online, developers can be confident that no performance is being left on the table with the Arm CPU.

Kleidi integrations are set to accelerate billions of AI workloads on the Arm Compute Platform, with the recent PyTorch integration leading to 2.5x faster time-to-first token on Arm-based AWS Graviton processors when running the Llama 3 large language model (LLM).

Finally, developers need a platform that is easy to access and use. Arm has made this a reality through significant software investments that ensure developing on the Arm Compute Platform is a simplified, seamless experience that “just works.”

As each Arm Tech Symposia keynote speaker summarized: “The power of Arm and our ecosystem is that we deliver what developers need to simplify the process, accelerate time-to-market, save costs and optimize performance.”

The role of the Arm ecosystem

The importance of the Arm ecosystem in making new technologies a reality was highlighted throughout the keynote presentations. This is especially true for new silicon designs that require a combination of core expertise across many different areas.

As Dermot O’Driscoll, VP, Product Management for Arm’s Infrastructure Line of Business, said at the Arm Tech Symposia event in Shanghai, China: “No one company will be able to cover every single level of design and integration alone.”

Empowering these powerful ecosystem collaborations is a core aim of Arm Total Design, which enables the ecosystem to accelerate the development and deployment of silicon solutions that are more effective, efficient and performant. The program is growing worldwide, with the number of members doubling since the program was launched in late 2023. Each Arm Total Design partner offers something unique that accelerates future silicon designs, particularly those that are built on Arm Neoverse Compute Subsystems (CSS).

One company that exemplifies the spirit and value of Arm Total Design is South Korea-based Rebellions. Recently, it announced the development of a new large-scale AI platform, the REBEL AI platform, to drive power efficiency for AI workloads. Built on Arm Neoverse V3 CSS, the platform uses a 2nm process node and packaging from Samsung Foundry and leverages design services from ADtechnology. This demonstrates true ecosystem collaboration, with different companies offering different types of highly valuable expertise.

Dermot O’Driscoll said: “The AI era requires custom silicon, and it’s only made possible because everyone in this ecosystem is working together, lifting each other up and making it possible to quickly and efficiently meet the rising demands of AI.”

Arm Total Design is also helping to enable a new thriving chiplet ecosystem that already involves over 50 leading technology partners who are working with Arm on the Chiplet System Architecture (CSA). This is creating the framework for standards that will enable a thriving chiplet market, which is key to meeting ongoing silicon design and compute challenges in the age of AI.

The journey to 100 billion Arm-based devices running AI

All the keynote speakers closed their Arm Tech Symposia keynotes by reinforcing the commitment that Arm CEO Rene Haas made at COMPUTEX in June 2024: 100 billion Arm-based devices running AI by the end of 2025.

However, this goal is only possible if ecosystem partners from every corner of the technology industry work together like never before. Fortunately, as explained in all the keynotes, there are already many examples of this work in action.

The Arm Compute Platform sits at the center of these ecosystem collaborations, providing the technology foundation for AI that will help to transform billions of lives around the world.

The post Arm Tech Symposia: AI Technology Transformation Requires Unprecedented Ecosystem Collaborations appeared first on Arm Newsroom.

Mercury X1 wheeled humanoid robot combines NVIDIA Jetson Xavier NX AI controller and ESP32 motor control boards

Elephant Robotics Mercury X1 is a 1.2-meter-tall wheeled humanoid robot with two robotic arms, using an NVIDIA Jetson Xavier NX as its main controller and ESP32 microcontrollers for motor control. It is suitable for research, education, service, entertainment, and remote operation. The robot offers 19 degrees of freedom, can lift payloads of up to 1kg, works for up to 8 hours on a charge, and travels at up to 1.2m/s (about 4.3km/h). It’s based on the company’s Mercury B1 dual-arm robot and a high-performance mobile base.

Mercury X1 specifications:

- Main controller – NVIDIA Jetson Xavier NX
  - CPU – 6-core NVIDIA Carmel ARM v8.2 64-bit CPU with 6MB L2 + 4MB L3 caches
  - GPU – 384-core NVIDIA Volta GPU with 48 Tensor Cores
  - AI accelerators – 2x NVDLA deep learning accelerators delivering up to 21 TOPS at 15 Watts
  - System Memory – 8 GB 128-bit LPDDR4x @ 51.2GB/s
  - Storage – 16 [...]

The post Mercury X1 wheeled humanoid robot combines NVIDIA Jetson Xavier NX AI controller and ESP32 motor control boards appeared first on CNX Software - Embedded Systems News.

(D241122) godns webhook coturn and dynamic IP address on FreeBSD

This is for FreeBSD. It may not work as-is on Linux, because FreeBSD's sh and sed differ from what Linux systems usually ship (bash and GNU sed).

We're running a STUN/TURN server. It works well, but we don't have a static IP address, so every time we get a new IP address we need to change the coturn config and restart the service.

One way to handle this is a cron job that runs a shell script every 5 minutes to check the public IP address and restart the service if it has changed:

```bash
#!/bin/bash
# Linux only, doesn't work on FreeBSD (relies on bash [[ ]] and GNU sed)
domain="<MY_DOMAIN>"   # replace with the hostname that points at this server

current_external_ip_config=$(grep "^external-ip" /etc/turnserver.conf | cut -d'=' -f2)
current_external_ip=$(dig +short "$domain")

# Only rewrite the config and restart when DNS returned a different address
if [[ -n "$current_external_ip" ]] && [[ "$current_external_ip_config" != "$current_external_ip" ]]; then
    sed -i "/^external-ip=/ c external-ip=$current_external_ip" /etc/turnserver.conf
    systemctl restart coturn
fi
```

ref: set up with dynamic IP address

Since we're already running a godns daemon to update our IP on Cloudflare DNS, we also want godns to send a webhook to the coturn server whenever it updates the IP on Cloudflare. That may be more effective.

So this is what we have:

```
$ cat /etc/godns/config.json
{
  "provider": "Cloudflare",
  "login_token": "YOUR_TOKEN",
  "domains": [
    {
      "domain_name": "yourdomain.com",
      "sub_domains": [
        "@"
      ]
    }
  ],
  "ip_urls": [
    "https://api.ipify.org"
  ],
  "ip_type": "IPv4",
  "interval": 300,
  "resolver": "8.8.8.8",
  "webhook": {
    "enabled": true,
    "url": "http://your.coturn.webhook.endpoint:9000/hooks/godns",
    "request_body": "{ \"domain\": \"{{.Domain}}\", \"ip\": \"{{.CurrentIP}}\", \"ip_type\": \"{{.IPType}}\" }"
  }
}
```

```
$ cat /usr/local/etc/webhook.yaml
---
# See https://github.com/adnanh/webhook/wiki for further information on this
# file and its options. Instead of YAML, you can also define your
# configuration as JSON. We've picked YAML for these examples because it
# supports comments, whereas JSON does not.
#
# In the default configuration, webhook runs as user nobody. Depending on
# the actions you want your webhooks to take, you might want to run it as
# user root. Set the rc.conf(5) variable webhook_user to the desired user,
# and restart webhook.
# An example for a simple webhook you can call from a browser or with
# wget(1) or curl(1):
# curl -v 'localhost:9000/hooks/samplewebhook?secret=geheim'
- id: godns
  execute-command: "/usr/local/etc/godns.sh"
  command-working-directory: "/usr/local/etc"
  pass-arguments-to-command:
    - source: payload
      name: domain
    - source: payload
      name: ip
    - source: payload
      name: ip_type
  trigger-rule:
    and:
      - match:
          type: value
          value: "your.domain.com"
          parameter:
            source: payload
            name: domain
```

shell script

```
$ cat /usr/local/etc/godns.sh
#!/bin/sh
# Called by webhook with: $1 = domain, $2 = ip, $3 = ip_type

# write ip log to a file
now="$(date +'%y%m%d%H%M%S%N')"
echo "$now $1 $2 $3" >> godns.txt

# restart coturn when ip changed
turnserver_config="/usr/local/etc/turnserver.conf"
current_external_ip_config=$(grep "^external-ip" "$turnserver_config" | cut -d'=' -f2)
current_external_ip_webhook=$2

# Quoting keeps [ ] from failing when the config has no external-ip line yet
if [ -n "$current_external_ip_webhook" ] && [ "$current_external_ip_config" != "$current_external_ip_webhook" ]; then
    # FreeBSD sed: -i takes the backup suffix (.old) as a separate argument
    sed -i .old -e "s/external-ip=$current_external_ip_config/external-ip=$current_external_ip_webhook/g" "$turnserver_config"
    service turnserver restart
fi
```

It may not work well enough if something goes wrong and godns cannot send the webhook to the coturn server. But for now we stick with it.

ASUSTOR Flashstor Gen2 NAS features AMD Ryzen Embedded V3C14, 10GbE networking, up to 12x NVMe SSD sockets

ASUSTOR Flashstor 6 Gen2 and Flashstor 12 Pro Gen2 are NAS systems based on the AMD Ryzen Embedded V3C14 quad-core processor, with up to two 10GbE RJ45 ports and room for up to 6 or 12 M.2 NVMe SSDs, respectively. The Gen2 models update the ASUSTOR Flashstor NAS launched last year, which had similar specifications, including 10GbE and up to 12 M.2 SSDs, but was based on a relatively low-end Intel Celeron N5105 quad-core Jasper Lake processor. The new Gen2 NAS family features a more powerful AMD Ryzen Embedded V3C14 SoC, support for up to 64GB of ECC RAM, and USB4 ports. The downside is that it lacks video output, so it can’t be used for 4K/8K video playback like its predecessor.

Flashstor Gen2 NAS specifications:

- SoC – AMD Ryzen Embedded V3C14 quad-core/8-thread processor @ 2.3/3.8GHz; TDP: 15W
- System Memory
  - Flashstor 6 Gen2 (FS6806X) – 8 GB DDR5-4800
  - Flashstor 12 Pro [...]

The post ASUSTOR Flashstor Gen2 NAS features AMD Ryzen Embedded V3C14, 10GbE networking, up to 12x NVMe SSD sockets appeared first on CNX Software - Embedded Systems News.

Important Announcement: Free Shipping Policy Adjustment and Service Enhancements

Thank you for your continued support! To further enhance your shopping experience, we are rolling out a series of new features designed to provide more efficient and localized services. Additionally, we will be updating our current free shipping policy. Please take note of the following important updates:

Recent Logistics Enhancements

Between June and October 2024, we implemented several key logistics upgrades to enhance service quality and lay the groundwork for upcoming policy adjustments:

1. Expanded Shipping Options

- US Warehouse: Added UPS-2 Day, FedEx, and UPS-Ground to offer more fast shipping options.

- CN Warehouse: Introduced Airtransport Direct Line small package service, reducing delivery times from 20-35 days to just 7-10 days.

2. Optimized Small Parcel Shipping and Cost Control

- Adjusted packaging specifications for CN warehouse shipments, significantly lowering costs while improving shipping efficiency.

3. Accelerated Overall Delivery Times

- Streamlined export customs clearance from Shenzhen and synchronized handoffs with European and American couriers, reducing delivery times to just 3.5 days.

Enhanced Local Services for a Better Shopping Experience

To meet the diverse needs of our global users, we’ve implemented several improvements in local purchasing, logistics, and tax services:

1. Local Warehouse Pre-Order

- Launch Date: Already Live

- Highlights: Pre-order popular products from our US Warehouse and DE Warehouse. If immediate stock is needed, you can switch to the CN Warehouse for purchase.

2. Enhanced VAT Services for EU Customers

- Launch Date: Already Live

- Highlights: New VAT ID verification feature allows EU customers to shop tax-free with a valid VAT ID.

3. US Warehouse Sales Tax Implementation

- Launch Date: January 1, 2025

- Highlights: Automatic calculation of sales tax to comply with US local tax regulations.

Free Shipping Policy Adjustment

Starting December 31, 2024, our current free shipping policy (CN warehouse orders over $150, US & DE warehouse orders over $100) will no longer apply.

We understand that this change may cause some inconvenience in the short term. However, our aim is to offer more flexible and efficient shipping options, ensuring your shopping experience is more personalized and seamless.

Listening to Your Suggestions for Continuous Improvement

We understand that excellent logistics service stems from listening to every customer’s needs. During the optimization process, we received valuable suggestions, such as:

- Adding a local warehouse in Australia to provide faster delivery for customers in that region.

- Improving packaging designs to enhance protection during transit.

- Supporting flexible delivery schedules, allowing customers to choose delivery times that work best for them.

We welcome your continued input! Starting today, submit your feedback via our Feedback Form, and receive coupon rewards for all adopted suggestions.

Important Reminder: Free Shipping Policy End Date

- Current free shipping policy will officially end on December 31, 2024.

- Plan your purchases in advance to enjoy the remaining free shipping benefits!

We are also working on future logistics enhancements and may introduce region-specific free shipping or special holiday promotions, so stay tuned!

Thank You for Your Support

Your trust and support inspire Seeed Studio to keep innovating. We remain focused on improving localized services, listening to your needs, and delivering a more convenient and efficient shopping experience.

If you have any questions, please don’t hesitate to contact our customer support team. Together, let’s move towards a smarter, more efficient future!

The post Important Announcement: Free Shipping Policy Adjustment and Service Enhancements appeared first on Latest Open Tech From Seeed.

Nice File Performance Optimizations Coming With Linux 6.13

Friday Five — November 22, 2024

NVIDIA JetPack 6.1 Boosts Performance and Security through Camera Stack Optimizations and Introduction of Firmware TPM


NVIDIA JetPack has continuously evolved to offer cutting-edge software tailored to the growing needs of edge AI and robotic developers. With each release, JetPack has enhanced its performance, introduced new features, and optimized existing tools to deliver increased value to its users. This means that your existing Jetson Orin-based products experience performance optimizations by upgrading to…