Third Eye assistive vision | The MagPi #149

This #MagPiMonday, we take a look at Md. Khairul Alam’s potentially life-changing project, which aims to use AI to assist people living with a visual impairment.

Technology has long had the power to make a big difference to people’s lives, and for those who are visually impaired, the changes can be revolutionary. Over the years, there has been a noticeable growth in the number of assistive apps. As well as JAWS — a popular computer screen reader for Windows — and software that enables users to navigate phones and tablets, there are audio-descriptive apps that use smart device cameras to read physical documents and recognise items in someone’s immediate environment.

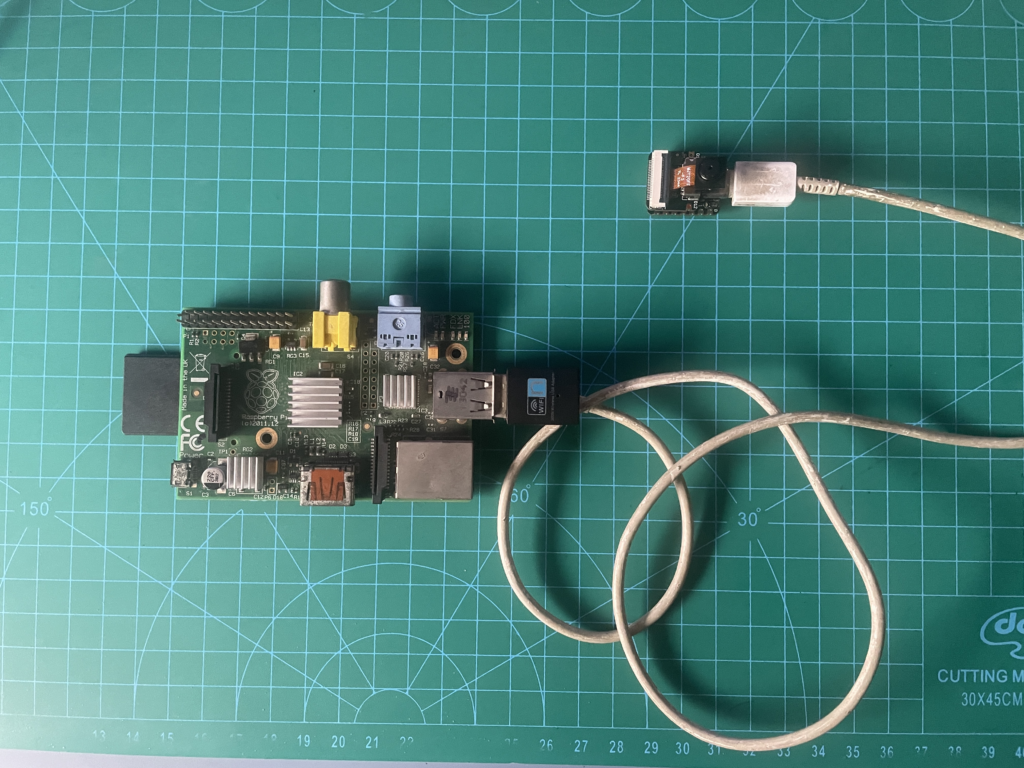

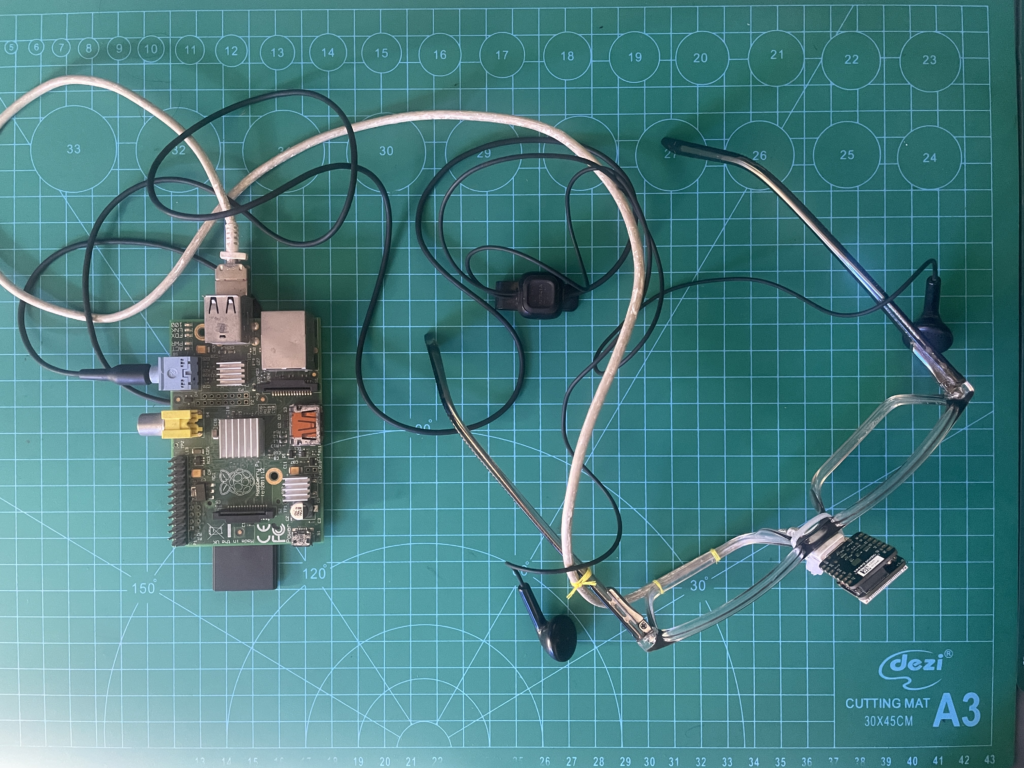

Understanding the challenges facing people living with a visual impairment, maker and developer Md. Khairul Alam has sought to create an inexpensive, wearable navigation tool that will free up the user’s hands and describe what they would see from their own eyes’ perspective. Built around a pair of spectacles, it uses a small camera sensor to gather visual information, which is then sent to a Raspberry Pi 1 Model B for interpretation. The user then hears an audio description of whatever the camera sees.

There’s no doubting the positive impact this project could have on scores of people around the world. “Globally, around 2.2 billion [people] don’t have the capability to see, and 90% of them come from low-income countries,” Khairul says. “A low-cost solution for people living with a visual impairment is necessary to give them flexibility so they can easily navigate and, having carried out research, I realised edge computer vision can be a potential answer to this problem.”

Cutting edge

Edge computer vision is potentially transformative. Visual data is captured by an edge device, such as a camera, and processed locally rather than being sent to the cloud. Because the information is processed close to its source, latency is reduced, allowing fast, real-time responses. This is particularly vital for a visually impaired user, who needs to make rapid sense of their environment.

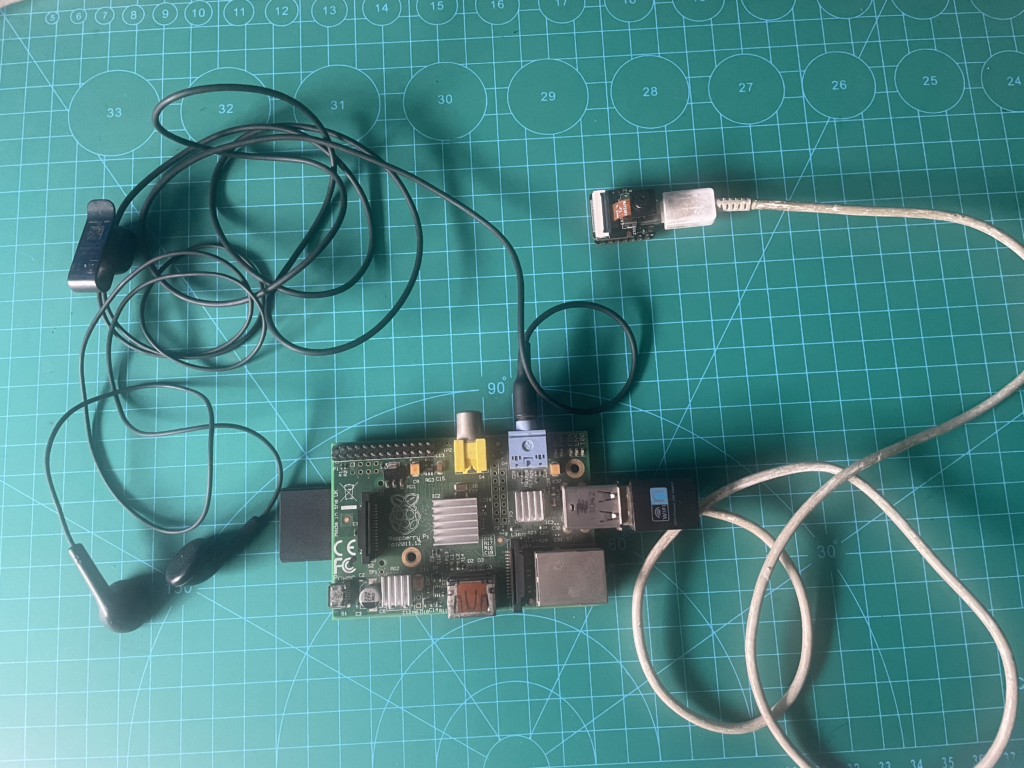

For his project, Khairul chose to use the Xiao ESP32S3 Sense module which, aside from a camera sensor and a digital microphone, has an integrated Xtensa ESP32-S3R8 SoC, 8MB of flash memory, and a microSD card slot. This was mounted onto the centre of a pair of spectacles and connected to a Raspberry Pi computer using a USB-C cable, with a pair of headphones plugged into Raspberry Pi’s audio out port. With those connections made, Khairul could concentrate on the project’s software.
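To give a feel for the Raspberry Pi end of that USB-C link, here is a minimal Python sketch that reads detection messages from the module over USB serial. The article doesn’t describe the message format, so the one-detection-per-line `label,x,y` format, the `/dev/ttyACM0` port, and the 115200 baud rate are hypothetical; reading the port relies on the third-party pyserial package.

```python
from typing import Iterable, Iterator, NamedTuple, Optional


class Detection(NamedTuple):
    """One detection as reported by the camera module (hypothetical format)."""
    label: str
    x: int  # horizontal centre of the object, in model-input pixels
    y: int  # vertical centre of the object


def parse_line(line: str) -> Optional[Detection]:
    """Parse a 'label,x,y' line; return None if the line is malformed."""
    parts = line.strip().split(",")
    if len(parts) != 3 or not parts[0].strip():
        return None
    try:
        return Detection(parts[0].strip(), int(parts[1]), int(parts[2]))
    except ValueError:
        return None


def detections(lines: Iterable[str]) -> Iterator[Detection]:
    """Yield only the well-formed detections from a stream of text lines."""
    for line in lines:
        det = parse_line(line)
        if det is not None:
            yield det


def serial_lines(port: str = "/dev/ttyACM0", baud: int = 115200) -> Iterator[str]:
    """Yield decoded text lines from the USB serial link (needs pyserial)."""
    import serial  # imported lazily so the parser works without pyserial

    with serial.Serial(port, baud, timeout=1) as link:
        while True:
            raw = link.readline()  # b"" if nothing arrives before the timeout
            if raw:
                yield raw.decode("utf-8", errors="replace")
```

On the Pi, something like `for det in detections(serial_lines()): print(det)` would print each parsed detection; malformed lines are skipped rather than crashing the loop.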

As you can imagine, machine learning is an integral part of this project; it needs to accurately detect and identify objects. Khairul used Edge Impulse Studio to train his object detection model. This tool is well equipped for building datasets and, in this case, one needed to be created from scratch. “When I started working on the project, I did not find any ready-made dataset for this specific purpose,” he tells us. “A rich dataset is very important for good accuracy, so I made a simple dataset for experimental purposes.”

Object detection

Khairul initially concentrated on six objects, uploading 188 images to help identify items including chairs, tables, beds, and basins. The more images he could take of an object, the greater the accuracy, but gathering them posed something of a challenge. “For this type of work, I needed a unique and rich dataset for a good result, and this was the toughest job,” he explains. Indeed, he’s still working on a larger dataset, which takes a lot of time, but the model, once uploaded to the Xiao ESP32S3 Sense, has already begun to yield some positive results.
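Edge Impulse Studio manages its own training and test sets, but a small, generic Python sketch shows why dataset size matters: it counts images per class and holds out a fixed fraction of each class for testing. The file names, the 20% test fraction, and the helper names are illustrative assumptions, not details of Khairul’s dataset.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # (image path, class label)


def class_counts(samples: Sequence[Sample]) -> Dict[str, int]:
    """Count how many images each class has, to spot under-represented ones."""
    return dict(Counter(label for _, label in samples))


def stratified_split(samples: Sequence[Sample], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split so each class gives roughly test_fraction of its images to the test set."""
    if not 0.0 <= test_fraction < 1.0:
        raise ValueError("test_fraction must be in [0, 1)")
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label):
        items = list(by_label[label])
        rng.shuffle(items)
        n_test = round(len(items) * test_fraction)
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

With 188 images across six classes (about 31 per class on average), a 20% hold-out leaves only around six test images per class, which is why every extra image counts.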

When an object is detected, the module returns the object’s name and position. “After detecting and identifying the object, Raspberry Pi is then used to announce its name; Raspberry Pi has built-in audio support, and Python has a number of text-to-speech libraries,” Khairul says. The project uses a free software package called Festival, developed by the Centre for Speech Technology Research at the University of Edinburgh, which converts the text into speech that the user hears through the headphones.
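The announcement step could look something like the following Python sketch, which turns a detected label and horizontal position into a short sentence and speaks it with Festival’s `--tts` mode, which reads text from standard input. The three-way left/ahead/right split, the 96-pixel frame width, and the three-second repeat cooldown are hypothetical choices, not taken from the project.

```python
import subprocess
import time
from typing import Callable, Optional


def position_phrase(x: int, frame_width: int = 96) -> str:
    """Map a horizontal pixel coordinate to a spoken direction."""
    if not 0 <= x < frame_width:
        raise ValueError("x must lie inside the frame")
    third = frame_width / 3
    if x < third:
        return "on your left"
    if x < 2 * third:
        return "ahead"
    return "on your right"


def describe(label: str, x: int, frame_width: int = 96) -> str:
    """Build the sentence to announce, e.g. 'chair ahead'."""
    return f"{label} {position_phrase(x, frame_width)}"


def speak_with_festival(text: str) -> None:
    """Pipe text into Festival's --tts mode, which reads it aloud."""
    subprocess.run(["festival", "--tts"], input=text, text=True, check=True)


class Announcer:
    """Suppress repeats of the same sentence within a cooldown window."""

    def __init__(self, speak: Callable[[str], None] = speak_with_festival,
                 cooldown_s: float = 3.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._speak = speak
        self._cooldown = cooldown_s
        self._clock = clock
        self._last_text: Optional[str] = None
        self._last_time = float("-inf")

    def announce(self, text: str) -> bool:
        """Speak text unless it was just spoken; return True if spoken."""
        now = self._clock()
        if text == self._last_text and now - self._last_time < self._cooldown:
            return False
        self._speak(text)
        self._last_text, self._last_time = text, now
        return True
```

For example, `Announcer().announce(describe("chair", 50))` would say “chair ahead” once and stay quiet if the same sentence arrives again within the cooldown; the `speak` and `clock` parameters can be swapped out for testing.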

For convenience, all of this is currently being powered by a small rechargeable lithium-ion battery, which is connected by a long wire to enable it to sit in the user’s pocket. “Power consumption has been another important consideration,” Khairul notes, “and because it’s a portable device, it needs to be very power efficient.” Since Third Eye is designed to be worn, it also needs to feel right. “The form factor is a considerable factor — the project should be as compact as possible,” Khairul adds.
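Battery life is easiest to reason about in terms of energy: the cell stores capacity × nominal voltage, and the load draws voltage × current. The Python sketch below assumes, hypothetically, a single 3.7 V lithium-ion cell feeding a 5 V boost converter at 85% efficiency; the project’s actual battery capacity and current draw are not given in the article.

```python
def runtime_hours(capacity_mah: float, cell_voltage: float, load_voltage: float,
                  load_current_ma: float, converter_efficiency: float = 0.85) -> float:
    """Estimate runtime by comparing stored energy with the load's power draw.

    Energy in the cell (mWh) = capacity_mah * cell_voltage; the converter
    delivers only converter_efficiency of it to the load, which draws
    load_voltage * load_current_ma (mW).
    """
    if min(capacity_mah, cell_voltage, load_voltage, load_current_ma) <= 0:
        raise ValueError("all quantities must be positive")
    if not 0 < converter_efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    usable_mwh = capacity_mah * cell_voltage * converter_efficiency
    return usable_mwh / (load_voltage * load_current_ma)
```

With illustrative figures of a 2,000 mAh cell and a 500 mA draw at 5 V, `runtime_hours(2000, 3.7, 5.0, 500)` gives roughly 2.5 hours (2000 × 3.7 × 0.85 / (5 × 500) = 2.516), which shows why reducing the current draw matters as much as adding capacity.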

Going forward

Third Eye is still in a proof-of-concept stage, and improvements are already being identified. Khairul knows that the Xiao ESP32S3 Sense will eventually fall short of fulfilling his ambitions for the project as it expands in the future and, with a larger machine learning model proving necessary, Raspberry Pi is likely to take on more of the workload.

“To be very honest, the ESP32S3 Sense module is not capable enough to respond using a big model. I’m just using it for experimental purposes with a small model, and Raspberry Pi can be a good alternative,” he says. “I believe for better performance, we may use Raspberry Pi for both inferencing and text-to-speech conversions. I plan to completely implement the system inside a Raspberry Pi computer in the future.”

Other potential future tweaks are also stacking up. “I want to include some control buttons so that users can increase and decrease the volume and mute the audio if required,” Khairul reveals. “A depth camera would also give the user important information about the distance of an object.” With the project shared on Hackster, it’s hoped the Raspberry Pi community can also help push it forward. “There is huge potential for a project such as this,” he says.
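The control buttons are still only a plan, but one hypothetical way to wire them up is sketched below in Python: a small class tracks the volume level and mute state, and the optional gpiozero wiring calls ALSA’s `amixer` whenever a button is pressed. The GPIO pins 17, 27, and 22, the 10% step, and the `Master` mixer control name are assumptions; the control name in particular differs between systems.

```python
import subprocess
from typing import Callable, Tuple


class VolumeControl:
    """Track a 0-100 volume level plus a mute flag."""

    def __init__(self, level: int = 50, step: int = 10) -> None:
        self.level = max(0, min(100, level))
        self.step = step
        self.muted = False

    def effective(self) -> int:
        """Level actually sent to the mixer: 0 while muted."""
        return 0 if self.muted else self.level

    def up(self) -> int:
        self.level = min(100, self.level + self.step)
        return self.effective()  # stays 0 while muted; unmuting restores it

    def down(self) -> int:
        self.level = max(0, self.level - self.step)
        return self.effective()

    def toggle_mute(self) -> int:
        self.muted = not self.muted
        return self.effective()


def set_alsa_volume(percent: int, control: str = "Master") -> None:
    """Set the ALSA mixer level; the control name varies between systems."""
    subprocess.run(["amixer", "sset", control, f"{percent}%"], check=True)


def wire_buttons(control: VolumeControl,
                 apply: Callable[[int], None] = set_alsa_volume,
                 pins: Tuple[int, int, int] = (17, 27, 22)):
    """Attach up/down/mute buttons on hypothetical GPIO pins (needs gpiozero)."""
    from gpiozero import Button  # imported lazily: only needed on a Pi

    up_btn, down_btn, mute_btn = (Button(pin) for pin in pins)
    up_btn.when_pressed = lambda: apply(control.up())
    down_btn.when_pressed = lambda: apply(control.down())
    mute_btn.when_pressed = lambda: apply(control.toggle_mute())
    return up_btn, down_btn, mute_btn  # keep references so buttons stay active
```

Keeping the volume logic separate from the GPIO code means it can be tested without any hardware attached.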

The MagPi #149 out NOW!

You can grab the new issue right now from Tesco, Sainsbury’s, Asda, WHSmith, and other newsagents, including the Raspberry Pi Store in Cambridge. It’s also available at our online store, which ships around the world. You can also get it via our app on Android or iOS.

You can also subscribe to the print version of The MagPi. Not only do we deliver it globally, but people who sign up to the six- or twelve-month print subscription get a FREE Raspberry Pi Pico W!
