Exporting Google Photos with Takeout

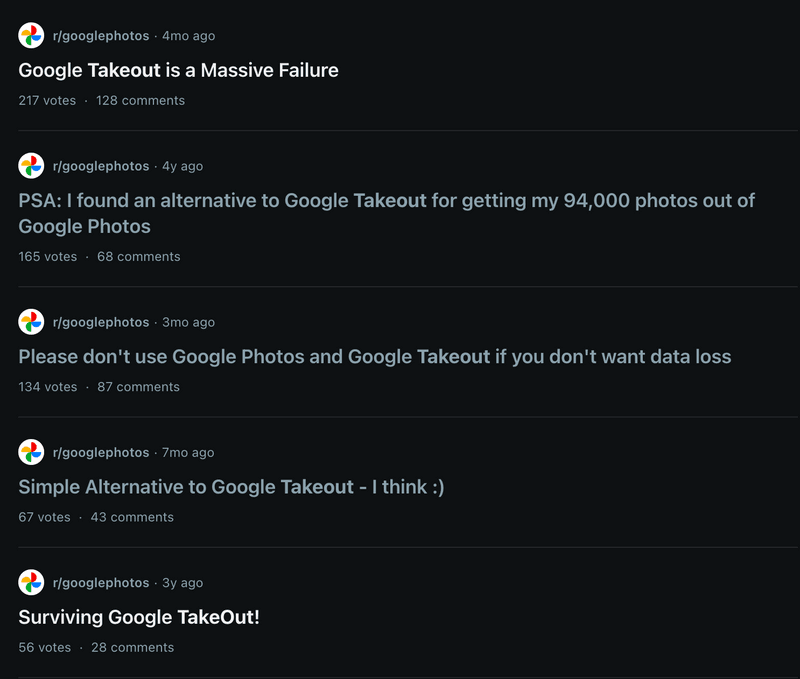

Google Photos is an incredibly successful product, with over 100M paying customers storing a lifetime of memories. However, there are many circumstances in which you may want to take your memories out of Google Photos. Unfortunately, the tool that Google offers for this, Google Takeout, is not the most reliable (we ranted about this some time back), as one can see from the many Reddit posts complaining about it.

This document is therefore intended as a guidebook to help you export your memories from Google Photos for different use cases, such as:

- Migrating your memories to Apple iCloud

- Keeping a backup copy of your memories

- Exporting all your memories to a different service

Let’s get started.

What is Google Takeout?

Google Takeout is Google’s data portability project, which allows users of most of its services (including Google Photos, Chrome, Maps, Drive, etc.) to export their data. We had written about its history here in case you are interested. There are two ways that Google Takeout works:

- Download the data in multiple zips of max 50GB and then use it how you want

- Transfer directly to a selected set of services - Apple iCloud, Flickr, SmugMug, and OneDrive

For most of the use cases, we will have to go through the zip route.

Migrating from Google Photos to Apple iCloud

This is a fairly easy and straightforward process where you don’t have to do much except set it up and wait for your photos to be transferred to the Apple ecosystem. Let’s go through the exact steps.

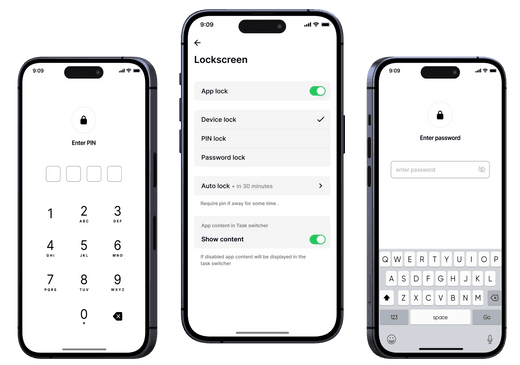

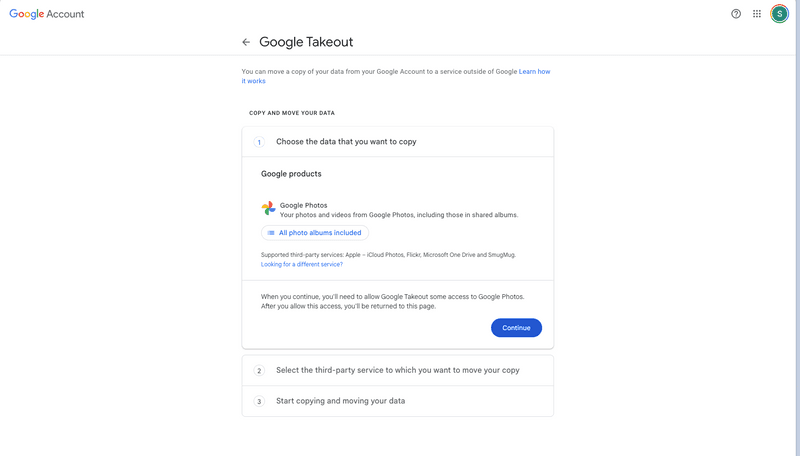

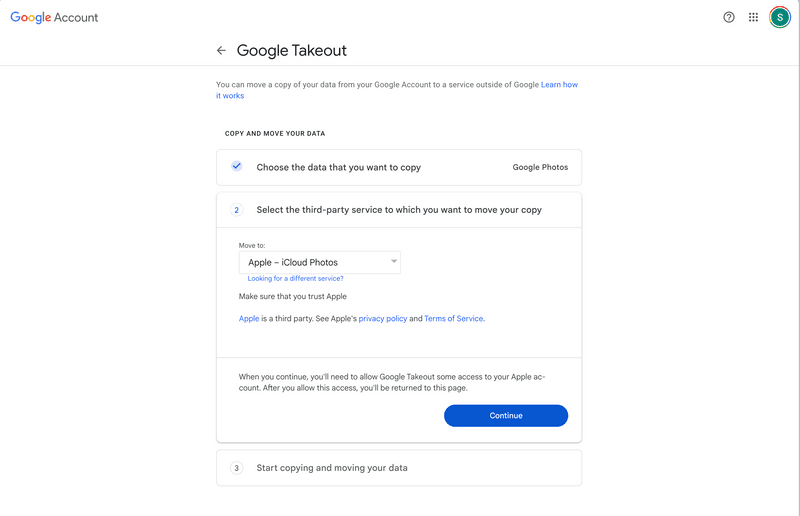

- Open https://takeout.google.com/takeout/transfer/custom/photos. You will see a screen as shown below

- Next, make sure you’re signed into the Google account that you use for Google Photos. You can verify and, if needed, switch accounts by clicking on the icon at the top right of that screen

- Before clicking on Continue, also verify the albums you want to move to Apple iCloud. By default “All photo albums included” is selected. You can click on that and select particular albums as well. Photos that are not in any album on Google Photos are usually albumised by year, like “Photos from 2023”, etc.

- Click on Continue. Before you go on to select which service to transfer your memories to, Google will ask you to verify your account.

- This step is about setting up the service you want to transfer your memories to. The options available are iCloud Photos, Flickr, OneDrive and SmugMug. The exact same steps should work for all of these services, but for this article, we will restrict ourselves to Apple iCloud

- Select Apple iCloud in the “Move to:” dropdown, and then click on Continue

- This will trigger a sign-in flow for Apple iCloud. Complete it, after which you will be returned to the Google Takeout page.

- Before moving forwards, do make sure you have enough storage capacity on your iCloud account, so that you don’t run into any issues while transferring

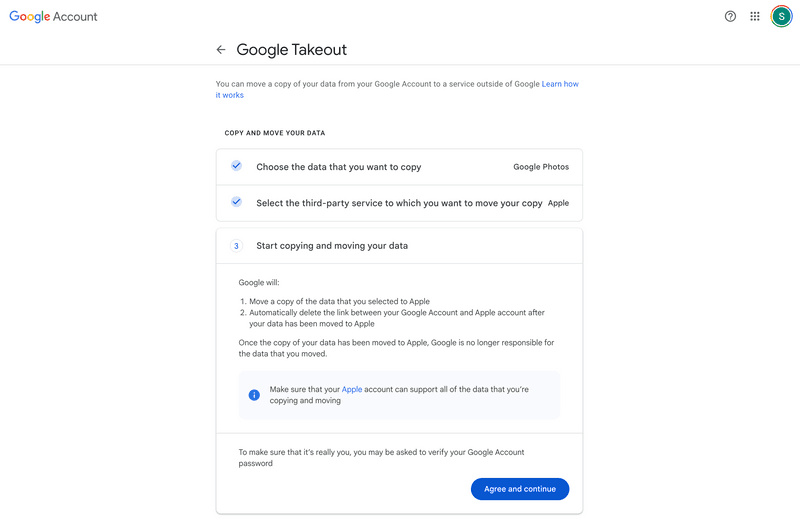

- You can now click on “Agree and continue”. This will start the export process, and you will receive e-mails from both Google and Apple notifying you of this transfer

- The export process can take a few hours or even more than a day, depending on the size of your collection. However, you don’t have to do anything else. Both Google and Apple will e-mail you once the process is completed, after which you can see the photos in your Apple iCloud

- Note that your memories will continue to exist on Google Photos, and new photos taken from your devices will continue to be uploaded to Google Photos. To change this, you will have to actively delete your data and/or stop uploads to Google Photos.

- Some issues we found while doing this on our personal accounts

- For Live Photos, Google transfers only the image file to Apple, and not the video file, making Apple treat it like a regular photo instead of a Live Photo

- If some photos are in both your Apple Photos and Google Photos accounts, the transfer will create duplicates. You can use “Duplicates” in the Utilities section of the iOS app to detect and merge them. However, it does a less than perfect job, so a lot of duplicates would have to be deleted manually.

- A bunch of files that were not supported by iCloud Photos (a few raw images, AVI, MPEG2 videos, etc.) were moved to iCloud Drive. So your data isn’t lost, but some part of the Google Photos library wouldn’t be available on iCloud Photos

Keeping a backup copy of your memories from Google Photos

There are many ways to do this. The path we are focussing on here is for those who use Google Photos as their primary cloud storage, and want to keep a copy of all their photos somewhere else like a hard disk or another cloud storage.

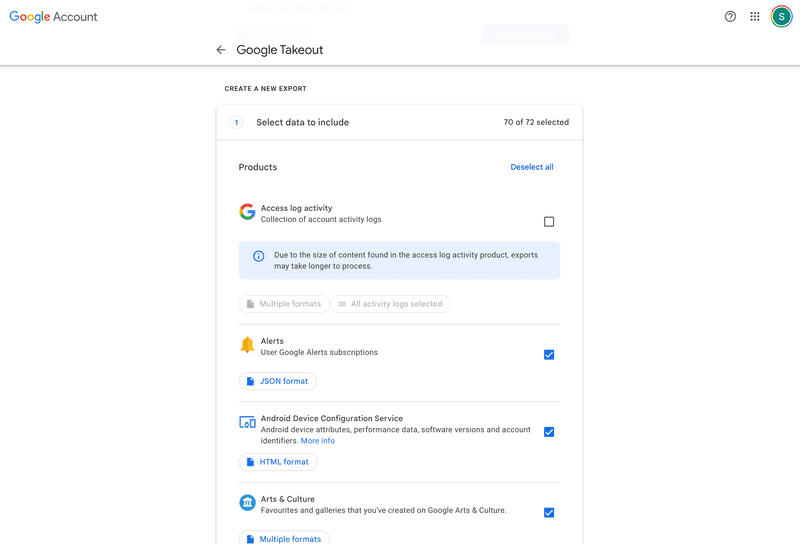

- Go to https://takeout.google.com/. This is a place where you can export your data for most of Google’s services

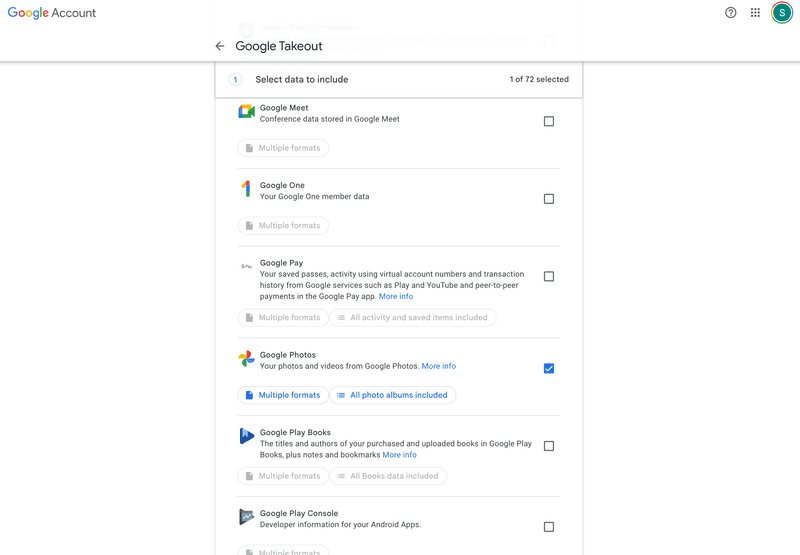

- By default, a large number of services are selected for export. Click on “Deselect all”, and then scroll down to Google Photos, and click on the selection box next to Google Photos. On the top right it should show 1 of xx selected.

- Review which of your memories are selected for export. For backups, you would want to select all the memories on Google Photos. Click on “Multiple formats” to make sure all formats are selected. Similarly, make sure that “All photo albums included” is selected. You can also choose specific formats or albums to export if you prefer

- Now you can scroll to the bottom of the page, and click on “Next step”. This next step is about various options for your export

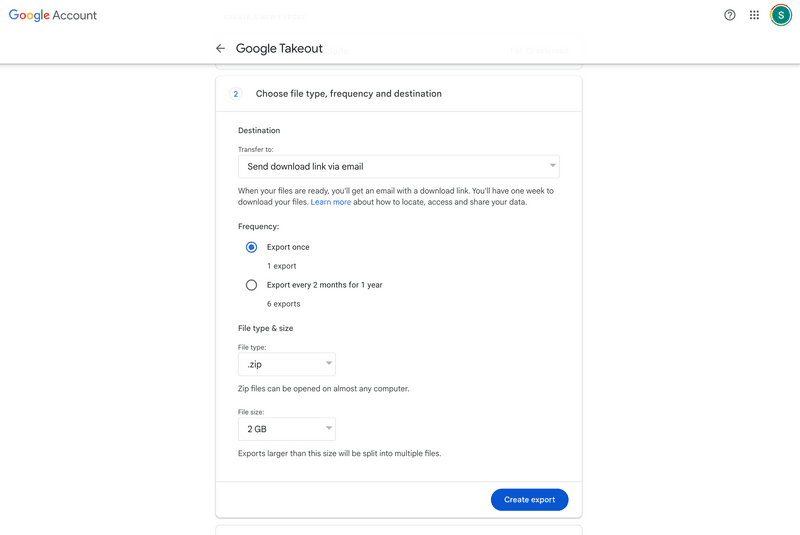

- There are 3 sections here, and in each you have to choose the options that work for you

- Destination - Where your exported files are going to go

- If your backup location is one of OneDrive, Dropbox or Box, choose that. Make sure you have enough storage space on these services

- If you want to back up to a hard disk or another cloud storage provider, you can choose either Google Drive or a download link via email. If you’re choosing Google Drive, make sure you have enough storage space on Drive

- Frequency

- Given it’s a backup copy and you want to continue using Google Photos, you should choose “Export every 2 months for 1 year”. Two important things to note here:

- Google Takeout doesn’t support incremental backups, so the export after 2 months will again cover your entire library. To save storage space, you will have to delete the old backup, wherever you’re storing it, once the new export is available

- The export period is only 1 year, so you will have to do this again every year to ensure your backup copy has all the latest memories stored in Google Photos

- If you have another use case, like moving to a different service or ecosystem and no longer using Google Photos, you can do an “Export once” backup.

- File type and size

- Google gives two options for file types - .zip and .tgz. I personally prefer .zip as decompression is supported on most devices. However, .tgz is also fine as long as you know how to decompress them

- For size, Google gives options ranging from 2GB to 50GB. Note that if your library size is large, you will get multiple zip files to cover your entire library. For large libraries, we would recommend keeping the size to 50GB. However, if you have a bad network connection, downloading these 50GB files might take multiple attempts.

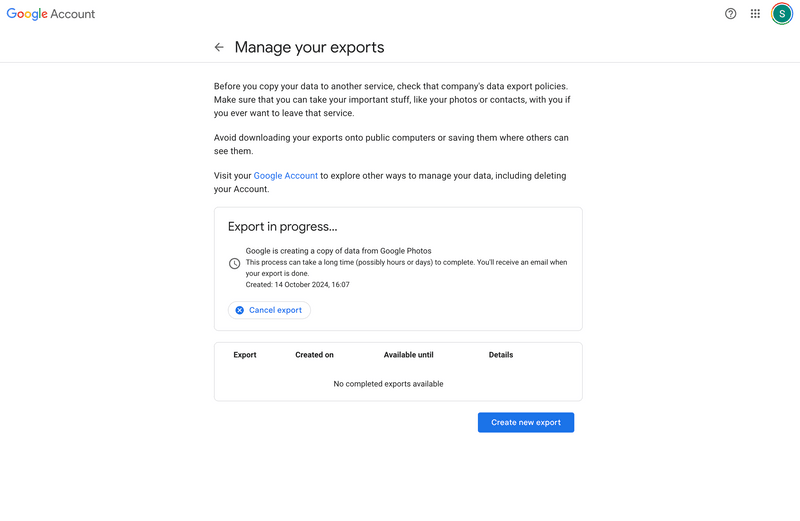

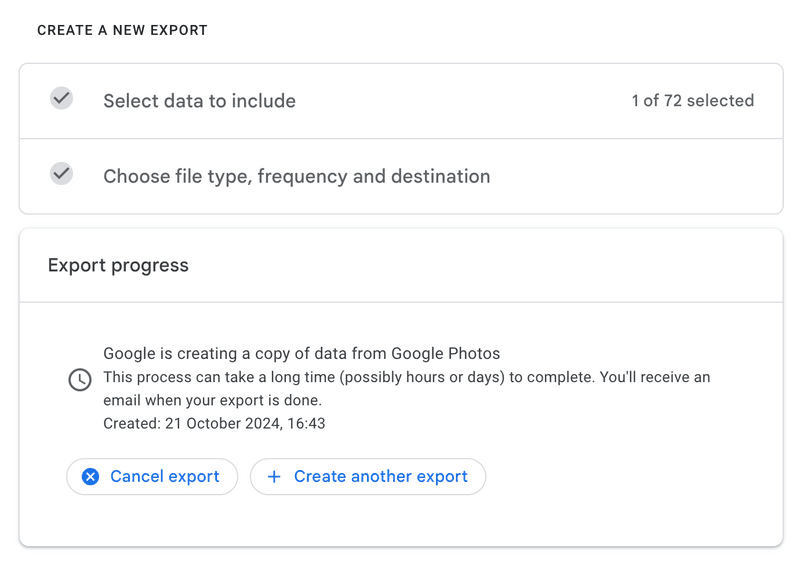

- Once you have made your selections, you can click on “Create export”. The page will now show an “Export progress” section

- Google Takeout will send you an email once the export is completed. You can download the zips from the link provided in the email (if you had selected “Download link via email” as destination) or go to the selected destination (Google Drive, OneDrive, etc.) to download the zip files. Note that you only need to download the zips if you want to have the backup in a different location than what you chose as destination

- Before downloading this, make sure your device has enough free storage. These zip files contain your entire photo and video library and therefore might require a large amount of device storage

- Once you have downloaded the zips, you can move/upload the zips wherever you want to act as a backup for Google Photos. This could be a hard disk or another cloud provider

- If you had selected “Export every 2 months for 1 year” as frequency, you will get an email from Google Takeout every 2 months, and you will have to repeat the download and upload process every time. Note that you can delete old zips once the latest backups are available, as every 2 months you will get a full backup and not an incremental one. Otherwise, you will consume a lot more storage space than required.

- Please note that the above process keeps the zip files as backup. If you want to unzip the files so that the actual photos are available, see the next section
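
A few of the checks mentioned in the steps above can be scripted. The following is a minimal Python sketch, not something Google Takeout provides: it estimates how many archives to expect for a given library size, checks free disk space before downloading, and verifies that a downloaded zip is not corrupted or truncated. The function names and the example sizes are our own assumptions for illustration, and sizes are treated as binary gigabytes.

```python
import math
import shutil
import zipfile


def archives_needed(library_gb, archive_gb=50):
    """Estimate how many archives an export is split into.

    archive_gb is the size chosen under "File type and size";
    50 is the largest option mentioned in this guide.
    """
    return math.ceil(library_gb / archive_gb)


def has_enough_space(path, required_gb):
    """Return True if the disk holding `path` has at least required_gb free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1024**3


def zip_is_intact(zip_path):
    """Return True if every file in the zip passes its CRC check.

    A truncated or otherwise unreadable download is reported as not intact.
    """
    try:
        with zipfile.ZipFile(zip_path) as archive:
            # testzip() returns the name of the first bad file, or None.
            return archive.testzip() is None
    except zipfile.BadZipFile:
        return False
```

For example, `archives_needed(120)` gives 3 for a 120GB library at the 50GB setting. Running `zip_is_intact` on each file after a flaky download is a cheap way to find out which archives need to be fetched again.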

Moving all your memories to another service

The last section mostly covered keeping an extra backup while you’re still using Google Photos. That’s why we kept the zip files as backup, as you don’t need to unzip and keep the uncompressed folders for backup purposes.

However, there are definitely use cases where you would want to uncompress the zips. For example, if you want to move your entire library to your hard disk or another cloud, you would want to uncompress the zips, ensure your metadata is intact, and then upload it.

So how do we do this?

- Make sure you have enough storage space (at least 2 times your Google Photos storage) on your device

- Follow the steps of the previous section to download the zip files from Google Takeout

- Uncompress the zip files one by one

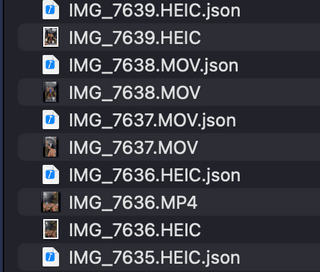

- When you open the uncompressed folders you will notice the following

- The base folder name is “Takeout”, and within that there would be another folder called “Google Photos”

- Inside “Google Photos”, you would have folders corresponding to albums on Google Photos. Photos which are not part of any albums on Google Photos are automatically added to year wise albums like “Photos from 2022”, etc.

- Inside each album, you will see the files associated with your photos and videos

- Your photo or video media files. These would be jpeg, heic, mov, or mp4, etc.

- A JSON file with the same name as your photo/video containing all the metadata of your memories like location, camera info, tags, etc.

- There are a few issues with the export files, however

- If your library is distributed across multiple zips, an album can be split across multiple zips (and uncompressed folders), which then need to be combined

- The media file and the corresponding metadata JSON file can also be in different zips

- Because of this, when you import these uncompressed folders to another service directly one by one, it might lead to loss of metadata associated with your photos. It might also create incorrect folder/album structure

- Thankfully, there are ways to fix these issues

- Metadatafixer

- All you need to do is add all your zip files to this tool, and it will do its work: combine all the photos/videos and their corresponding metadata so that they are readable by any other service

- Unfortunately, this is a paid tool that costs $24

- GooglePhotosTakeoutHelper

- If you have some technical know-how or don’t want to pay the $24 above, then this is a library that will come to your rescue

- It works pretty much the same way as Metadatafixer, except you need to uncompress the zips, and move them to a single folder. You can find all the instructions here

- Once these fixes are done, you can import the output to any other service or your local storage without the loss of any metadata. This will also work if you want to move your photos from one Google account to another
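
To make the "albums and metadata split across zips" problem concrete, here is a minimal Python sketch of the manual approach. It is not how Metadatafixer or GooglePhotosTakeoutHelper work internally. It extracts every archive into one destination folder, so that parts of the same album land in the same directory, and then lists media files that still have no JSON sidecar next to them. It assumes the sidecar is named `<media file name>.json`; real exports may use other naming variations, and the function names and extension list here are hypothetical.

```python
import zipfile
from pathlib import Path

# Assumed set of media extensions; extend it to match your library.
MEDIA_EXTENSIONS = {".jpg", ".jpeg", ".heic", ".png", ".mov", ".mp4"}


def merge_takeout_zips(zip_paths, dest):
    """Extract all archives into one folder so split albums are recombined."""
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    for zip_path in zip_paths:
        with zipfile.ZipFile(zip_path) as archive:
            # Each archive repeats the "Takeout/Google Photos/<album>" layout,
            # so extracting into the same root merges the album folders.
            archive.extractall(dest)
    return dest


def media_without_sidecar(root):
    """Return media files with no '<file name>.json' next to them."""
    missing = []
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix.lower() in MEDIA_EXTENSIONS:
            sidecar = path.with_name(path.name + ".json")
            if not sidecar.exists():
                missing.append(path)
    return missing
```

An empty result from `media_without_sidecar` after merging suggests that every photo and video found its metadata file under this naming assumption. A non-empty list is a hint to check those files before importing them elsewhere.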

Moving your memories from Google Photos to Ente

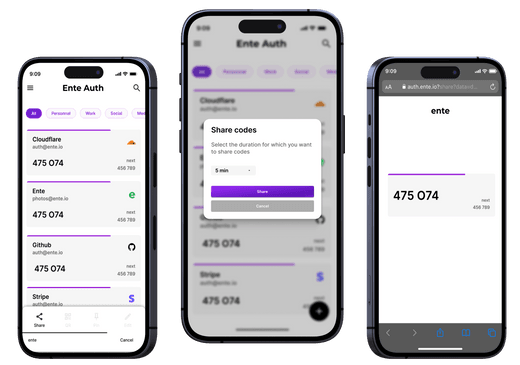

If you want to move away from Google Photos to another service, Ente Photos is the way to go. It offers the same set of features as Google Photos in a privacy friendly product which makes it impossible for anyone to see or access your photos. Migration to Ente is quite easy as well.

- Download the zip files from Google Takeout as explained above. Uncompress all the zip files into a single folder

- Create an account at web.ente.io, and download the desktop app from https://ente.io/download

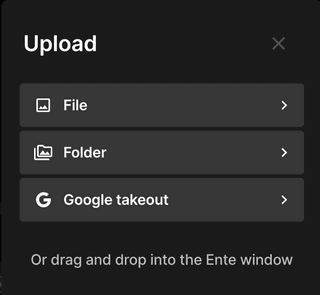

- Open the desktop app, and click on Upload on the top right

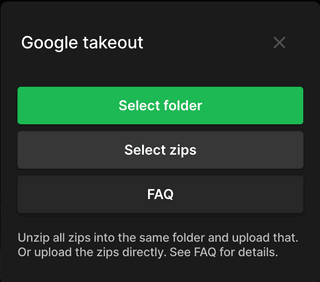

- Click on “Google Takeout”, then “Select Folder”, and then select the folder you have just created in the earlier step

- Wait for Ente to upload all the files

- That’s it

Bonus: Permanently delete memories in Google Photos after exporting

If you’re exporting Google Photos for a backup, and you intend to continue using Google Photos, then you don’t need to delete your memories.

However, there are many use cases for which you should delete your memories from Google Photos after exporting, so that you don’t keep getting charged for them:

- Moving to a different service like Apple Photos or Ente

- Using your hard disk for storing memories

- Partially clearing Google Photos so you can continue using Gmail

- Deleting a few unimportant memories so you can reduce your cloud costs

Deletion should be fairly straightforward

- Open the Google Photos app or open the web app at photos.google.com

- Select all the photos and albums (which includes all the photos inside) you want to delete

- Click on the Delete icon at the bottom

- This will move your photos to “Bin”, where they will be held for 60 days before they are permanently deleted

- If you want to permanently delete these memories right away, go to the “Collections” tab in the bottom bar

- You will see “Bin” on the top right. Click on that. Review the memories there and ensure that you want to delete them permanently.

- Clicking on the ellipsis on the top right would show an option to “Empty Bin”. Clicking on this would delete all your memories from the bin.

- Please ensure you have checked everything before tapping on “Empty Bin” as this is a permanent operation without any recovery methods.

You can refer to this detailed guide for deletion as well.