Ente Photos v0.9.98

We made one last stop before hitting v1.0, to ship some major improvements.

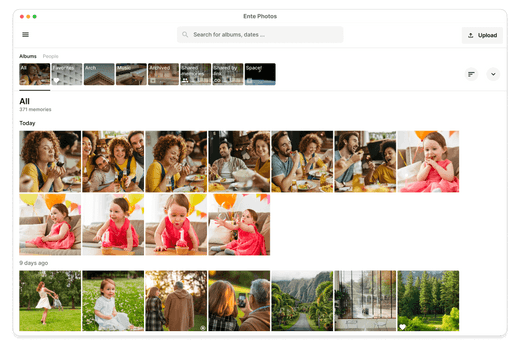

Light mode on Desktop

Desktop had been brooding in dark mode since forever, so we took some time out to give it a fresh coat of paint. While repainting, we also improved the way we present information, making the app simpler to navigate.

Since Ente is end-to-end encrypted, we have no access to your photos, so thumbnails have to be generated on your devices. Doing this reliably is a fairly complex challenge, and this release introduces a robust thumbnail generator.

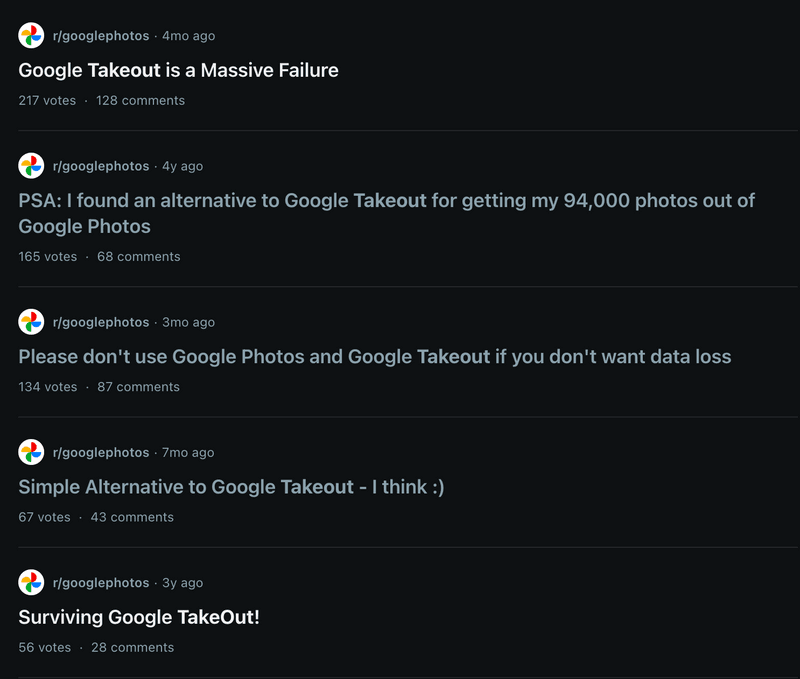

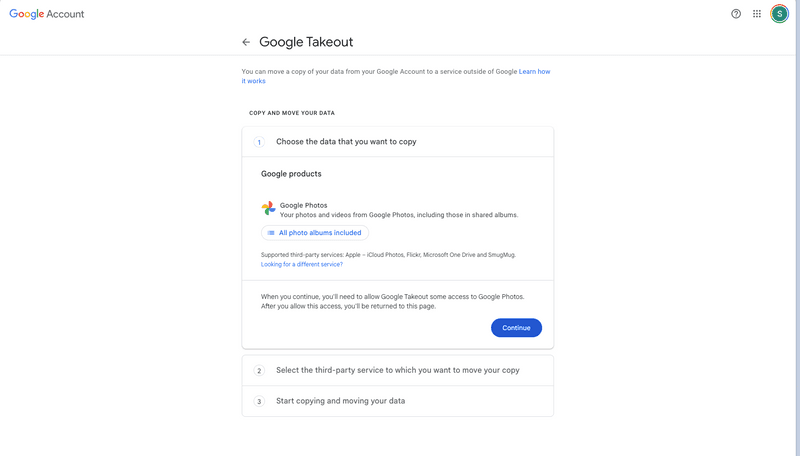

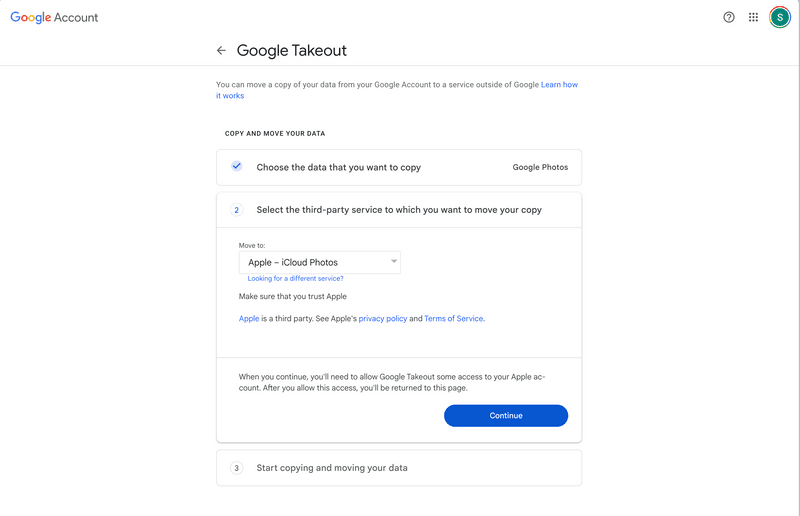

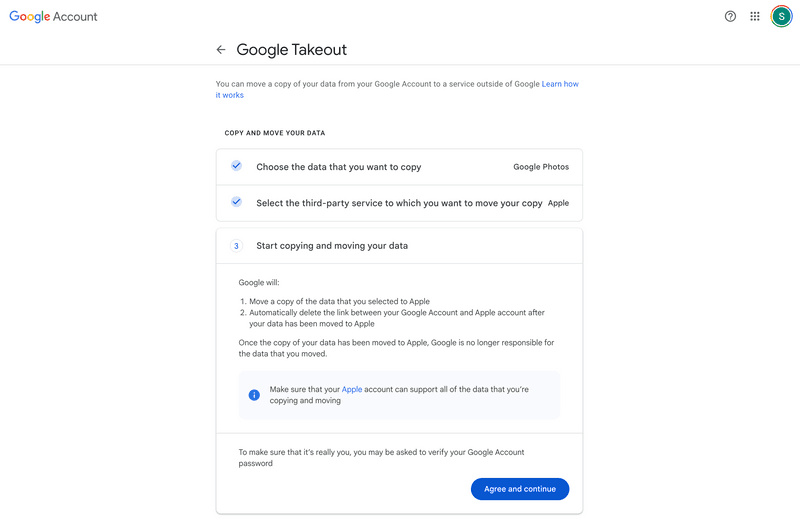

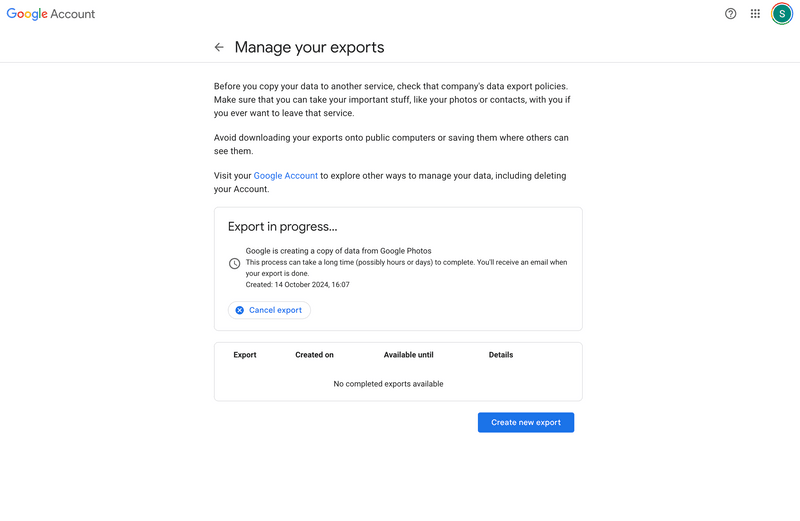

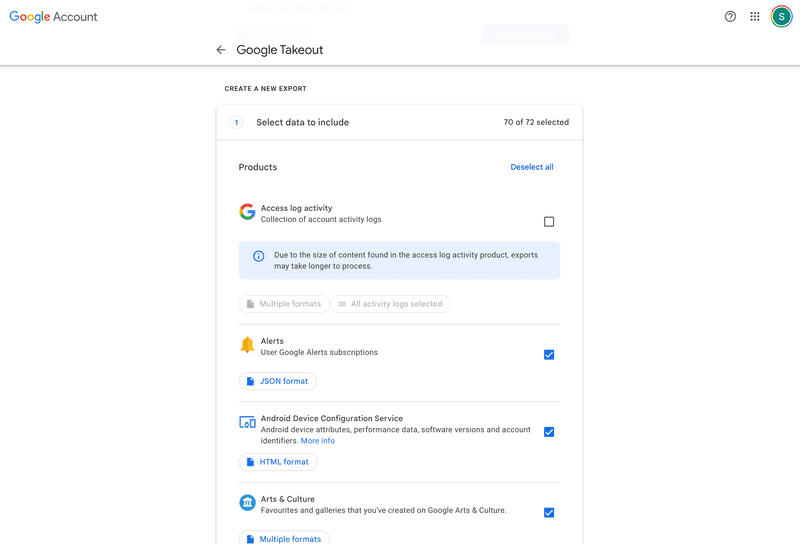

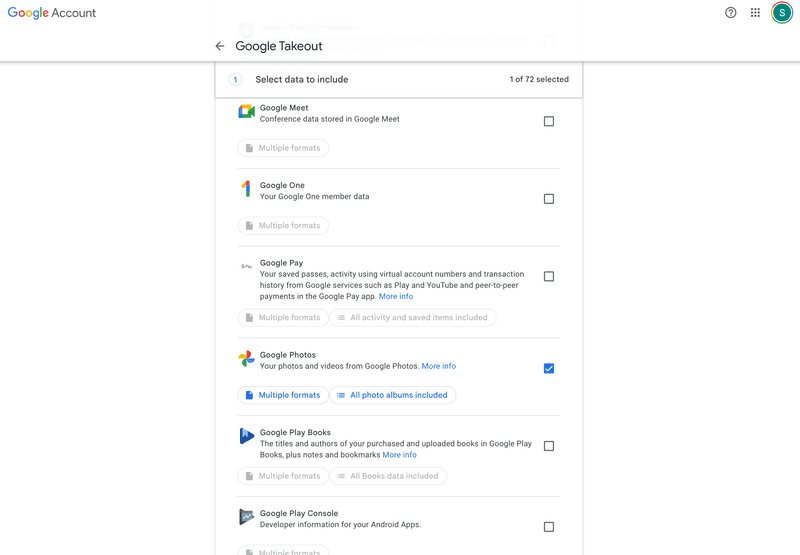

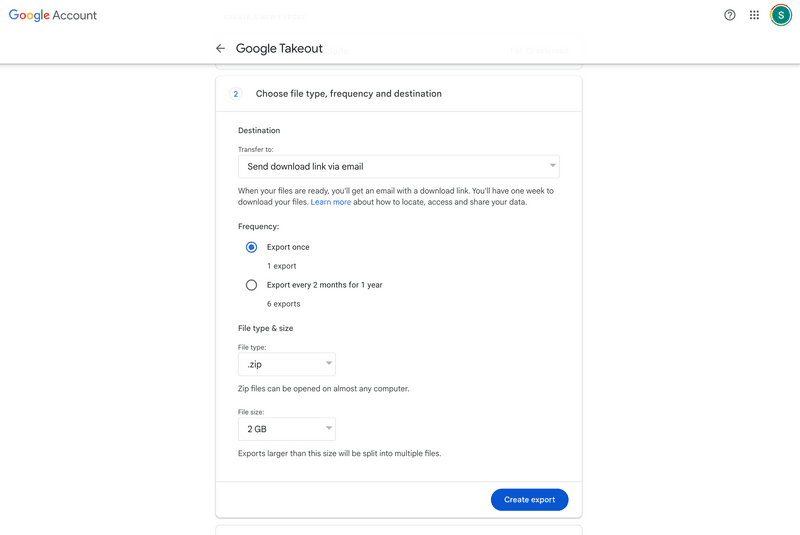

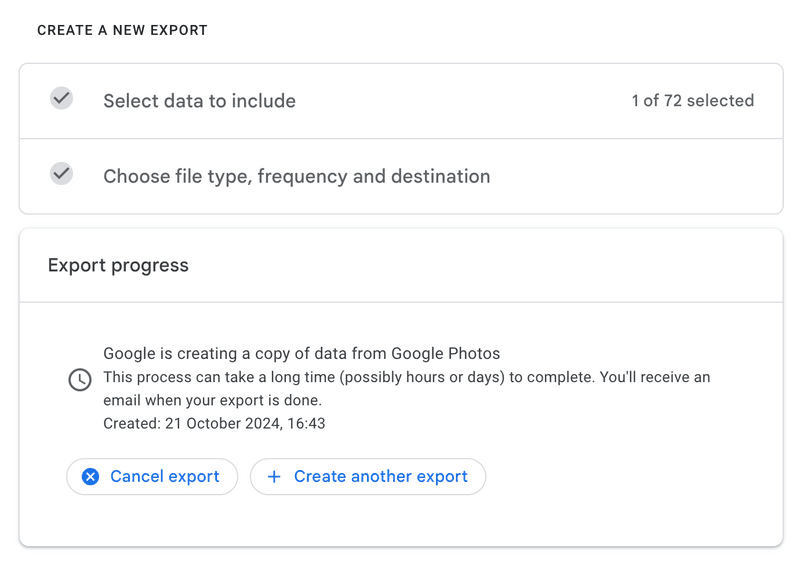

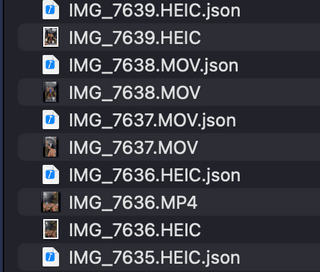

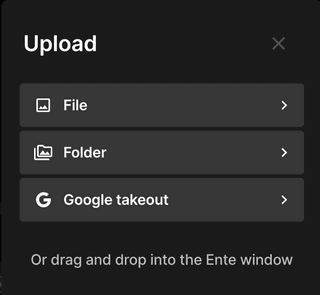

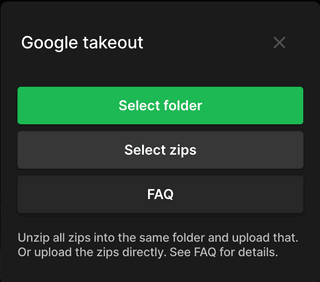

Importing from Google Photos is another common use case our desktop app serves. Stitching back together the data that Google breaks apart is a cat-and-mouse game we've gotten better at. This release includes a number of improvements that make for a hassle-free migration from Google Photos.

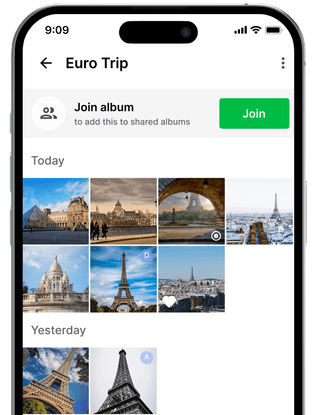

Album deep links

Links to albums (like this one) will now open on our mobile apps instead of your browser.

You will have the option to join these albums. Joining brings the photos into your timeline and keeps you updated on any changes to the album. And where the album owner has enabled photo uploads on the link, you will be able to add your photos from Ente with ease.

Search shared photos

Ente's image search is incredibly powerful.

What it lacked so far was the ability to search for photos that were shared with you by someone else. We've fixed that now.

Machine learning indexes for shared photos will now be shared with you, end-to-end encrypted. So you can search for faces, descriptions, etc. within shared photos as well.

Since machine learning runs on device, we've often heard customers complain about how their family members on low-end devices have libraries that are too large to be indexed quickly. We've solved that problem.

Our desktop app can now index shared libraries on behalf of family members who shared their libraries with you. So you can use your beefy machine to index shared libraries, and let your family experience the best of Ente's search features.

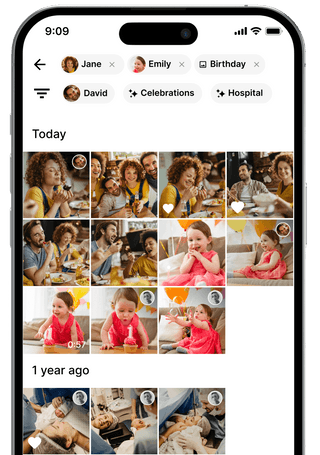

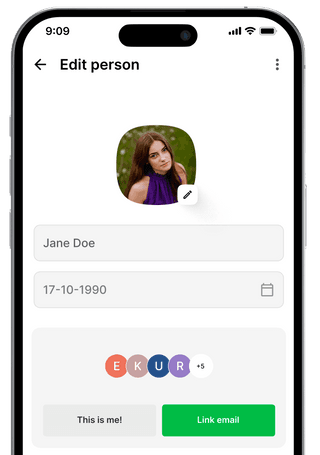

Faces to contacts

One of the major product areas we're working on is sharing. Internally, we are yet to find a platform that provides a safe space to share and collaborate on our memories, and we wish for Ente to fill that gap in our lives.

Unlike other "social" apps, Ente does not have access to your phone book. Building a contacts app is an idea our community has been pitching to us for a while. But until we embark on that journey, we have to simplify sharing with what we have - email addresses. But email addresses lack warmth.

So to make sharing more human, we decided to hide email addresses behind names and avatars. The only question was - do we set our own names and avatars, or do our contacts set them for us?

We felt there was beauty in following traditional phone books - letting us assign the names and photos that remind us most of our contacts. There's an argument to be made in the other direction as well. But for the path Ente is taking, where we want to be social, not public, we felt it best to stick to how we remember each other in the real world.

As a side effect, this simplifies life for those who have a hard time reducing themselves to a single avatar.

Finally, since we frequently share our photos with those who appear in them, connecting their faces to our contacts made sense.

We believe this is a baby step towards a larger exercise of providing a simple, wonderful sharing experience within closed circles.

Video streaming (beta)

End-to-end encrypted video streaming is a hard problem in software engineering that we've had to venture into, and we're happy with the progress we've made so far. Video streaming is now available in beta on all our mobile apps.

Our apps will generate compressed video streams that can be played on any platform - with support for random seeks. Please check out the feature from Settings > General > Advanced > Video streaming, and let us know if you have any feedback!

We will publish a document about the underlying technical details soon. Meanwhile, you can find more information about the feature here.

That's all for now, see you at v1.0.