A Comprehensive Guide to NVIDIA’s AI Stack for Deep Learning Projects

3 Trillion Dollars – Yes, You Read That Right.

That staggering number isn’t just a milestone; it’s NVIDIA’s market valuation at the time of writing. From pioneering the GPU to spearheading the AI revolution, NVIDIA’s rise to a three-trillion-dollar company reflects both exceptional growth and a relentless drive to push the boundaries of what technology can achieve. In deep learning and artificial intelligence, NVIDIA has solidified its reputation as the leader in hardware and software solutions. From powering data centers to enabling edge AI in robotics, NVIDIA has built an ecosystem that supports every stage of AI development.

This blog delves into NVIDIA’s AI stack, a comprehensive suite of tools designed to accelerate innovation. Whether you’re training complex neural networks or deploying scalable AI solutions, NVIDIA provides the resources you need to get started.

Why NVIDIA Became a $3-Trillion Company

NVIDIA didn’t just ride the wave of AI; it built the wave. By focusing on both hardware and software, NVIDIA created an integrated platform that’s hard to match. Its dominance is driven by innovations like the CUDA programming platform, the TensorRT inference engine, and cutting-edge GPUs such as the Hopper-based H100 and the newer Blackwell generation. These technologies cater to researchers, developers, and businesses, making NVIDIA the backbone of the AI industry.

Key statistics highlight its impact:

- By most industry estimates, the large majority of AI training workloads run on NVIDIA GPUs.

- NVIDIA GPUs are a top choice for training large language models (LLMs) like GPT-4 and Llama.

This commitment to innovation has made NVIDIA indispensable, contributing to its market dominance and growing valuation.

Understanding NVIDIA’s AI Ecosystem

NVIDIA’s AI stack isn’t just about hardware; it’s a symphony of hardware and software working together seamlessly.

Hardware:

- High-performance GPUs for parallel processing.

- AI supercomputers like DGX systems for advanced research.

- Edge devices like Jetson for IoT and robotics.

Software:

- CUDA for accelerated computing.

- TensorRT for deployment.

- RAPIDS for data science workflows.

By providing a comprehensive suite of accelerated libraries and technologies, NVIDIA empowers developers to innovate efficiently while getting the most out of its hardware.

Must Read: Demystifying GPU Architecture – Part 1

NVIDIA’s Hardware for Deep Learning

NVIDIA’s hardware forms the backbone of deep learning workflows, catering to every need from high-performance training to edge AI deployment. With unmatched speed, scalability, and efficiency, NVIDIA’s hardware solutions power groundbreaking innovations across industries.

1. GPUs: The AI Powerhouse

Graphics Processing Units (GPUs) are the heart of NVIDIA’s dominance in deep learning. Unlike CPUs, which are optimized for sequential tasks, GPUs excel at parallel processing, making them ideal for the massive computations required in AI.

- Popular GPUs for Deep Learning:

- RTX 40-Series (Ada Lovelace): Geared toward individuals and small teams, these GPUs offer excellent performance for training small- to mid-scale models.

- A100 (Ampere): Widely used in enterprises and research labs, the A100 is designed for large-scale AI tasks like training deep learning models or running complex simulations.

- H100 (Hopper): NVIDIA’s flagship data-center GPU of the Hopper generation (since succeeded by Blackwell), the H100 delivers major performance gains with features like the Transformer Engine for faster language model training and the NVLink Switch System for multi-GPU scalability.

Why GPUs Matter in Deep Learning:

- Parallelism: GPUs handle thousands of computations simultaneously, making them ideal for matrix operations in neural networks.

- Efficiency: They reduce training times significantly, enabling faster iteration and experimentation.

- Scalability: Compatible with multi-GPU systems for distributed training.
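To make the parallelism point concrete, here is a minimal CPU-only sketch in plain Python (no GPU required). It shows that every element of a matrix product C = A·B is an independent dot product: computing the elements in a shuffled order gives exactly the same result, which is why a GPU can hand each element (or tile of elements) to a different thread. The helper names are illustrative and are not part of any NVIDIA API.

```python
import random

def matmul_element(a, b, i, j):
    """Dot product of row i of A with column j of B: one independent unit of work."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_any_order(a, b, seed=0):
    """Compute C = A @ B, visiting output elements in a random order.

    No output element depends on any other, so the visiting order cannot
    change the result; a GPU exploits this by computing many elements at once.
    """
    m, n = len(a), len(b[0])
    c = [[0] * n for _ in range(m)]
    tasks = [(i, j) for i in range(m) for j in range(n)]
    random.Random(seed).shuffle(tasks)
    for i, j in tasks:
        c[i][j] = matmul_element(a, b, i, j)
    return c

A = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
B = [[7, 8], [9, 10], [11, 12]]   # 3 x 2
print(matmul_any_order(A, B))     # [[58, 64], [139, 154]]
```

For an (M×K)·(K×N) product there are M·N such independent dot products; a single 4096×4096 output already contains about 16.8 million of them, which is the kind of workload thousands of GPU cores can chew through in parallel.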

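Example: NVIDIA reports that training large transformer models on the H100 is several times faster than on the previous-generation A100, with the exact speedup depending on model size, precision, and cluster scale. That shorter turnaround enables faster breakthroughs in AI research.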

NVIDIA’s GeForce RTX 40 Series GPU: Source

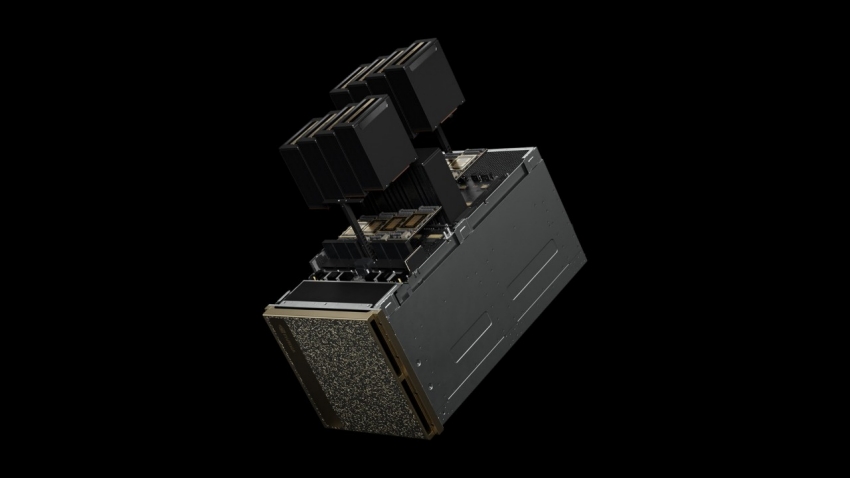

2. DGX Systems: Purpose-Built AI Supercomputers

NVIDIA’s DGX systems are end-to-end AI supercomputers designed for enterprises and researchers working on advanced AI problems. Each DGX system integrates multiple high-performance GPUs, optimized storage, and networking for seamless deep learning workflows.

- Key Models:

- DGX A100: Ideal for large-scale model training, boasting 5 petaflops of performance.

- DGX H100: Tailored for next-generation workloads like LLMs, with enhanced scalability and speed.

Use Cases:

- Training massive language models like GPT or BERT.

- Conducting climate simulations for research.

- Building complex recommendation systems for e-commerce platforms.

Why Choose DGX Systems?

- Scalability: Each system combines eight GPUs linked by NVLink, and multiple systems can be clustered for the largest datasets and models.

- Ease of Use: Ships with an optimized AI software stack, including NVIDIA AI Enterprise.

- Flexibility: Designed for on-premises deployment or cloud integration.

Example: NVIDIA delivered the first DGX-1 to OpenAI in 2016, and OpenAI’s later models, including GPT-4, were trained on Microsoft Azure supercomputers built with NVIDIA GPUs.

NVIDIA DGX Platform: Source

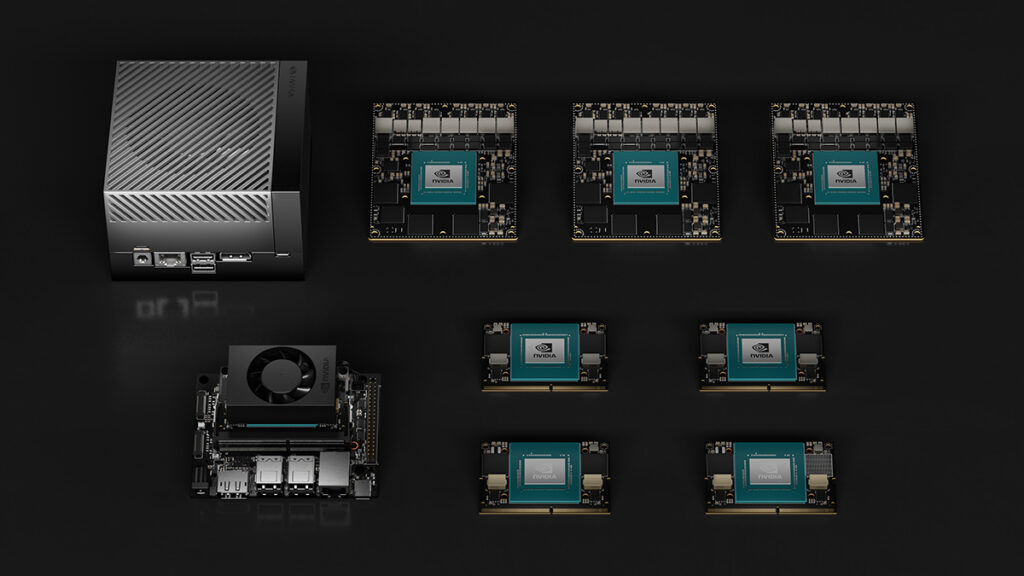

3. Jetson Modules: AI at the Edge

NVIDIA Jetson modules bring AI to edge devices, enabling real-time applications in robotics, IoT, and embedded systems. These compact modules pack powerful AI capabilities into a small form factor, making them ideal for scenarios requiring low latency.

- Key Modules:

- Jetson Nano: Entry-level module for hobbyists and developers.

- Jetson Xavier NX: Compact yet powerful, perfect for industrial robots and drones.

- Jetson AGX Orin: NVIDIA’s most advanced edge AI module, delivering up to 275 TOPS (trillion operations per second).

- Jetson Orin Nano Super Developer Kit: Announced in December 2024 at $249, it delivers up to 67 TOPS and is billed by NVIDIA as the most affordable generative AI supercomputer.

Benefits of Jetson Modules:

- Low Power Consumption: Ideal for battery-operated devices like drones.

- High Performance: Handles real-time AI tasks such as object detection and SLAM (Simultaneous Localization and Mapping).

- Scalability: Supports applications ranging from prototyping to large-scale deployment.

Example: A delivery robot equipped with a Jetson Orin module can process environmental data in real time, avoiding obstacles and navigating complex routes efficiently.

Key Benefits of NVIDIA Hardware

- Speed: GPUs and DGX systems reduce training time for AI models, enabling faster experimentation and deployment.

- Scalability: From single GPUs to multi-node clusters, NVIDIA hardware grows with your project needs.

- Energy Efficiency: Jetson modules and optimized GPUs deliver high performance without excessive power consumption.

- Versatility: Suited for diverse applications, from cloud-based training to edge AI.

NVIDIA’s Jetson Module Family: Source

Must Read: Demystifying GPU Architecture – Part 2

NVIDIA’s Software Stack: Powering the AI Revolution

NVIDIA’s software stack is the backbone of its AI ecosystem, providing developers with the tools to harness the full power of NVIDIA hardware. With libraries, frameworks, and developer tools optimized for deep learning and data science, the software stack simplifies workflows, accelerates development, and ensures cutting-edge performance across diverse AI applications.

1. CUDA Toolkit: The Heart of GPU Computing

CUDA (Compute Unified Device Architecture) is NVIDIA’s parallel computing platform that unlocks the full potential of GPUs. It’s the foundation of NVIDIA’s AI ecosystem and is used to accelerate everything from neural network training to scientific simulations.

Key Features of CUDA Toolkit:

- Enables GPU acceleration for deep learning frameworks like TensorFlow and PyTorch.

- Exposes a parallel programming model in which thousands of GPU threads execute concurrently.

- Includes tools for debugging, optimization, and performance monitoring.

Example Use Case:

Training a convolutional neural network (CNN) on large image datasets is computationally intensive. CUDA reduces training time by distributing computations across multiple GPU cores, making it practical to train models that would otherwise take days or weeks.
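The core of the CUDA programming model is that you write one kernel function and launch it across a grid of thread blocks; each thread derives its own global index from `blockIdx`, `blockDim`, and `threadIdx` and handles one element. The sketch below is a CPU-only Python simulation of that indexing for a SAXPY kernel (y = a·x + y), assuming a 1-D launch. It is not how you run code on a GPU (real kernels are written in CUDA C++ or through tools such as Numba), and the `launch` helper is hypothetical, but it shows the index arithmetic and the bounds check every such kernel needs.

```python
import math

def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y):
    """Body that one CUDA thread would execute for y[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # same as blockIdx.x * blockDim.x + threadIdx.x
    if i < len(x):                          # the last block may contain threads past the end of the data
        y[i] = a * x[i] + y[i]

def launch(kernel, n, threads_per_block, *args):
    """Emulate a 1-D launch kernel<<<blocks, threads_per_block>>>(...) with sequential loops."""
    blocks = math.ceil(n / threads_per_block)  # enough blocks to cover all n elements
    for b in range(blocks):                    # on a GPU, blocks are spread across the SMs
        for t in range(threads_per_block):     # threads in a block are scheduled in warps of 32
            kernel(b, threads_per_block, t, *args)
    return blocks

x = [float(v) for v in range(10)]
y = [1.0] * 10
blocks = launch(saxpy_kernel, len(x), 4, 2.0, x, y)
print(blocks, y)  # 3 blocks of 4 threads cover the 10 elements
```

On real hardware the two loops disappear: all blocks and threads are dispatched at once, which is where the speedup comes from. The bounds check matters because the grid size is rounded up (here 12 threads for 10 elements).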

2. cuDNN: Optimized Neural Network Libraries

The CUDA Deep Neural Network (cuDNN) library is specifically designed to accelerate neural network layers during training and inference. It provides highly optimized implementations of operations like convolutions, pooling, normalization, and activation functions, and it supports operation fusion, which combines several operations into a single kernel to cut memory traffic.

Why cuDNN Matters:

- Maximizes GPU performance for deep learning tasks.

- Reduces training time for complex models like LSTMs and Transformers.

- Seamlessly integrates with popular frameworks like TensorFlow, PyTorch, and MXNet.
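Operation fusion is easiest to see with a toy example. The plain-Python sketch below illustrates the idea only and is not the cuDNN API: it applies a bias add followed by ReLU two ways, as two separate passes that write a full intermediate array, and as a single fused pass that never materializes that intermediate. The `traffic` counter is a hypothetical bookkeeping device that counts element reads and writes.

```python
def bias_relu_unfused(x, bias):
    """Two 'kernels': each reads its full input and writes its full output."""
    traffic = 0
    tmp = [v + bias for v in x]        # kernel 1: read x, write tmp
    traffic += 2 * len(x)
    out = [max(v, 0.0) for v in tmp]   # kernel 2: read tmp, write out
    traffic += 2 * len(x)
    return out, traffic

def bias_relu_fused(x, bias):
    """One fused 'kernel': read x once, write out once, no intermediate array."""
    out = [max(v + bias, 0.0) for v in x]
    traffic = 2 * len(x)
    return out, traffic

x = [-2.0, -0.5, 0.0, 1.5, 3.0]
unfused, t1 = bias_relu_unfused(x, 1.0)
fused, t2 = bias_relu_fused(x, 1.0)
print(unfused == fused, t1, t2)  # True 20 10: same result, half the reads/writes
```

On a GPU, cheap element-wise operations like these are usually limited by memory bandwidth rather than arithmetic, so halving the trips through device memory translates fairly directly into speed.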

Example Use Case:

An AI team building a natural language processing (NLP) model for real-time translation uses cuDNN to optimize the Transformer architecture, enabling faster training and smoother deployment.

3. TensorRT: High-Performance Inference Engine

TensorRT is NVIDIA’s deep learning inference optimizer and runtime library. It focuses on deploying trained models with reduced latency while maintaining accuracy.

Key Features of TensorRT:

- Model optimization through techniques like layer fusion and precision calibration (e.g., FP32 to INT8).

- Real-time inference for low-latency applications like self-driving cars and AR/VR systems.

- Supports deployment across platforms, including edge devices and data centers.
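To illustrate what precision calibration means, here is a minimal sketch of symmetric INT8 quantization with max-absolute-value calibration in plain Python. The scale maps the largest observed magnitude to 127; each value is divided by the scale, rounded, and clamped. This is a simplified stand-in: TensorRT’s own calibrators (such as entropy calibration) choose the range more carefully, and in practice you drive calibration through TensorRT or framework tooling rather than code like this. The function names are hypothetical.

```python
def calibrate_scale(samples):
    """Max-abs calibration: choose a scale so the largest |value| maps to 127."""
    max_abs = max(abs(v) for v in samples)
    return max_abs / 127.0 if max_abs > 0 else 1.0

def quantize(values, scale):
    """FP32 -> INT8: divide by the scale, round to nearest, clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def dequantize(q, scale):
    """INT8 -> approximate FP32, as seen by the next layer."""
    return [v * scale for v in q]

activations = [0.02, -1.3, 0.76, 2.54, -2.0]
scale = calibrate_scale(activations)
q = quantize(activations, scale)
approx = dequantize(q, scale)
print(q)
print([round(a - b, 4) for a, b in zip(activations, approx)])  # per-value rounding error
```

For values inside the calibrated range the error is at most half a quantization step (`scale / 2`). The trade-off calibration manages is that a wider range avoids clipping outliers but makes each step coarser for the bulk of the values.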

Example Use Case:

A self-driving car requires real-time object detection to navigate safely. TensorRT optimizes the model to ensure split-second decisions with minimal latency.

4. NVIDIA AI Enterprise Suite

This comprehensive software suite is tailored for enterprises looking to deploy scalable AI solutions. It combines the power of NVIDIA’s hardware with tools and frameworks optimized for production environments.

Key Features:

- Simplifies AI workflows for businesses.

- Supports Kubernetes-based deployments for scalability.

- Provides enterprise-grade support and security.

Example Use Case:

A retail company uses the NVIDIA AI Enterprise Suite to integrate computer vision with real-time customer data analysis, delivering personalized product recommendations and enhancing shopping experiences.

5. RAPIDS: GPU-Accelerated Data Science

RAPIDS is a collection of open-source libraries designed to accelerate data science workflows using GPUs. It supports tasks like data preparation, visualization, and machine learning.

Why RAPIDS is Revolutionary:

- Mirrors the APIs of popular Python libraries: cuDF follows pandas, and cuML follows scikit-learn.

- Reduces the time spent on preprocessing large datasets.

- Optimized for end-to-end data pipelines, including ETL, training, and deployment.

Example Use Case:

A financial analyst uses RAPIDS to process gigabytes of transaction data for fraud detection, completing a task that typically takes hours in just minutes.

6. NVIDIA Pre-Trained Models and Model Zoo

NVIDIA provides a library of pre-trained models that developers can use for tasks like computer vision, robotics, and generative AI. These models simplify transfer learning and reduce the time required to build custom solutions.

Benefits:

- Saves time by starting with pre-trained weights.

- Reduces the need for massive datasets.

- Covers a wide range of applications, from healthcare to autonomous driving.

Example Use Case:

A healthcare startup uses a pre-trained SegFormer model from NVIDIA’s Model Zoo to develop a chest X-ray diagnostic tool, cutting development time significantly.

7. NVIDIA Triton Inference Server

Triton simplifies the deployment of AI models at scale by supporting multiple frameworks and automating model management. It’s designed for high-performance inference in production environments.

Key Features:

- Supports TensorRT, TensorFlow, PyTorch, ONNX, and more.

- Built-in model versioning for seamless updates.

- Multi-model serving to maximize resource utilization.
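Triton loads models from a model repository: one directory per model, a `config.pbtxt` describing its inputs and outputs, and numbered subdirectories holding each version, which is how model versioning works. The Python sketch below lays out such a repository for an ONNX model in a temporary directory. The model name `detector`, the tensor names `images` and `boxes`, and the shapes are hypothetical placeholders; match them to your exported model and check the Triton model configuration documentation for the fields your backend needs.

```python
import tempfile
from pathlib import Path

# Hypothetical model: an ONNX detector taking batches of 3x640x640 images.
CONFIG = """\
name: "detector"
platform: "onnxruntime_onnx"
max_batch_size: 8
input [
  {
    name: "images"
    data_type: TYPE_FP32
    dims: [ 3, 640, 640 ]
  }
]
output [
  {
    name: "boxes"
    data_type: TYPE_FP32
    dims: [ -1, 6 ]
  }
]
version_policy: { latest: { num_versions: 1 } }
"""

def build_repository(root: Path, model_name: str, versions: list[int]) -> Path:
    """Create <root>/<model_name>/config.pbtxt and one numbered folder per version."""
    model_dir = root / model_name
    model_dir.mkdir(parents=True, exist_ok=True)
    (model_dir / "config.pbtxt").write_text(CONFIG)
    for v in versions:
        version_dir = model_dir / str(v)
        version_dir.mkdir(exist_ok=True)
        # Empty placeholder; in practice copy your exported model.onnx here.
        (version_dir / "model.onnx").write_bytes(b"")
    return model_dir

root = Path(tempfile.mkdtemp())
model_dir = build_repository(root, "detector", versions=[1, 2])
print(sorted(p.relative_to(root).as_posix() for p in model_dir.rglob("*")))
```

With a real model file in place, the server is started with `tritonserver --model-repository=<root>`. Under the `latest: { num_versions: 1 }` policy Triton serves only the highest-numbered version (here `2`), so adding a new numbered folder and reloading the model is how an update rolls out.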

Example Use Case:

A logistics company uses Triton to deploy object detection models across its warehouses, ensuring efficient inventory tracking and management.

8. NVIDIA Omniverse for AI Projects

Omniverse is a platform for real-time 3D simulation and AI training. It allows developers to create highly realistic simulations for robotics, gaming, and digital twins.

Why It’s Unique:

- Enables collaboration across teams in real time.

- Provides a virtual environment for training and testing AI models.

- Supports synthetic data generation for AI training.

Example Use Case:

Ola Electric utilized its Ola Digital Twin platform, developed on NVIDIA Omniverse, to create comprehensive digital replicas of warehouse setups. This enabled the simulation of failures in dynamic environments, enhancing operational efficiency and resilience.

Key Benefits of NVIDIA’s Software Stack

- End-to-End Integration: Seamless compatibility with NVIDIA hardware.

- Performance Optimization: Libraries like cuDNN and TensorRT maximize efficiency.

- Scalability: From edge devices to data centers, NVIDIA’s software stack supports diverse deployment needs.

- Community Support: A vast ecosystem of developers and extensive documentation.

Deep Learning Frameworks Optimized for NVIDIA

NVIDIA’s AI stack is designed to work seamlessly with leading deep learning frameworks, making it the backbone of AI innovation.

Supported Frameworks

- TensorFlow: One of the most popular frameworks for building and training deep learning models, optimized with CUDA and cuDNN for maximum performance.

- PyTorch: Favored by researchers for its flexibility and ease of experimentation. NVIDIA GPUs enhance PyTorch’s computational efficiency, speeding up model training and evaluation.

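- MXNet: Historically popular for scalable training in cloud environments; note that the Apache MXNet project was retired in 2023, so new projects generally choose one of the other frameworks.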

- JAX: Combines a NumPy-like API with automatic differentiation and XLA just-in-time compilation, and runs on NVIDIA GPUs through CUDA.

Example Use Case:

A team using TensorFlow trains a deep neural network for medical image analysis. By leveraging NVIDIA’s GPUs, they reduce training time from days to hours, enabling faster iterations.

NVIDIA Pre-Trained Models and Model Zoo

NVIDIA’s Model Zoo offers a library of pre-trained models for tasks like image classification, object detection, and natural language processing.

- Transfer Learning: Developers can fine-tune pre-trained models to suit specific tasks, saving time and resources.

- Customizable Models: Models like ResNet, YOLO, and BERT are available, optimized for NVIDIA hardware.

Example Use Case:

A startup uses a pre-trained YOLOv5 model from the Model Zoo to build a real-time object detection system for retail analytics, significantly speeding up development.

Developer Tools for NVIDIA’s AI Stack

NVIDIA provides a comprehensive suite of tools to simplify and optimize the development process.

1. NVIDIA Nsight Tools

Nsight tools are essential for profiling and debugging deep learning workloads. They help developers identify performance bottlenecks and optimize GPU usage.

- Nsight Compute: Analyze kernel performance and optimize code execution.

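- Nsight Systems: Profile the whole application on a timeline that shows CPU activity, CUDA kernels, and memory transfers, making it easy to spot idle GPU time and other bottlenecks.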
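Nsight Systems is most useful when your own code marks the phases you care about. A common approach is to wrap those phases in NVTX ranges so they appear as labeled bars on the timeline. The sketch below assumes PyTorch, which exposes `torch.cuda.nvtx.range_push` and `torch.cuda.nvtx.range_pop`; the `nvtx_range` helper and the `train_step` function are hypothetical, and the helper quietly does nothing when PyTorch or a GPU is unavailable, so the same script runs anywhere.

```python
import contextlib

@contextlib.contextmanager
def nvtx_range(name):
    """Mark a named range on the Nsight Systems timeline (no-op without PyTorch + CUDA)."""
    try:
        import torch
        enabled = torch.cuda.is_available()
    except ImportError:
        enabled = False
    if enabled:
        torch.cuda.nvtx.range_push(name)
    try:
        yield
    finally:
        if enabled:
            torch.cuda.nvtx.range_pop()

def train_step(batch):
    # Hypothetical stand-in for real data loading and forward/backward work.
    with nvtx_range("data"):
        data = [v * 2 for v in batch]
    with nvtx_range("compute"):
        return sum(data)

print(train_step([1, 2, 3]))  # 12
```

Profiling the script with `nsys profile --trace=cuda,nvtx -o train_report python train.py` produces a report you can open in the Nsight Systems GUI; gaps between the "data" and "compute" ranges point to input-pipeline stalls.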

Example Use Case:

A data scientist uses Nsight Systems to profile the training loop of a complex neural network, pinpoints the bottlenecks, and reduces runtime by 30%.

2. NVIDIA DIGITS

DIGITS is a user-friendly, browser-based interface for training, visualizing, and evaluating deep learning models. Note that it has not been actively developed in recent years.

- Features:

- Simplifies hyperparameter tuning.

- Provides real-time training metrics.

- Supports image classification and object detection.

Example Use Case:

A beginner uses DIGITS to train a CNN for recognizing handwritten digits, gaining insights without writing extensive code.

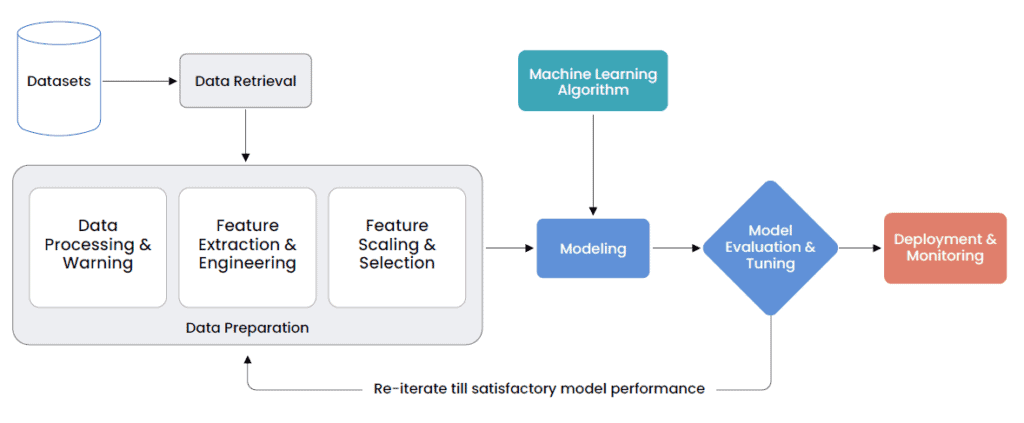

End-to-End Workflow with NVIDIA’s AI Stack

NVIDIA’s AI stack offers a streamlined workflow for every stage of an AI project:

1. Data Preparation

- Use RAPIDS to process, clean, and visualize large datasets with GPU acceleration.

Example: A retail company analyzes millions of transactions in hours instead of days, identifying customer behavior trends.

2. Model Training

- Train deep learning models using CUDA and cuDNN for optimized performance.

Example: Researchers train a GAN (Generative Adversarial Network) for generating realistic artwork, cutting training time by 40%.

3. Deployment

- Deploy optimized models with TensorRT for low-latency applications.

Example: An autonomous vehicle system uses TensorRT to process real-time sensor data, ensuring split-second decision-making.

4. Monitoring and Optimization

- Use Nsight Tools to monitor performance and identify areas for improvement.

Example: A data scientist uses Nsight Systems to discover that the GPU sits idle while each batch loads, then overlaps data loading with computation to raise GPU utilization.

Real-World Applications Powered by NVIDIA

NVIDIA’s AI stack drives innovation across diverse industries:

1. Autonomous Vehicles

- NVIDIA’s GPUs and TensorRT power AI models for self-driving cars, enabling real-time object detection, path planning, and collision avoidance.

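- Earlier Tesla Autopilot hardware (HW2 and HW2.5) was built on NVIDIA’s DRIVE PX 2 platform before Tesla moved to its in-house FSD chip; automakers such as Mercedes-Benz and Volvo build on NVIDIA DRIVE Orin.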

Autonomous Driving Vehicles: Source

2. Healthcare

- AI-enhanced imaging tools, powered by NVIDIA GPUs, accelerate diagnostics and enable precision medicine.

3. Gaming and Graphics

- NVIDIA GPUs drive real-time ray tracing and AI-enhanced graphics for immersive gaming experiences.

- NVIDIA DLSS (Deep Learning Super Sampling) renders frames at a lower internal resolution and uses AI to upscale them, delivering smoother gameplay at higher output resolutions.

4. Enterprise AI

- Enterprises deploy NVIDIA’s AI stack to enhance customer service, optimize logistics, and analyze market trends.

Challenges and Considerations

While NVIDIA’s AI stack is undeniably powerful, leveraging it effectively comes with its own set of challenges. Here are the key considerations to keep in mind:

1. High Hardware Costs

NVIDIA’s cutting-edge GPUs like the A100 and H100 are unmatched in performance, but they come with a high price tag.

- Impact: This makes them less accessible to startups, small teams, or individual developers with limited budgets.

- Workaround: Cloud options such as NVIDIA DGX Cloud or NVIDIA GPU instances on AWS, Google Cloud, and Microsoft Azure give developers access to powerful hardware without upfront investment.

2. Complexity of CUDA Programming

CUDA provides immense flexibility and optimization potential, but it requires specialized knowledge in parallel programming.

- Impact: Beginners may face a steep learning curve, especially when integrating CUDA into deep learning frameworks.

- Workaround: NVIDIA offers extensive documentation, tutorials, and courses through the Deep Learning Institute (DLI) to help developers master CUDA programming.

3. Dependency on NVIDIA Ecosystem

By adopting NVIDIA’s AI stack, developers often become heavily reliant on its ecosystem of tools and hardware.

- Impact: Switching to non-NVIDIA platforms can be challenging due to compatibility issues.

- Workaround: Keep most of your code portable by building models in open-source frameworks such as PyTorch or JAX and exporting to open formats such as ONNX, so that only the performance-critical pieces depend on NVIDIA-specific tools.

4. Energy Consumption

High-performance GPUs are energy-intensive, raising concerns about sustainability and operational costs.

- Impact: This is particularly challenging for organizations managing large data centers.

- Workaround: NVIDIA’s newer GPUs, like the H100, deliver substantially more performance per watt than earlier generations, so a given workload can use less energy overall even though each GPU’s peak power draw is higher.

Understanding these challenges upfront helps developers make informed decisions and plan their projects effectively, ensuring they maximize the benefits of NVIDIA’s stack while addressing potential roadblocks.

How to Get Started with NVIDIA’s AI Stack

To unlock the full potential of NVIDIA’s AI stack, follow this structured approach:

1. Setting Up Your Environment

- Download and Install CUDA Toolkit: This is the foundation for GPU-accelerated development.

- Ensure your system meets the minimum requirements, including compatible hardware and drivers.

- Install cuDNN: This library enhances the performance of neural network training and inference.

- Tip: Download the version that matches your CUDA installation for seamless compatibility.

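- Choose Your Framework: Popular options like TensorFlow, PyTorch, and JAX are optimized for NVIDIA GPUs. Install the GPU-enabled builds of these frameworks. Note that prebuilt PyTorch wheels bundle their own CUDA runtime and cuDNN, so a compatible NVIDIA driver is all they require.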
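Once everything is installed, a quick sanity check confirms that the framework can see the GPU and which CUDA and cuDNN versions it was built against. The sketch below assumes PyTorch as the framework; `torch.cuda.is_available()`, `torch.version.cuda`, `torch.backends.cudnn.version()`, and `torch.cuda.get_device_name()` are standard PyTorch calls, while the `gpu_report` helper itself is hypothetical and falls back gracefully when PyTorch is not installed.

```python
def gpu_report():
    """Summarize what PyTorch sees: CUDA availability, build versions, and devices."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_available": False, "devices": []}
    available = torch.cuda.is_available()
    return {
        "torch": torch.__version__,
        "cuda_available": available,
        "cuda_build": torch.version.cuda,  # None for CPU-only builds
        "cudnn": torch.backends.cudnn.version() if available else None,
        "devices": [torch.cuda.get_device_name(i)
                    for i in range(torch.cuda.device_count())] if available else [],
    }

for key, value in gpu_report().items():
    print(f"{key}: {value}")
```

If `cuda_available` is `False` on a machine with an NVIDIA GPU, the usual suspects are a CPU-only framework build or a driver too old for the CUDA version the framework was built with; `nvidia-smi` shows the installed driver and the highest CUDA version it supports.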

2. Leverage Learning Resources

NVIDIA provides a wealth of learning materials to help developers master its tools:

- NVIDIA Deep Learning Institute (DLI): Offers hands-on courses, some of them free, on topics like CUDA programming, TensorRT optimization, and RAPIDS for data science.

- Online Tutorials and Community Forums: Platforms like NVIDIA Developer Forums and Stack Overflow provide solutions to common challenges.

Pro Tip: Start with beginner-friendly projects and gradually move to more complex tasks as you gain confidence.

3. Choosing the Right Hardware

- For Individual Developers: GPUs like the RTX 40-series are affordable and ideal for small-scale projects.

- For Research Teams or Startups: The A100 GPU or DGX Station provides scalability and performance.

- For Enterprises: DGX Systems and cloud-based NVIDIA GPUs ensure maximum efficiency for large-scale AI deployments.

4. Build a Simple Project

- Start with small projects like training a CNN for image classification or running a pre-trained model from NVIDIA’s Model Zoo.

- Use tools like RAPIDS to prepare data and TensorRT for deployment to get a feel for the end-to-end workflow.

5. Explore Advanced Tools

Once comfortable with the basics, explore advanced tools like:

- Nsight for Profiling and Debugging: Optimize performance by identifying bottlenecks in your code.

- Triton Inference Server: Simplify deployment for production-ready AI applications.

- Omniverse: Experiment with real-time 3D simulation and synthetic data generation for cutting-edge projects.


Conclusion

NVIDIA’s AI stack is the ultimate toolkit for developers and researchers. From cutting-edge hardware to optimized software, it accelerates every step of the AI pipeline. By integrating NVIDIA’s ecosystem into your workflow, you can unlock the full potential of deep learning and drive impactful innovation.

Let 2025 be the year you harness the power of NVIDIA and take your projects to the next level.

The post A Comprehensive Guide to NVIDIA’s AI Stack for Deep Learning Projects appeared first on OpenCV.

Programming Skills: If you’re already familiar with languages like Python or C++, you’re on the right track. These languages are widely used in computer vision, particularly Python, due to libraries like OpenCV and TensorFlow. Even a basic understanding of coding can be a great starting point since many tutorials and projects will build on what you already know.

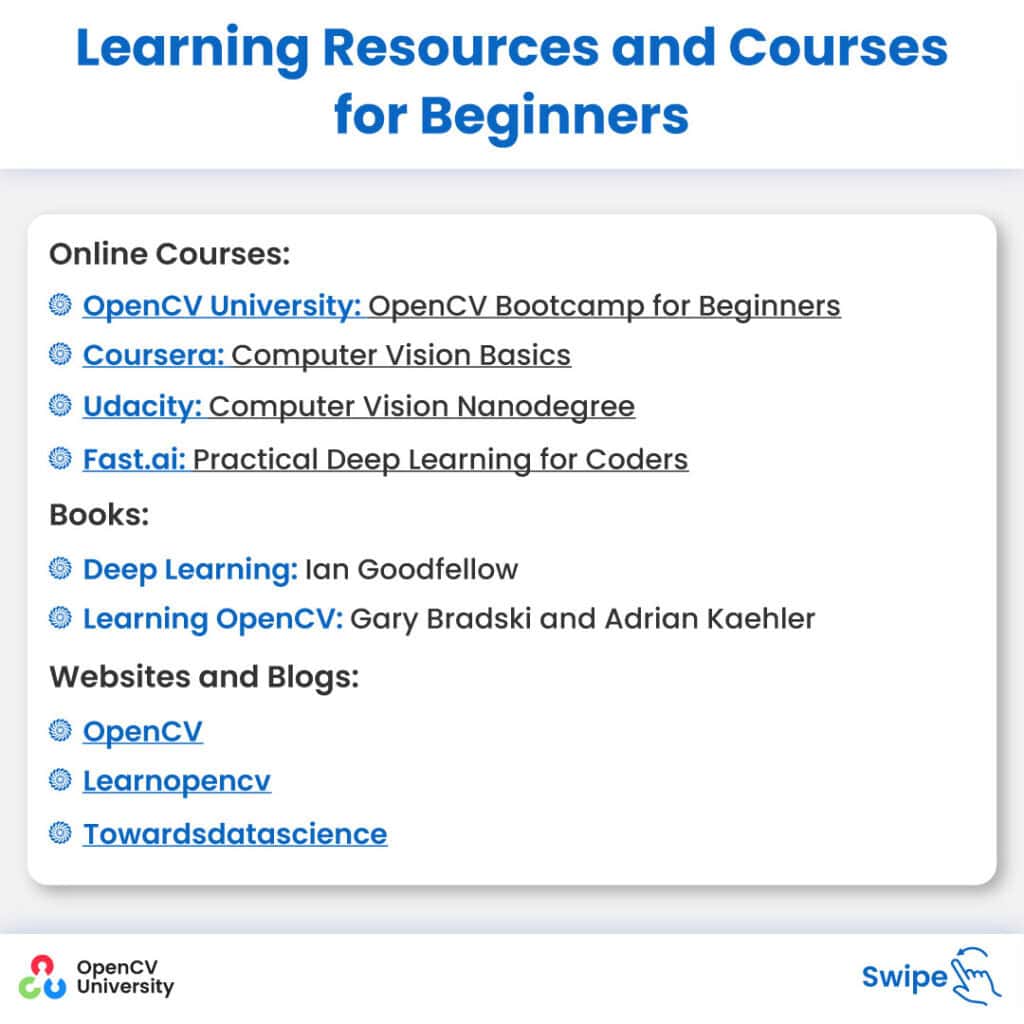

Online Courses:

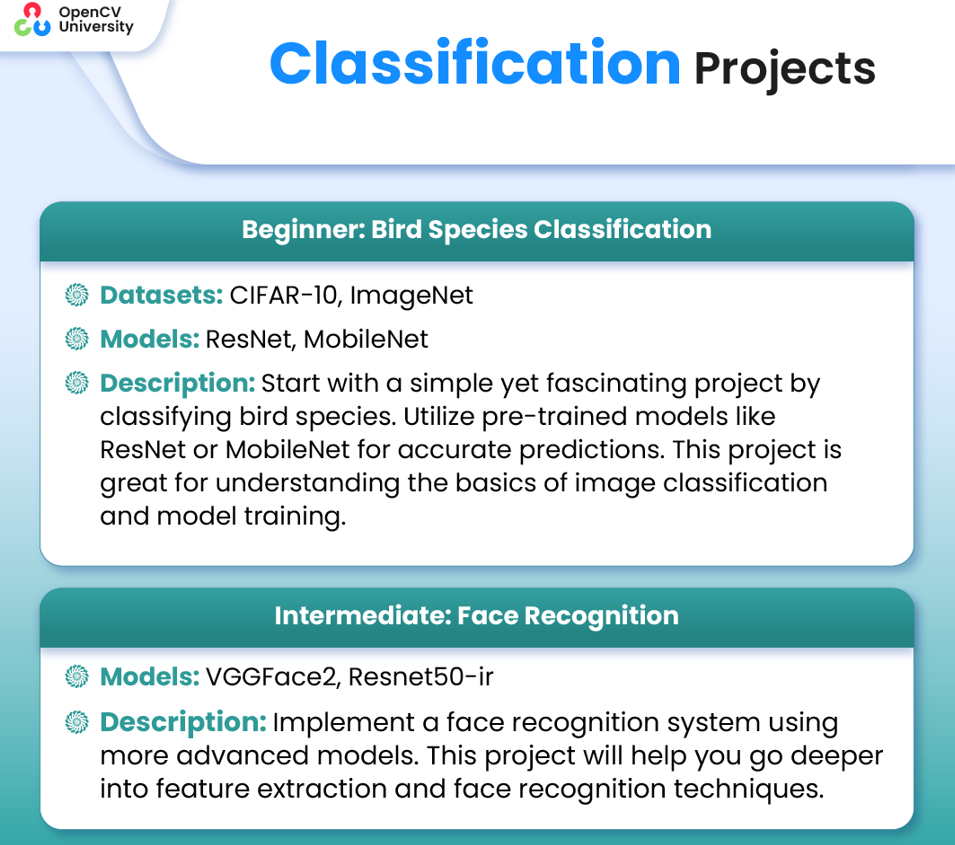

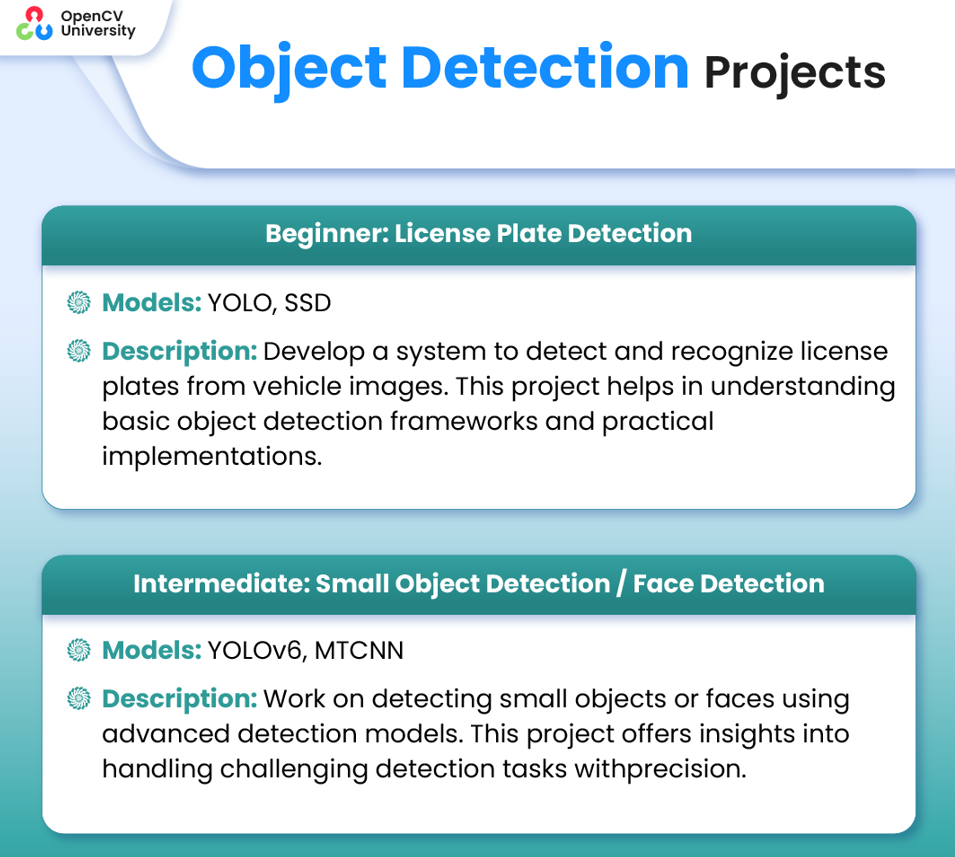

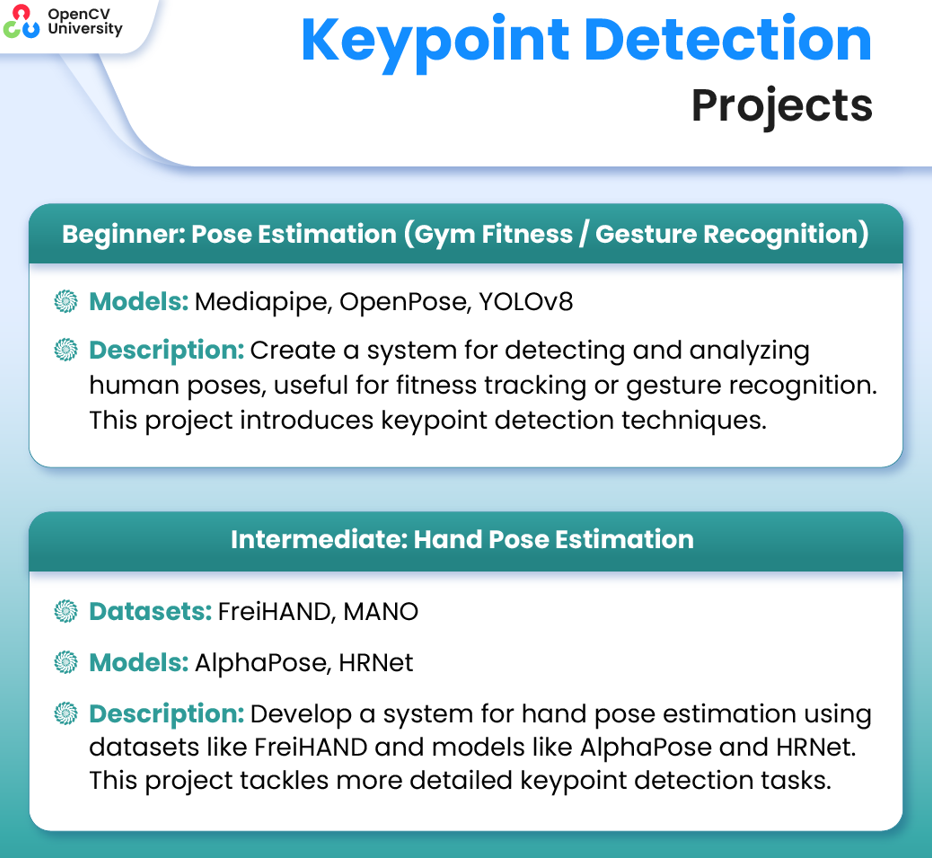

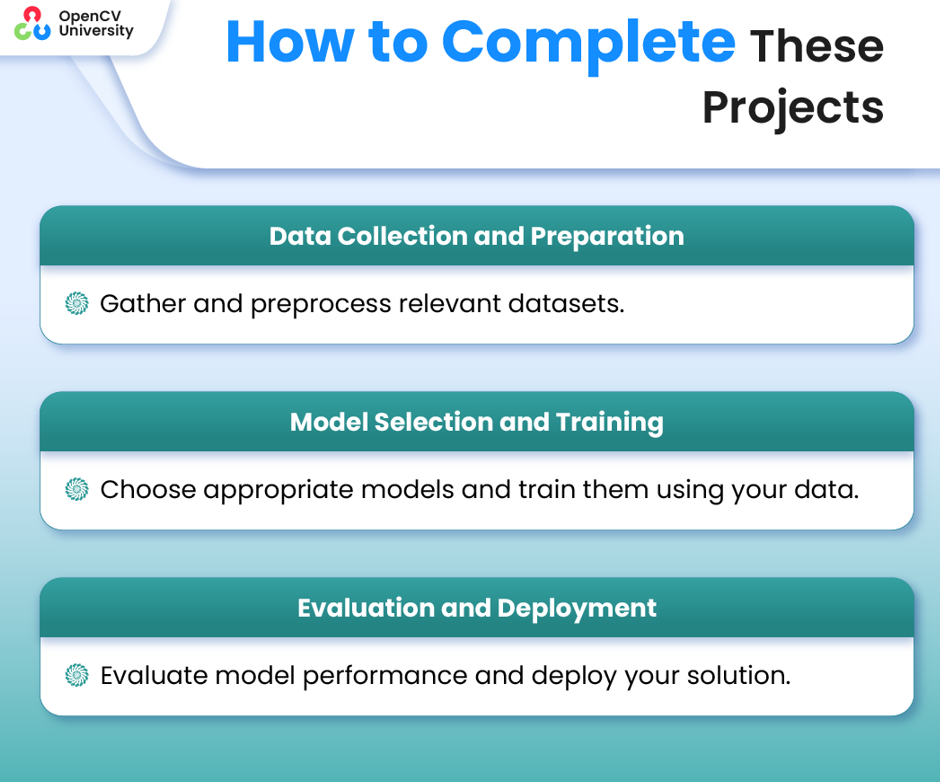

Start Small: Begin with basic projects, such as image classification or object detection. These foundational tasks are relatively simple but show your ability to work with computer vision tools and datasets. You can find plenty of tutorials and datasets online to guide you through your first projects.

Join Professional Networks: IEEE, ACM, and local AI meetups.

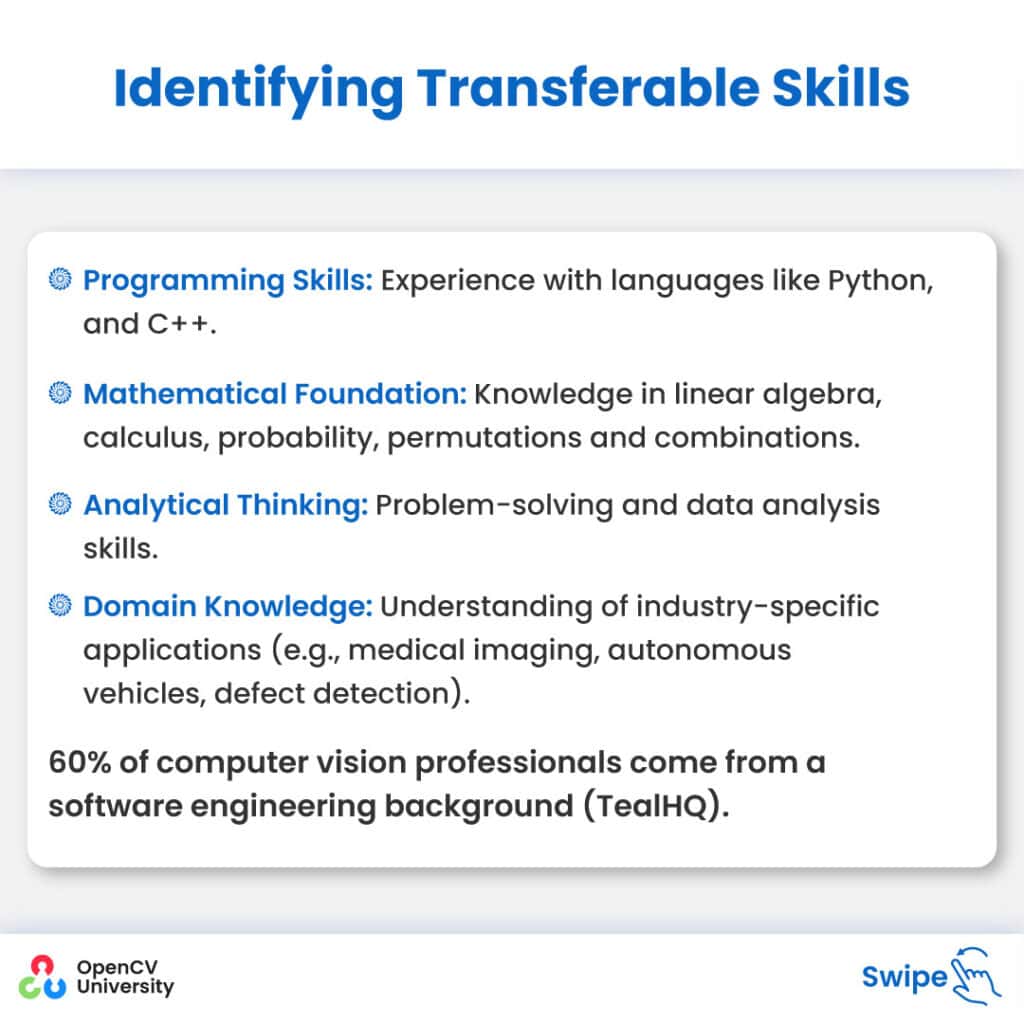

Review Your Transferable Skills: Reflect on the programming, analytical, mathematical, and domain-specific knowledge you already possess. These can form a solid foundation as you move into computer vision.
