Revolutionize Your Email Workflow with AI

We are happy to announce the release of Stalwart Mail Server v0.10.3, which introduces support for AI models, a powerful new feature now available to Enterprise Edition users as well as our GitHub and OpenCollective sponsors. With this feature, Stalwart Mail Server can be integrated with both self-hosted and cloud-based Large Language Models (LLMs), bringing LLM-based analysis to email processing.

This integration allows you to use AI models for a variety of tasks, including enhanced spam filtering, threat detection, and intelligent email classification. Whether you choose to host your own models with LocalAI or leverage cloud-based services like OpenAI or Anthropic, this release provides the flexibility to incorporate cutting-edge AI into your email infrastructure.

Unlocking the Power of AI

With the introduction of AI model integration, Stalwart Mail Server can now analyze email content more deeply than traditional filters ever could. For instance, in the realm of spam filtering and threat detection, AI models are highly effective at identifying patterns and detecting malicious or unsolicited content. The system works by analyzing both the subject and body of incoming emails through the lens of an LLM, providing more accurate detection and filtering.

In addition to bolstering security, AI integration enhances email classification. By configuring customized prompts, administrators can instruct AI models to categorize emails based on their content, leading to more precise filtering and organization. This is particularly useful for enterprises managing a high volume of messages that span various topics and departments, as AI-driven filters can quickly and intelligently sort messages into categories like marketing, personal correspondence, or work-related discussions.

The flexibility of using either self-hosted or cloud-based AI models means that Stalwart can be tailored to your infrastructure and performance needs. Self-hosting AI models ensures full control over data and privacy, while cloud-based models offer ease of setup and access to highly optimized, continuously updated language models.

LLMs in Sieve Scripts

One of the most exciting features of this release is the ability for users and administrators to access AI models directly from Sieve scripts. Stalwart extends the Sieve scripting language by introducing the llm_prompt function, which allows users to send prompts and email content to the AI model for advanced processing.

For example, the following Sieve script demonstrates how an AI model can be used to classify emails into specific folders based on the content:

require ["fileinto", "variables", "vnd.stalwart.expressions"];

# Base prompt for email classification
let "prompt" '''You are an AI assistant tasked with classifying personal emails into specific folders.
Your job is to analyze the email's subject and body, then determine the most appropriate folder for filing.
Use only the folder names provided in your response.
If the category is not clear, respond with "Inbox".

Classification Rules:
- Family:
   * File here if the message is signed by a Doe family member
   * The recipient's name is John Doe
- Cycling:
   * File here if the message is related to cycling
   * File here if the message mentions the term "MAMIL"
- Work:
   * File here if the message mentions "Dunder Mifflin Paper Company, Inc." or any part of this name
   * File here if the message is related to paper supplies
   * Only classify as Work if it seems to be part of an existing sales thread or directly related to the company's operations
- Junk Mail:
   * File here if the message is trying to sell something and is not work-related
   * Remember that John lives a minimalistic lifestyle and is not interested in purchasing items
- Inbox:
   * Use this if the message doesn't clearly fit into any of the above categories

Analyze the following email and respond with only one of these folder names: Family, Cycling, Work, Junk Mail, or Inbox.
''';

# Prepare the base Subject and Body
let "subject" "thread_name(header.subject)";
let "body" "body.to_text";

# Send the prompt, subject, and body to the AI model
let "llm_response" "llm_prompt('gpt-4', prompt + '\n\nSubject: ' + subject + '\n\n' + body, 0.6)";

# File the message only if the reply is one of the known folder names.
# "${llm_response}" expands the variable (Sieve variables syntax, RFC 5229);
# a bare "llm_response" would file into a folder literally named llm_response.
if eval "contains(['Family', 'Cycling', 'Work', 'Junk Mail'], llm_response)" {
    fileinto "${llm_response}";
}

This example demonstrates how the llm_prompt function can be used to classify emails into categories such as Family, Cycling, Work, or Junk Mail. The AI model analyzes the message’s subject and body according to the classification rules defined in the prompt and returns the most appropriate folder name. If the reply matches one of the listed folders, the email is filed there automatically. If the model answers Inbox, or anything else outside the list, no fileinto action runs and the message is delivered to the inbox as usual.
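The same building blocks can be pointed at the spam and threat detection use case mentioned earlier. The following is only a minimal sketch, not a script shipped with Stalwart: the prompt wording and the "verdict" variable name are made up for illustration, while the model name, the third llm_prompt argument, and the "Junk Mail" folder are simply copied from the classification example above.

```sieve
require ["fileinto", "vnd.stalwart.expressions"];

# Illustrative prompt asking for a one-word verdict (wording is an assumption)
let "prompt" '''You are an email security assistant.
Analyze the subject and body of the following email.
Respond with only one word: "Spam" if the message is unsolicited or malicious, otherwise "Ham".
''';

# Same inputs as the classification example
let "subject" "thread_name(header.subject)";
let "body" "body.to_text";

# Model name and third argument are copied unchanged from the classification example
let "verdict" "llm_prompt('gpt-4', prompt + '\n\nSubject: ' + subject + '\n\n' + body, 0.6)";

# Act only on an exact "Spam" reply; any other reply leaves the message untouched
if eval "contains(['Spam'], verdict)" {
    fileinto "Junk Mail";
}
```

Matching the reply against a fixed list, as the classification script does, means an unexpected or malformed model response never moves a message; it simply falls through to normal delivery.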

Self-Hosted or Cloud-Based

With this new feature, Stalwart Mail Server allows for seamless integration with both self-hosted and cloud-based AI models. If you prefer full control over your infrastructure, you can opt to deploy models on your own hardware using solutions like LocalAI. Self-hosting gives you complete ownership over your data and ensures compliance with privacy policies, but it may require significant computational resources, such as GPU acceleration, to maintain high performance.

Alternatively, you can integrate with cloud-based AI providers like OpenAI or Anthropic, which offer access to powerful, pretrained models with minimal setup. Cloud-based models provide cutting-edge language processing capabilities, but you should be aware of potential costs, as these providers typically charge based on the number of tokens processed. Whether you choose self-hosted or cloud-based models, Stalwart gives you the flexibility to tailor the AI integration to your specific needs.

Available for Enterprise Users and Sponsors

This exciting AI integration feature is exclusively available for Enterprise Edition users as well as GitHub and OpenCollective monthly sponsors. If you want to harness the full potential of AI-powered email processing in Stalwart Mail Server, upgrading to the Enterprise Edition or becoming a sponsor is a great way to access this feature and other advanced capabilities.

Try It Out Today!

The release of Stalwart Mail Server v0.10.3 marks a major milestone in our journey toward building intelligent, highly customizable email management solutions. By combining traditional email filtering with the power of LLMs, Stalwart gives you the tools to take your email infrastructure to the next level, enhancing security, organization, and automation in ways that were previously impossible. We’re excited to see how you’ll use this new feature to optimize your email workflows!

Next-Gen AI Gadgets: Rabbit R1 vs SenseCAP Watcher

Authored by Mengdu and published on Hackster, for sharing purposes only.

A comparison of the design, UI, and user experience of two AI gadgets, the Rabbit R1 and the SenseCAP Watcher, focusing on hardware and interaction highlights rather than application details.

Story

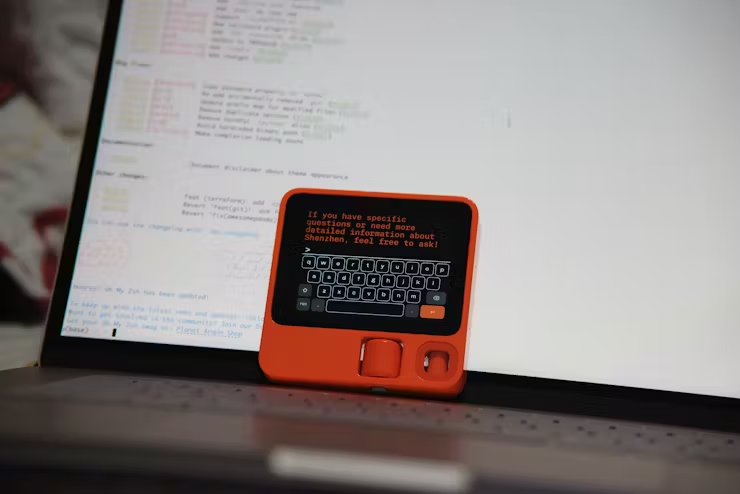

The world of AI gadgets is rapidly evolving, with companies racing to deliver intelligent home companions. Two such devices, the Rabbit R1 and the SenseCAP Watcher, recently caught my attention in very different ways: brilliant marketing persuaded me to buy the former, while the latter was a review unit that Seeed Studio touts as a “Physical AI Agent.”

Intrigued by the potential overlap between these products, I spent time testing them side by side. This review offers a candid assessment of their design, user interfaces, and core interactions. However, I’ll steer clear of Rabbit’s app ecosystem and third-party software integration capabilities, as the Watcher lacks such functionality by design.

My goal is to unravel the unique propositions each gadget brings to the AI gadgets market and uncover any surprising distinctions or similarities. Join me as I separate gimmick from innovation in this emerging product category.

Packaging

Rabbit really went all out with the packaging for the R1. As soon as I got the box, I could tell this wasn’t your average gadget. Instead of cheap plastic, the R1 comes cocooned in a crystal-clear acrylic case. It looks and feels incredibly premium.

It allows you to fully admire the R1’s design and interactive components like the scroll wheel and speakers before even taking it out. Little etched icons map out exactly what each part does.

The acrylic case doesn’t just protect – it also doubles as a display stand for the R1. There’s a molded pedestal that cradles the plastic body, letting you showcase the device like a museum piece.

By the time I finally got the R1 out of its clear jewel case, I was already grinning like a kid on Christmas day. The whole unboxing makes you feel like you’re uncovering a precious gadget treasure.

While the Watcher costs roughly half as much as the Rabbit R1, its eco-friendly cardboard packaging is anything but cheap. Extracting the Watcher unit itself is a simple matter of gently lifting it from the integrated enclosure.

At first glance, like me, you may puzzle over the purpose of the various cutouts, folds, and perforations. But a quick peek at their wiki reveals this unassuming exterior actually transforms into a multi-functional stand!

Echoing the form of a desktop calendar, a central cutout cradles the Watcher body, allowing it to be displayed front-and-center on your desk like a compact objet d’art. It is a clever, well-considered touch that earns the design team kudos!

Interaction Logic

Although the R1 is equipped with speakers, a microphone, a camera, a scroll wheel, and a touchscreen display, it severely restricts touch input. The touchscreen ignores touches for general commands and controls and only accepts input through an on-screen virtual keyboard in specific scenarios, such as entering a WiFi password or using the terminal interface.

The primary interaction method is strictly voice-driven, which feels counterintuitive given the prominent touchscreen hardware. It’s puzzling why Rabbit’s design team limited core touch functionality on the included touchscreen display.

The overall operation logic also takes some getting used to. Take the side button, dubbed “PTT” (push-to-talk): its function varies with context.

This unintuitive behavior tripped me up when configuring WiFi. After tapping “connect”, I instinctively tried hitting PTT again to go back, only to accidentally cancel the connection instead. It wasn’t until later that I realized using the scroll wheel to navigate to the very top option, then pressing PTT is the correct “back” gesture.

While not necessarily a flaw, this interaction model defies typical user expectations. Most would assume a core navigation function like “back” to be clearly visible and accessible without obscure gestures. Having to precisely scroll to the top option every single time just to return to the previous menu is quite cumbersome, especially for nested settings trees.

This jarring lack of consistency in the control scheme is truly baffling. The operation logic appears haphazardly scattered across different button combinations and gestures depending on the context. Mastering the R1’s controls feels like an exercise in memorizing arbitrary rules rather than intuitive design principles.

In contrast to the Rabbit R1, the Watcher device seems to have a much simpler and more consistent interaction model. This could be attributed to the fact that the Watcher’s operations are inherently not overly complex, and it relies on a companion smartphone app for assistance in many scenarios.

Like the R1, the Watcher is equipped with a scroll wheel, camera, touchscreen, microphone, and speakers. Additionally, it has various pin interfaces for connecting external sensors, which may appeal to developers looking to tinker.

Commendably, the current version of the Watcher maintains a high degree of unity in its operational logic. Pressing the scroll wheel confirms a selection, scrolling up or down moves the cursor accordingly, and a long press initiates voice interaction with the device. This level of consistency is praiseworthy.

Moreover, the touchscreen is fully functional, so users can navigate with either the scroll wheel or touch input. Both methods behave consistently yet work independently of each other, which is a welcome design choice.

However, one minor drawback is that the interactions lack the fluid, responsive feel of smartphone interfaces. Both scroll wheel and touch input suffer from some dropped frames and latency, which may be a common limitation of microcontroller-based devices.

When I mentioned that “it relies on a companion smartphone app for assistance in many scenarios,” I was referring to the inability to enter long text, such as a WiFi password, directly on the Watcher’s small screen. This reliance is somewhat unfortunate.

However, given the Watcher’s intended positioning as a device meant to be installed in a fixed location, perhaps mounted on a wall, it is understandable that users may not always need to operate it directly. The design team likely factored in the convenience of using a smartphone app for certain operations, as you wouldn’t necessarily be handling the Watcher itself at all times.

What can they do?

At its core, the Rabbit R1 leverages cloud-based large language models and computer vision AI to provide natural language processing, speech recognition, image identification and generation, and more. It has an array of sensors including cameras, microphones and environmental detection to take in multimodal inputs.

One of the Rabbit R1’s marquee features is voice search and question answering. Simply press the push-to-talk button and ask it anything, like “What were last night’s NBA scores?” or “What’s the latest on the TikTok ban?”. The AI will quickly find and recite relevant, up-to-date information drawn from the internet.

The SenseCAP Watcher, while also employing voice interaction and large language models, takes a slightly different approach. By long-pressing the scroll wheel on the top right of the Watcher, you can ask it profound existential questions like “Can you tell me why I was born into this world? What is my value to the universe?” It will patiently provide some insightful, if ambiguous, answers.

However, the key difference lies in contextual awareness: unlike the Rabbit R1, the Watcher can’t incorporate your current time and location into its responses. So while both devices might ponder the meaning of life with you, only the Rabbit R1 could tell you where to find the nearest open café to continue your existential crisis over a cup of coffee.

While both devices offer voice interaction capabilities, their approaches to visual processing showcase even more distinct differences.

Vision mode allows the Rabbit R1’s built-in camera to identify objects you point it towards. I found it was generally accurate at recognizing things like office supplies, food, and electronics, though it did mistake my iPhone 16 Pro Max for older models a couple of times. This feature essentially turns the Rabbit R1 into a pocket-sized seeing-eye dog, ready to describe the world around you at a moment’s notice.

Unlike the Rabbit R1’s general-purpose object recognition, the Watcher’s visual capabilities appear to be tailored for a specific task. It’s not designed to be your all-seeing companion, identifying everything from your morning bagel to your office stapler.

Things are starting to get interesting. Seeed Studio calls the SenseCAP Watcher a “Physical AI Agent” – a term that initially puzzled me.

The term “Physical” refers to its tangible presence in the real world, acting as a bridge between our physical environment and large language models.

I’m the parent of a mischievous toddler who has a habit of running off naked while I’m tidying up the bathroom, which often ends with them catching a chill. I set up a simple task for the Watcher: “Alert me if my child leaves the bathroom without clothes on.” Now, the device uses its AI to recognize my child, determine if they’re dressed, and notify me immediately if they attempt to make a nude escape.

Unlike traditional cameras or smart devices, the Watcher doesn’t merely capture images or respond to voice commands. Its sophisticated AI allows it to analyze and interpret its surroundings, understanding not just what objects are present, but also the context and activities taking place.

I’ve experienced its autonomous capabilities firsthand as a working parent with a hectic schedule. After a long day at the office and tending to my kids, I usually collapse on the couch late at night for some much-needed TV time. However, I often doze off, leaving the TV and lights on all night, much to my wife’s annoyance the next morning.

Enter the Watcher. I’ve set it up to monitor my situation during late-night TV watching. Using its advanced AI, the Watcher can detect when I’ve fallen asleep on the couch. Once it recognizes that I’m no longer awake, it springs into action. Through its integration with my Home Assistant system, the Watcher triggers a series of automated actions: the TV switches off, the living room lights dim and then turn off, and the air conditioning adjusts to a comfortable sleeping temperature.

The “Agent” aspect of the Watcher emphasizes its role as an autonomous assistant. Users can assign tasks to the device, which then operates independently to achieve those goals. This might involve interacting with other smart devices, making decisions based on observed conditions, or providing insights without constant human input. It offers a new level of environmental awareness and task execution, potentially changing how we interact with AI in our daily lives.

You might think that devices like the Rabbit R1 could perform similar tasks. However, you’ll quickly realize that the Watcher’s capabilities are the result of Seeed Studio’s dedicated efforts to optimize large language models specifically for this purpose.

When it comes to analyzing behavior, the Rabbit R1 often gives ambiguous answers. For instance, it might suggest that a person “could be smoking” or “might be sleeping.” This ambiguity directly limits its ability to take decisive action. It is probably a common problem with AI devices at the moment: too much hedging and indecision. We sometimes find them cumbersome, often because they can’t be as decisive as humans.

I think I now understand why Seeed Studio calls it a “Physical AI Agent.” I can use it in many of my own scenarios. It could detect if your kid has an accident and wets the bed, then alert you. If it sees your pet causing mischief, it can recognize the bad behavior and give you a heads-up.

If a package arrives at your door, the Watcher can identify it’s a delivery and let you know, rather than just sitting there unknowingly. It’s an always-vigilant smart camera that processes what it sees almost like having another set of eyes monitoring your home or office.

As for their distinct focus areas, the ambition on the Rabbit R1 side is to completely replace traditional smartphones by doing everything via voice control. Their wildest dream is that even if you metaphorically chopped off both your hands, you could just tell the R1 “I want to order food delivery” and it would magically handle the entire process from ordering to payment to confirming arrival – all without you having to lift a finger.

Instead of overcomplicating it with technical jargon about sensors and AI models, the key is that the Watcher has enough awareness to comprehend events unfolding in the physical world around it and keep you informed, no fiddling required on your end.

Perhaps this duality of being an intelligent aide with a tangible physical embodiment is the core reason why Seeed Studio dubs the Watcher a “Physical AI Agent.” Unlike disembodied virtual assistants residing in the cloud, the Watcher has a real-world presence – acting as an ever-present bridge that allows advanced AI language models to directly interface with and augment our lived physical experiences. It’s an attentive, thoughtful companion truly grounded in our reality.

Conclusion

The Rabbit R1 and SenseCAP Watcher both utilize large language models combined with image analysis, representing innovative ways to bring advanced AI into physical devices. However, their application goals differ significantly.

The Watcher, as a Physical AI Agent, focuses on specific scenarios within our living spaces. It continuously observes and interprets its environment, making decisions and taking actions to assist users in their daily lives. By integrating with smart home systems, it can perform tasks autonomously, effectively replacing repetitive human labor in defined contexts.

Rabbit R1, on the other hand, aims to revolutionize mobile computing. Its goal is to replace traditional smartphones by offering a voice-driven interface that can interact with various digital services and apps. It seeks to simplify and streamline how we engage with technology on the go.

Both devices represent early steps towards a future where AI is more deeply integrated into our daily lives. The Watcher showcases how AI can actively participate in our physical spaces, while the R1 demonstrates AI’s potential to transform our digital interactions. As pioneering products, they offer glimpses into different facets of our AI-enhanced future, inviting us to imagine a world where artificial intelligence seamlessly blends with both our physical and digital realities.

There is no clear “winner” here.

Regardless of how successful these first iterations prove to be, Rabbit and Seeed Studio have staked out unique perspectives on unlocking productivity gains from large language models. Their distinct offerings are pioneering explorations that will undoubtedly hold a place in the historical arc of ambient AI development.

If given the opportunity to experience them first-hand, I wholeheartedly recommend picking up both devices. While imperfect, they provide an enthralling glimpse into the future – where artificial intelligence transcends virtual assistants confined to the cloud, and starts manifesting true cognition of our physical spaces and daily lives through thoughtful hardware/software synergies.

The post Next-Gen AI Gadgets: Rabbit R1 vs SenseCAP Watcher appeared first on Latest Open Tech From Seeed.