Exploring fungal intelligence with biohybrid robots powered by Arduino

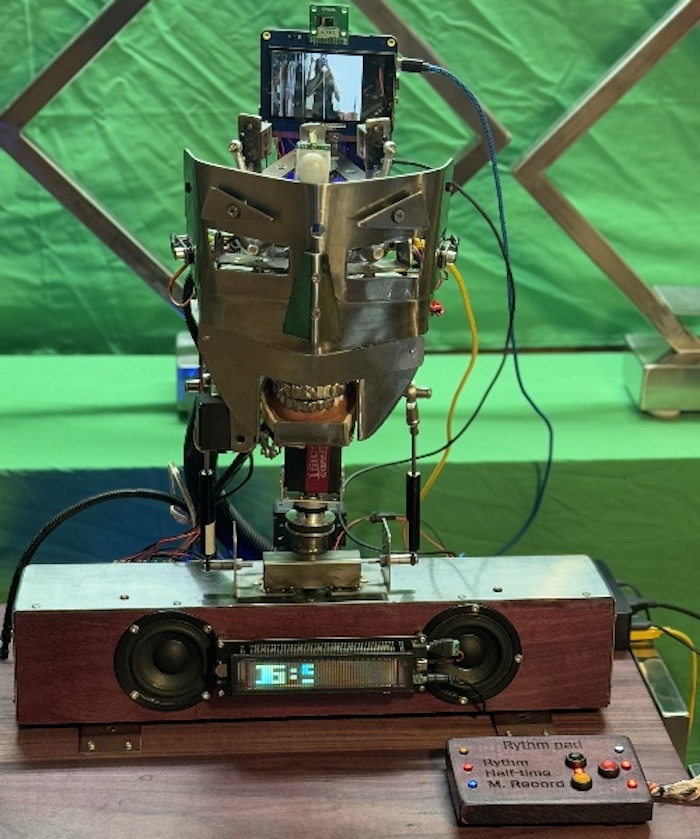

At Cornell University, Dr. Anand Kumar Mishra and his team have been conducting groundbreaking research that brings together robotics, biology, and engineering. Their recent experiments, published in Science Robotics, explore how fungal mycelia can be used to control robots. The team has built biohybrid robots that move in response to electrical signals generated by fungi, a fascinating development for both fields.

A surprising solution for robotics: fungi

Biohybrid robots have traditionally relied on animal or plant cells to control movement. Dr. Mishra’s team is introducing a new component into this field: fungi, which are resilient, easy to culture, and able to thrive in a wide range of environmental conditions. These traits make them strong candidates for long-term applications in biohybrid robotics.

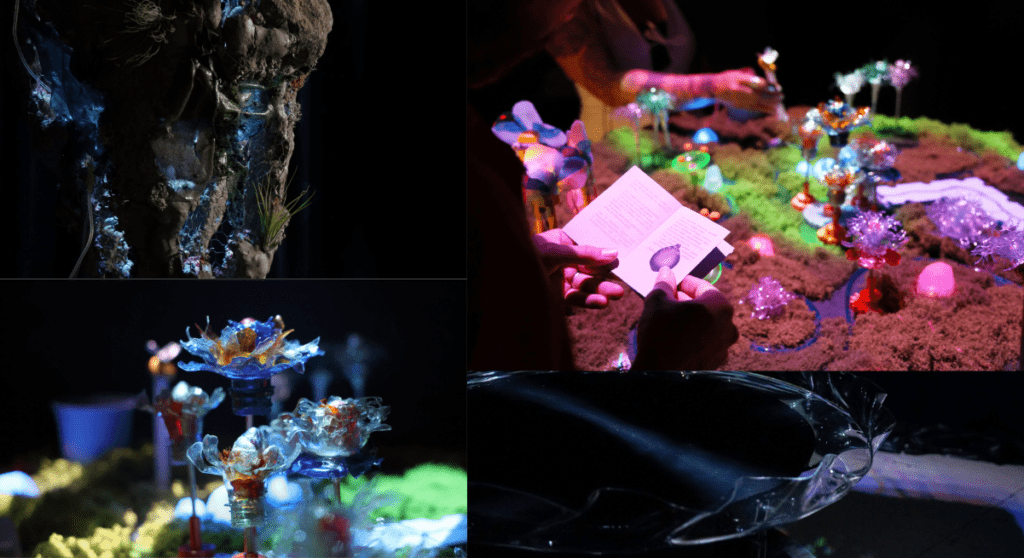

Dr. Mishra and his colleagues designed two robots: a soft, starfish-inspired walking robot and a wheeled robot. Both can be controlled using the natural electrophysiological signals produced by fungal mycelia. A specially designed electrical interface picks up these signals and lets the fungi drive the robot’s movement.

The implications of this research extend far beyond robotics. The integration of living systems with artificial actuators presents an exciting new frontier in technology, and the potential applications are vast – from environmental sensing to pollution monitoring.

How it works with Arduino

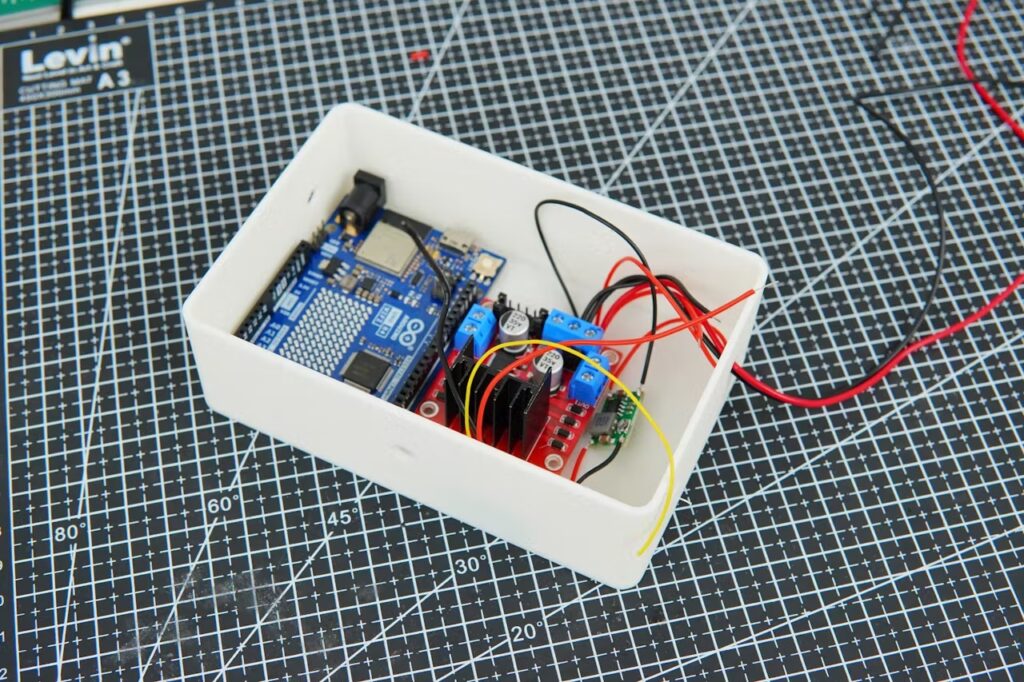

At the heart of this innovative project is the Arduino platform, which served as the main interface for controlling the robots. As Dr. Mishra explains, he has been using Arduino for over 10 years and naturally turned to it for this experiment: “My first thought was to control the robot using Arduino.” The choice was ideal in terms of accessibility, reliability, and ease of use, and it allowed for a seamless transition from prototyping with the UNO R4 WiFi to a final solution built on the Arduino Mega.

To capture and process the tiny electrical signals from the fungi, the team used a high-resolution 32-bit ADC (analog-to-digital converter) to achieve the necessary precision. “We processed each spike from the fungi and used the delay between spikes to control the robot’s movement. For example, the width of the spike determined the delay in the robot’s action, while the height was used to adjust the motor speed,” Dr. Mishra shares.
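To make the idea of measuring a spike’s width and height more concrete, here is a minimal sketch in plain C++. It is not the team’s code: the simple threshold-crossing detector, the `Spike` struct, the `findSpikes` function, and the `sampleIntervalMs` parameter are all assumptions made for illustration, and the real signal processing is likely more involved.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of one detected spike.
struct Spike {
    std::size_t start;   // index of the first sample above the threshold
    uint32_t widthMs;    // how long the signal stayed above the threshold
    int32_t height;      // largest sample value seen during the spike
};

// Assumed approach: a spike is any run of consecutive samples that
// exceed `threshold`. Samples are signed 32-bit ADC readings taken
// every `sampleIntervalMs` milliseconds.
std::vector<Spike> findSpikes(const std::vector<int32_t>& samples,
                              int32_t threshold,
                              uint32_t sampleIntervalMs) {
    assert(sampleIntervalMs > 0);
    std::vector<Spike> spikes;
    bool inSpike = false;
    Spike current{};

    for (std::size_t i = 0; i < samples.size(); ++i) {
        if (samples[i] > threshold) {
            if (!inSpike) {
                // Rising edge: start a new spike at this sample.
                inSpike = true;
                current = Spike{i, 0, samples[i]};
            }
            current.widthMs += sampleIntervalMs;
            if (samples[i] > current.height) {
                current.height = samples[i];
            }
        } else if (inSpike) {
            // Falling edge: the spike is complete.
            spikes.push_back(current);
            inSpike = false;
        }
    }
    if (inSpike) {
        spikes.push_back(current);  // spike still open at end of buffer
    }
    return spikes;
}
```

Under these assumptions, each `Spike` carries the two quantities mentioned in the quote: `widthMs` could set the delay before the robot’s next action, and `height` could feed a speed calculation.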

The team also experimented with pulse width modulation (PWM) to control the motor speed more precisely, and managed to create a system where the fungi’s spikes could increase or decrease the robot’s speed in real time. “This wasn’t easy, but it was incredibly rewarding,” says Dr. Mishra.
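As a rough illustration of how a spike’s height might be turned into a PWM motor speed, the sketch below linearly scales a height reading into an 8-bit duty cycle. The function name `heightToPwmDuty`, the linear mapping, and the `minHeight`/`maxHeight` calibration bounds are assumptions for this example and do not come from the published work.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical mapping: scale a spike height into a duty cycle of 0..255.
// Heights outside [minHeight, maxHeight] are clamped to the nearest bound.
uint8_t heightToPwmDuty(int32_t height, int32_t minHeight, int32_t maxHeight) {
    assert(maxHeight > minHeight);

    // Work in 64 bits so the subtraction and multiplication cannot overflow.
    const int64_t lo = minHeight;
    const int64_t hi = maxHeight;
    const int64_t clamped = std::max(lo, std::min<int64_t>(height, hi));

    return static_cast<uint8_t>((clamped - lo) * 255 / (hi - lo));
}
```

On an Arduino board, a value like this could be passed to `analogWrite(pin, duty)`, which accepts a duty cycle from 0 to 255 by default. A taller spike then produces a higher duty cycle and a faster motor, while the clamping keeps an unusually large reading from producing an out-of-range value.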

And it’s only the beginning. The researchers are now exploring ways to refine the signal processing and improve accuracy, again relying on Arduino’s expanding ecosystem to keep the system accessible for future scientific experiments.

All in all, this project is an exciting example of how easy-to-use, open-source, accessible technologies can enable cutting-edge research and experimentation to push the boundaries of what’s possible in the most unexpected fields – even complex biohybrid experiments! As Dr. Mishra says, “I’ve been a huge fan of Arduino for years, and it’s amazing to see how it can be used to drive advancements in scientific research.”

The post Exploring fungal intelligence with biohybrid robots powered by Arduino appeared first on Arduino Blog.