Enhancing Container Security with Docker Scout and Secure Repositories

Docker Scout simplifies the integration with container image repositories, improving the efficiency of container image approval workflows without disrupting or replacing current processes. Positioned outside the repository’s stringent validation framework, Docker Scout serves as a proactive measure to significantly reduce the time needed for an image to gain approval.

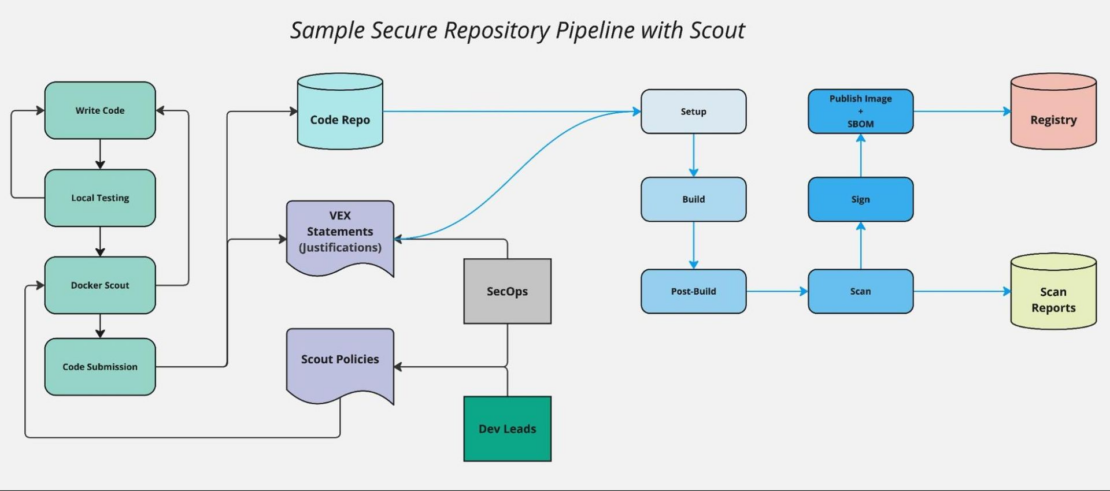

By shifting security checks left and integrating Docker Scout into the early stages of the development cycle, issues are identified and addressed directly on the developer’s machine.

Minimizing vulnerabilities

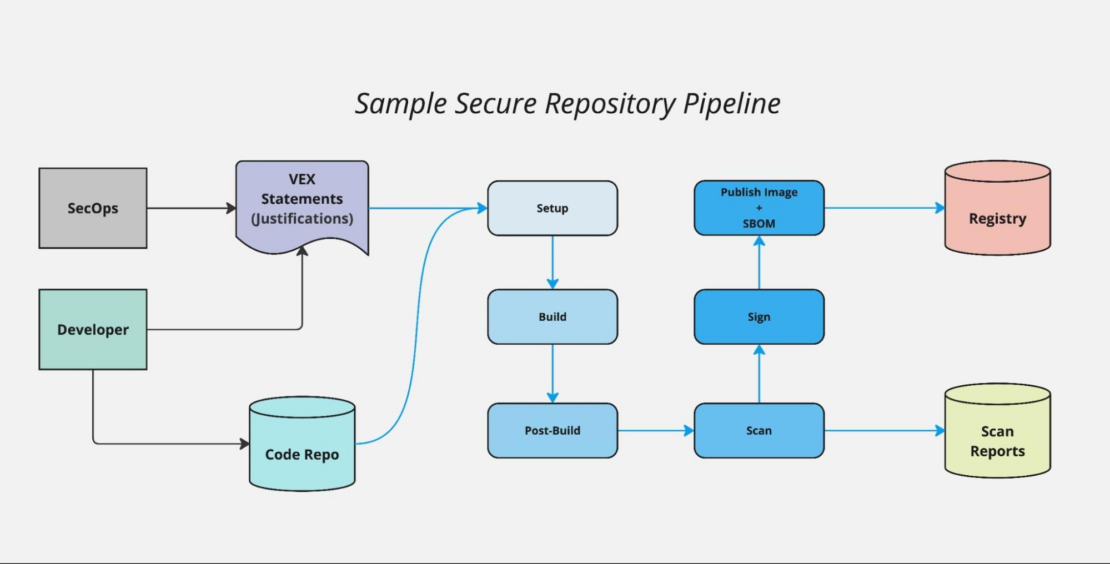

This leftward shift in security accelerates the development process by keeping developers in flow, providing immediate feedback on policy violations at the point of development. As a result, images are secured and reviewed for compliance before being pushed into the continuous integration/continuous deployment (CI/CD) pipeline, reducing reliance on resource-heavy, consumption-based scans (Figure 1). By resolving issues earlier, Docker Scout minimizes the number of vulnerabilities detected during the CI/CD process, freeing up the security team to focus on higher-priority tasks.

Additionally, the Docker Scout console lets the security team define custom security policies and manage VEX (Vulnerability Exploitability eXchange) statements. VEX is a standard that lets vendors and other parties communicate the exploitability status of vulnerabilities, so that teams can record justifications for including software that has been tied to Common Vulnerabilities and Exposures (CVEs).
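To make the idea of a VEX statement concrete, here is a minimal sketch that builds a single-statement document in the OpenVEX JSON layout. OpenVEX is assumed here as one common VEX encoding; the article does not say which encoding a given repository expects. The author name, document ID, image reference, and CVE ID are all placeholders.

```python
import json
from datetime import datetime, timezone

# Status and justification labels as defined by the OpenVEX specification.
NOT_AFFECTED = "not_affected"
NOT_IN_EXECUTE_PATH = "vulnerable_code_not_in_execute_path"


def make_vex_document(author, cve_id, product_purl, status, justification=None):
    """Build a minimal OpenVEX-style document with a single statement."""
    statement = {
        "vulnerability": {"name": cve_id},
        "products": [{"@id": product_purl}],
        "status": status,
    }
    # OpenVEX expects a justification (or an impact statement) alongside
    # a "not_affected" status; other statuses do not carry one here.
    if status == NOT_AFFECTED and justification:
        statement["justification"] = justification
    return {
        "@context": "https://openvex.dev/ns/v0.2.0",
        "@id": "https://example.com/vex/demo-1",  # hypothetical document ID
        "author": author,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "version": 1,
        "statements": [statement],
    }


doc = make_vex_document(
    author="Security Team (hypothetical)",
    cve_id="CVE-0000-00000",  # placeholder, not a real CVE
    product_purl="pkg:docker/example/app@1.0.0",  # hypothetical image
    status=NOT_AFFECTED,
    justification=NOT_IN_EXECUTE_PATH,
)
print(json.dumps(doc, indent=2))
```

The statement says, in machine-readable form, "this product contains the flagged component, but the vulnerable code is never executed", which is the kind of justification a reviewer would otherwise have to request by hand.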

This feature enables seamless collaboration between development and security teams, ensuring that developers are working with up-to-date compliance guidelines. The Docker Scout console can also feed critical data into existing security tooling, enriching the organization’s security posture with more comprehensive insights and enhancing overall protection (Figure 2).

How to secure image repositories

A secure container image repository provides digitally signed, OCI-compliant images that are rebuilt and rescanned nightly. These repositories are typically used in highly regulated or security-conscious environments, offering a wide range of container images, from open source software to commercial off-the-shelf (COTS) products. Each image in the repository undergoes rigorous security assessments to ensure compliance with strict security standards before being deployed in restricted or sensitive environments.

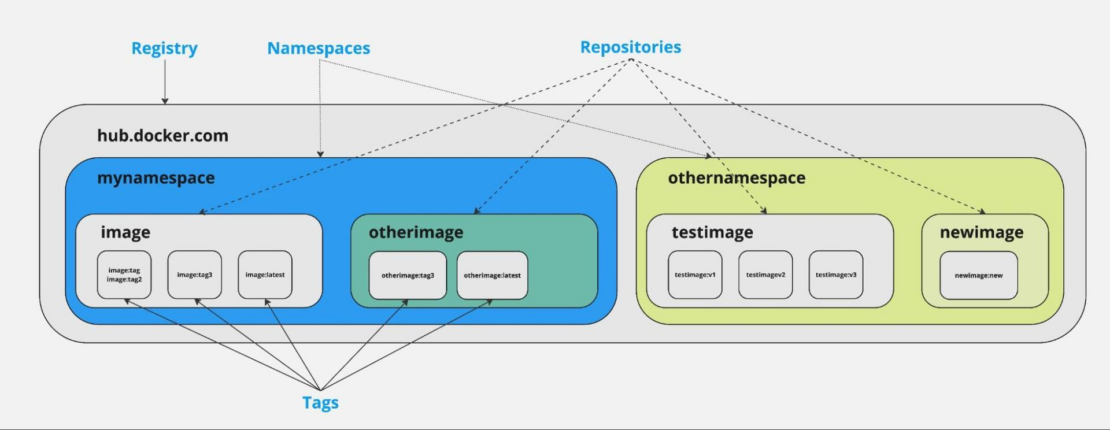

Key components of the repository include a hardened source code repository and an OCI-compliant registry (Figure 3). All images are continuously scanned for vulnerabilities, stored secrets, problematic code, and compliance with various standards. Each image is assigned a score upon rebuild, determining its compliance and suitability for use. Scanning reports and justifications for any potential issues are typically handled using the VEX format.

Why use a hardened image repository?

A hardened image repository mitigates the security risks associated with deploying containers in sensitive or mission-critical environments. Traditional software deployment can expose organizations to vulnerabilities and misconfigurations that attackers can exploit. By enforcing a strict set of requirements for container images, the hardened image repository ensures that images meet the necessary security standards before deployment. Rebuilding and rescanning each image daily allows for continuous monitoring of new vulnerabilities and emerging attack vectors.

Using pre-vetted images from a hardened repository also streamlines the development process, reducing the load on development teams and enabling faster, safer deployment.

In addition to addressing security risks, the repository supports software supply chain security by including a software bill of materials (SBOM) with each image. The SBOM of a container image provides an inventory of all the components used to build the image, including operating system packages, application-specific dependencies with their versions, and license information. By maintaining a robust vetting process, the repository helps ensure that all software components are traceable, verifiable, and tamper-free, which is essential for the integrity and reliability of deployed software.
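As a rough illustration of what such an inventory looks like in practice, the sketch below reads a heavily trimmed SBOM in the CycloneDX JSON layout and lists each component with its version and license. CycloneDX is an assumption made for the example (SPDX is another common format), and the sample document is hand-written, not output from any real image.

```python
import json

# Hypothetical, heavily trimmed CycloneDX-style SBOM for illustration only.
SAMPLE_SBOM = json.loads("""
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "openssl", "version": "3.0.13",
     "licenses": [{"license": {"id": "Apache-2.0"}}]},
    {"type": "library", "name": "requests", "version": "2.31.0",
     "licenses": [{"license": {"id": "Apache-2.0"}}]},
    {"type": "library", "name": "internal-tool", "version": "0.1.0"}
  ]
}
""")


def inventory(sbom):
    """Return (name, version, licenses) tuples sorted by component name."""
    rows = []
    for component in sbom.get("components", []):
        licenses = [
            entry["license"].get("id") or entry["license"].get("name", "unknown")
            for entry in component.get("licenses", [])
            if "license" in entry
        ]
        # Components without license data are reported as "unknown".
        rows.append((component["name"], component.get("version", "?"), licenses or ["unknown"]))
    return sorted(rows)


for name, version, licenses in inventory(SAMPLE_SBOM):
    print(f"{name} {version} ({', '.join(licenses)})")
```

A component that shows up with an unknown license, like the hypothetical `internal-tool` here, is exactly the kind of gap a vetting process would want to surface before submission.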

Who uses a hardened image repository?

The main users of a hardened container image repository include internal developers responsible for creating applications, developers working on utility images, and those responsible for building base images for other containerized applications. Note that the titles for these roles can vary by organization.

- Application developers use the repository to ensure that the images their applications are built upon meet the required security and compliance standards.

- DevOps engineers are responsible for building and maintaining the utility images that support various internal operations within the organization.

- Platform developers create and maintain secure base images that other teams can use as a foundation for their containerized applications.

Daily builds

One challenge with using a hardened image repository is the time needed to approve images. Daily rebuilds are conducted to assess each image for vulnerabilities and policy violations, but issues can emerge, requiring developers to make repeated passes through the pipeline. Because rebuilds are typically done at night, this process can result in delays for development teams, as they must wait for the next rebuild cycle to resolve issues.

Enter Docker Scout

Integrating Docker Scout into the pre-submission phase can reduce the number of issues that enter the pipeline. This proactive approach helps speed up the submission and acceptance process, allowing development teams to catch issues before the nightly scans.

Vulnerability detection and management

- Requirement: Images must be free of known vulnerabilities at the time of submission to avoid delays in acceptance.

- Docker Scout contribution:

  - Early detection: Docker Scout can scan Docker images during development to detect vulnerabilities early, allowing developers to resolve issues before submission.

  - Continuous analysis: Docker Scout continually reviews uploaded SBOMs, providing early warnings for new critical CVEs and ensuring issues are addressed outside of the nightly rebuild process.

  - Justification handling: Docker Scout supports VEX for handling exceptions. This can streamline the justification process, enabling developers to submit justifications for potential vulnerabilities more easily.
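The early-detection step above can be sketched as a small wrapper around the Docker Scout CLI. This is a sketch under assumptions: it relies on the `docker scout cves` subcommand with the `--only-severity` and `--exit-code` flags as documented for the CLI at the time of writing, so check them against your installed version. The image tag is hypothetical.

```python
import subprocess


def build_scan_command(image, severities=("critical", "high")):
    """Assemble a `docker scout cves` invocation for a local image."""
    return [
        "docker", "scout", "cves",
        "--only-severity", ",".join(severities),
        "--exit-code",  # ask the CLI for a non-zero exit when findings exist
        image,
    ]


def scan(image):
    """Run the scan and report whether the image came back clean."""
    result = subprocess.run(build_scan_command(image), check=False)
    return result.returncode == 0


if __name__ == "__main__":
    # "example/app:dev" is a hypothetical local tag; this only prints the
    # command so the sketch can be read without Docker installed.
    print(" ".join(build_scan_command("example/app:dev")))
```

Keeping the command construction separate from the `subprocess` call makes it easy to reuse the same invocation in a pre-commit hook or a local build script.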

Security best practices and configuration management

- Requirement: Images must follow security best practices and configuration guidelines, such as using secure base images and minimizing the attack surface.

- Docker Scout contribution:

  - Security posture enhancement: Docker Scout allows teams to set policies that align with repository guidelines, checking for policy violations such as disallowed software or unapproved base images.
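The kind of rule such a policy expresses can be shown with a small standalone check. This is not Docker Scout's policy engine or data model; `ImageFacts`, `Policy`, and the registry and package names are hypothetical, chosen only to show what "unapproved base image" and "disallowed software" mean as concrete tests.

```python
from dataclasses import dataclass, field


@dataclass
class ImageFacts:
    """Hypothetical summary of an image; not a Docker Scout data structure."""
    base_image: str
    packages: set = field(default_factory=set)


@dataclass
class Policy:
    """Hypothetical policy: allowed base images and banned package names."""
    approved_bases: set
    disallowed_packages: set


def violations(image, policy):
    """Return human-readable policy violations for one image."""
    found = []
    if image.base_image not in policy.approved_bases:
        found.append(f"unapproved base image: {image.base_image}")
    # Sort so the report is stable from run to run.
    for name in sorted(image.packages & policy.disallowed_packages):
        found.append(f"disallowed package: {name}")
    return found


policy = Policy(
    approved_bases={"registry.example.com/hardened/base:9"},
    disallowed_packages={"telnet", "netcat"},
)
image = ImageFacts("docker.io/library/ubuntu:22.04", {"curl", "telnet"})
print(violations(image, policy))
```

An empty result means the image passes both rules; anything else is feedback a developer can act on before submitting the image.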

Compliance with dependency management

- Requirement: All dependencies must be declared, and internet access during the build process is usually prohibited.

- Docker Scout contribution:

  - Dependency scanning: Docker Scout identifies outdated or vulnerable libraries included in the image.

  - Automated reports: Docker Scout generates security reports for each dependency, which can be used to cross-check the repository’s own scanning results.
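Cross-checking two sets of scan results comes down to a set comparison on vulnerability IDs. The sketch below assumes both reports have already been reduced to lists of IDs; how those lists are extracted from each tool's report is left out, and the IDs shown are placeholders.

```python
def cross_check(scout_cves, repository_cves):
    """Compare two scanners' CVE ID lists and report where they disagree."""
    scout, repo = set(scout_cves), set(repository_cves)
    return {
        "both": sorted(scout & repo),
        "only_scout": sorted(scout - repo),
        "only_repository": sorted(repo - scout),
    }


# Placeholder IDs, not real CVEs.
report = cross_check(
    scout_cves=["CVE-A", "CVE-B", "CVE-C"],
    repository_cves=["CVE-B", "CVE-C", "CVE-D"],
)
print(report)
```

Findings that appear in only one list are the ones worth a closer look, since they may point to a stale database, a differing severity threshold, or a justification that one side has not yet picked up.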

Documentation and provenance

- Requirement: Images must include detailed documentation on their build process, dependencies, and configurations for auditing purposes.

- Docker Scout contribution:

  - Documentation support: Docker Scout contributes to security documentation by providing data on the scanned image, which can be used as part of the official documentation submitted with the image.

Continuous compliance

- Requirement: Even after an image is accepted into the repository, it must remain compliant with new security standards and vulnerability disclosures.

- Docker Scout contribution:

  - Ongoing monitoring: Docker Scout continuously monitors images, identifying new vulnerabilities as they emerge, ensuring that images in the repository remain compliant with security policies.
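Ongoing monitoring does not have to wait for a rebuild because a stored component inventory can be checked against each new advisory as it is published. The following is a simplified sketch of that idea, not Docker Scout's implementation; the image names, package names, and versions are hypothetical, and versions are assumed to be plain dotted numbers.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '1.2.10' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def affected_images(stored_sboms, package, fixed_in):
    """List images whose stored inventory has `package` below `fixed_in`."""
    threshold = parse_version(fixed_in)
    hits = []
    for image, components in stored_sboms.items():
        version = components.get(package)
        if version is not None and parse_version(version) < threshold:
            hits.append(image)
    return sorted(hits)


# Hypothetical stored inventories: image name -> {package: version}.
STORED = {
    "example/api:1.4": {"libfoo": "2.3.1", "libbar": "1.0.0"},
    "example/web:2.0": {"libfoo": "2.4.0"},
    "example/worker:0.9": {"libbar": "1.0.0"},
}

# A new advisory says libfoo is fixed in 2.4.0.
print(affected_images(STORED, "libfoo", fixed_in="2.4.0"))  # ['example/api:1.4']
```

Only the image that ships the older `libfoo` is flagged, and no image had to be rebuilt or rescanned to find that out.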

By utilizing Docker Scout in these areas, developers can ensure their images meet the repository’s rigorous standards, thereby reducing the time and effort required for submission and review. This approach helps align development practices with organizational security objectives, enabling faster deployment of secure, compliant containers.

Integrating Docker Scout into the CI/CD pipeline

Integrating Docker Scout into an organization’s CI/CD pipeline can enhance image security from the development phase through to deployment. By incorporating Docker Scout into the CI/CD process, the organization can automate vulnerability scanning and policy checks before images are pushed into production, significantly reducing the risk of deploying insecure or non-compliant images.

- Integration with build pipelines: During the build stage of the CI/CD pipeline, Docker Scout can be configured to automatically scan Docker images for vulnerabilities and adherence to security policies. The integration typically involves adding a Docker Scout scan as a step in the build job, for example through a GitHub Action. If Docker Scout detects issues such as outdated dependencies, vulnerabilities, or policy violations, the build can be halted and feedback provided to developers immediately. This early detection helps resolve issues long before images are pushed to the hardened image repository.

- Validation in the deployment pipeline: As images move from development to production, Docker Scout can be used to perform final validation checks. This step ensures that any security issues that might have arisen since the initial build have been addressed and that the image is compliant with the latest security policies. The deployment process can be gated based on Docker Scout’s reports, preventing insecure images from being deployed. Additionally, Docker Scout’s continuous analysis of SBOMs means that even after deployment, images can be monitored for new vulnerabilities or compliance issues, providing ongoing protection throughout the image lifecycle.
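The gating described in both steps reduces to a pass or fail decision over a list of findings. Here is a minimal sketch of that decision, assuming findings have already been parsed into simple dictionaries with `id` and `severity` keys. That input shape, the severity ranking, and the finding IDs are hypothetical and do not reflect Docker Scout's report format.

```python
# Severity ranking used only by this sketch.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings, fail_at="high"):
    """Return (passed, blocking) for a list of {'id', 'severity'} findings."""
    threshold = SEVERITY_RANK[fail_at]
    # Unknown severities rank as 0 and therefore never block.
    blocking = [f for f in findings if SEVERITY_RANK.get(f["severity"], 0) >= threshold]
    return (not blocking, blocking)


def main(findings):
    """Print blocking findings and return a process exit code (0 = pass)."""
    passed, blocking = gate(findings)
    for finding in blocking:
        print(f"blocking: {finding['id']} ({finding['severity']})")
    return 0 if passed else 1


# Placeholder findings for illustration.
findings = [
    {"id": "F-1", "severity": "low"},
    {"id": "F-2", "severity": "critical"},
]
exit_code = main(findings)
```

In a pipeline step, the script would end with `sys.exit(exit_code)` so that a non-zero result halts the build or blocks the deployment.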

By embedding Docker Scout directly into the CI/CD pipeline (Figure 1), the organization can maintain a proactive approach to security, shifting left in the development process while ensuring that each image deployed is safe, compliant, and up to date.

Defense in depth and Docker Scout’s role

In any organization that values security, adopting a defense-in-depth strategy is essential. Defense in depth is a multi-layered approach to security, ensuring that if one layer of defense is compromised, additional safeguards are in place to prevent or mitigate the impact. This strategy is especially important in environments that handle sensitive data or mission-critical operations, where even a single vulnerability can have significant consequences.

Docker Scout plays a vital role in this defense-in-depth strategy by providing a proactive layer of security during the development process. Rather than relying solely on post-submission scans or production monitoring, Docker Scout integrates directly into the development and CI/CD workflows (Figure 2), allowing teams to catch and resolve security issues early. This early detection prevents issues from escalating into more significant risks later in the pipeline, reducing the burden on the SecOps team and speeding up the deployment process.

Furthermore, Docker Scout’s continuous monitoring capabilities mean that images are not only secure at the time of deployment but remain compliant with evolving security standards and new vulnerabilities that may arise after deployment. This ongoing vigilance forms a crucial layer in a defense-in-depth approach, ensuring that security is maintained throughout the entire lifecycle of the container image.

By integrating Docker Scout into the organization’s security processes, teams can build a more resilient, secure, and compliant software environment, ensuring that security is deeply embedded at every stage from development to deployment and beyond.

Learn more

- Get started with Docker Scout.
