Reiser5 Would Be Turning Five Years Old But Remains Dead

Pre-Content fanotify / fanotify Hierarchical Storage Management Expected For Linux 6.14

Hash-Based Integrity Checking Proposed For Linux To Help With Reproducible Builds

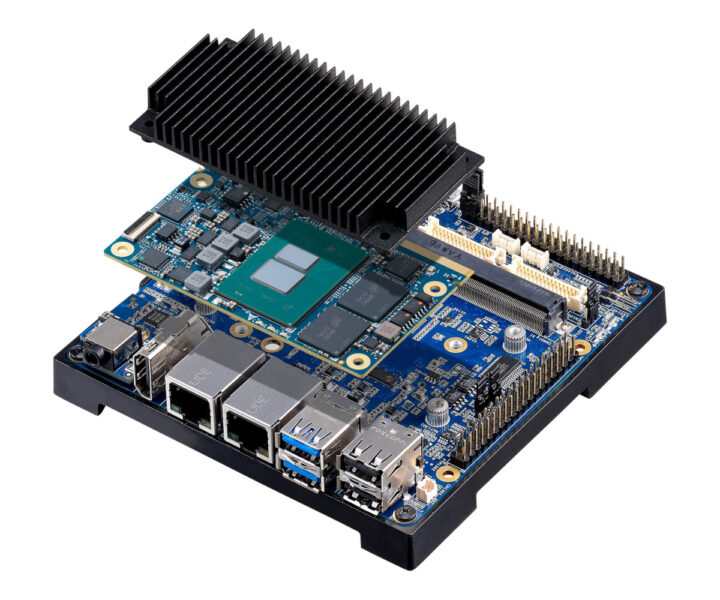

I-Pi SMARC Amston Lake development kit features Intel Atom x7433RE SoC, 8GB LPDDR5, two Raspberry Pi GPIO headers

ADLINK’s I-Pi SMARC Amston Lake is a fanless development kit based on a SMARC 2.1-compliant system-on-module with an Intel Atom x7433RE quad-core SoC, 8GB LPDDR5 memory, and up to 256GB eMMC flash, plus a carrier board with dual 2.5GbE with TSN, two Raspberry Pi-compatible GPIO headers, and a range of other interfaces. Those include 4-lane MIPI DSI, HDMI, eDP, and dual-channel LVDS display interfaces, two MIPI CSI camera interfaces, a 3.5mm audio jack, four USB Type-A ports, and three PCIe M.2 sockets for storage, wireless, and cellular connectivity.

I-Pi SMARC Amston Lake devkit specifications:

- LEC-ASL SMARC 2.1 module
- Amston Lake SoC – Intel Atom x7433RE quad-core processor with 6MB cache, 32EU Intel UHD graphics; 9W TDP
- System Memory – 8GB LPDDR5
- Storage – 32GB to 256GB eMMC flash
- Host interface – 314-pin MXM edge connector
- Storage – 1x SATA III (6 Gbps)
- Display – Dual-channel 18-/24-bit LVDS
- Camera – 2-lane MIPI CSI, [...]

The post I-Pi SMARC Amston Lake development kit features Intel Atom x7433RE SoC, 8GB LPDDR5, two Raspberry Pi GPIO headers appeared first on CNX Software - Embedded Systems News.

2024 reCamera reCap – AI Camera Growing on the Way

Dear All,

It’s been four months since our tiny AI superstar, reCamera, first stepped into the spotlight in our developer community. From its humble beginnings to the milestones we’ve achieved together, reCamera has become a trusted companion, continually inspiring creativity and innovation in edge AI and robotics. Now, as we look back at the journey so far, it’s the perfect moment to revisit how it all started, celebrate the progress we’ve made, and explore the exciting possibilities that await in the next chapter!

Have you heard about reCamera? What makes it unique?

reCamera comes with its own processor and camera sensor. It’s the first open-source, tiny AI camera that is fully programmable and customizable, powered by a RISC-V SoC that delivers 1 TOPS of AI performance and 5MP@30fps video encoding. The modular hardware design lets you swap in different camera sensors and baseboards with different interfaces to suit your requirements, offering the most versatile platform for developers tackling vision AI systems.

Hardware checklist

- Core board: CPU, RAM, 8GB/64GB eMMC, and a wireless module with a customized on-board antenna.

- Sensor board: currently compatible with the OV5647/IMX335/SC130GS camera sensors, with support for more on the way, along with other components: microphone, speaker, actuator, and LED.

- Base board: determines the communication ports on reCamera’s bottom. USB 2.0, Ethernet, and a serial port are included by default, and it can be customized as you want: PoE/CAN/RS485/Display/Gyro/Type-C/vertical Type-C, etc.

- The core board is covered by a metal frame, with a rubber ring fitted inside the grooves for waterproofing, and excellent thermal management keeps temperatures below 50℃.

OS & Dashboard

Well, the first and most important thing to know about reCamera is that it’s already a computer. It runs Buildroot, a lightweight, customized Linux system with multi-threading, so it can run all your tasks without worrying about conflicts. It supports Python and Node.js directly from the console, and you can easily deploy compiled executables written in C or Rust, so it’s very programming-friendly.

Just in case you’d prefer to skip programming scripts and complex configurations, we’ve pre-built Node-RED integration so you can build your whole workflow with NO-CODE. Getting started is simple: choose the customized reCamera nodes for your pipeline and link them together to call the camera API and use the NPU to load AI models directly onto the device. Finally, a web UI or mobile dashboard shows the output seamlessly, so you can verify results effortlessly.

What we’ve done so far

Ultralytics & reCamera

Besides the standard reCamera, a standalone device that combines the hardware with Node-RED integration, we also offer another option for building your vision AI project even more seamlessly: reCamera pre-installed with the Ultralytics YOLO11 model (YOLO11n)! It comes with native support and licensing for Ultralytics YOLO, offering real-world solutions such as object counting, heatmaps, blurring, and security systems that improve efficiency and accuracy across diverse industries.
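Object counting is usually built on line crossing: a tracker gives each object an ID, and the count goes up when that object's centroid moves from one side of a virtual line to the other. Ultralytics ships its own counting solution; the sketch below is only a hypothetical, library-free illustration of the idea, assuming a tracker already supplies per-frame `(track_id, cx, cy)` centroids in image coordinates (y grows downward). `LineCounter` is an illustrative name, not part of any reCamera or Ultralytics API.

```python
from dataclasses import dataclass, field


@dataclass
class LineCounter:
    """Hypothetical counter for tracked objects crossing the horizontal line y = line_y."""
    line_y: float
    last_y: dict = field(default_factory=dict)  # track_id -> centroid y in the previous frame
    count_down: int = 0  # crossings from above the line to on/below it
    count_up: int = 0    # crossings from on/below the line to above it

    def update(self, detections):
        """detections: iterable of (track_id, cx, cy) for the current frame."""
        for track_id, _cx, cy in detections:
            prev = self.last_y.get(track_id)
            if prev is not None:
                if prev < self.line_y <= cy:
                    self.count_down += 1
                elif prev >= self.line_y > cy:
                    self.count_up += 1
            self.last_y[track_id] = cy


counter = LineCounter(line_y=240)
frames = [
    [(1, 100, 200), (2, 300, 300)],  # object 1 above the line, object 2 below it
    [(1, 105, 250), (2, 302, 230)],  # both cross, in opposite directions
    [(1, 110, 260)],                 # object 1 keeps moving down, no new crossing
]
for detections in frames:
    counter.update(detections)
print(counter.count_down, counter.count_up)  # 1 1
```

A production counter would also forget track IDs that have not been seen for a while, so the `last_y` dictionary does not grow without bound.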

Application demos

1. reCamera voice control gimbal: we deployed Llama3 and LLaVA on a reComputer Jetson Orin. The whole setup runs fully locally: the Jetson reads live video streamed from reCamera to build a basic perception of the current scene, then acts as the “brain” that reasons and sends instructions back. Now you can ask reCamera to turn left, check how many people are there, and describe what it sees!

2. reCamera with Wi-Fi HaLow: We’ve tested reCamera with Wi-Fi HaLow long-range connectivity and achieved a stable link at straight-line distances of up to 1 km!

3. Live-check reCamera detection results through any browser: With pre-built Node-RED for on-device workflow configuration, you can quickly build and modify your applications and view the video streams on various platforms.

4. reCamera with LeRobot arm: We used reCamera to scan ArUco markers to identify specific objects and utilized the ROS architecture to control the robotic arm.

Milestone – ready to see you in the real world!

July: prototype in reCamera gimbal

August: first appearance in Seeed’s “Making Next Gadget” livestream

September: reCamera introduced to the world

October: unboxing

November: first batch shipping on the way

December: “gimbal” bells coming – reCamera gimbal alpha test

Where our reCamera has traveled

- California, US – KHacks0.2 humanoid robotics hackathon 12/14-15

- Shanghai, China – ROSCON China 2024 12/08

- Barcelona, Spain – Smart City Expo World Congress 2024 11/05-07

- California, US – MakerFaire Bay Area 10/18-20

- Madrid, Spain – YOLO Vision 2024 09/27

Wiki & GitHub resources

Seeed Wiki:

- beginner guide – getting started

- Interfaces usage

- Model conversion from other frameworks to ONNX format

- Network connection – troubleshooting when a USB-connected computer fails to recognize reCamera during network configuration

Seeed GitHub:

- reCamera hardware: all hardware design references

- reCamera OS: how to flash it and build on it

We appreciate these community contributions to reCamera resources from our Discord group:

- chilu – get started with reCamera on Node-RED: how to connect to VS Code

- chilu – how the MQTT code works with reCamera

- chilu – video stream processor with reCamera

Listening to users: Alpha test reviews

Based on insights from the Alpha test and feedback during the official product launch, we’ve gained invaluable input from our developer community. Many of you have voiced specific requests, such as waterproofing the entire device and integrating near-infrared (NIR), thermal imaging, and night vision camera sensors with reCamera. These features are crucial, and we’re committed to diving deeper into their development to bring them to life. Your enthusiasm and support mean the world to us. Thank you for being part of this journey.

Some adorable moments~

In addition, we’ve selected four Alpha testers to explore fresh ideas and contribute to hardware iterations by trying out the reCamera gimbal. Stay tuned for our updates, and continue to join us as we embark on this exciting path of growth and innovation!

Warm Regards,

AI Robotics Team @ Seeed Studio

The post 2024 reCamera reCap – AI Camera Growing on the Way appeared first on Latest Open Tech From Seeed.

Different Types of Career Goals for Computer Vision Engineers

Computer vision is one of the most exciting fields in AI, offering a range of career paths from technical mastery to leadership and innovation. This article outlines the key types of career goals for computer vision engineers—technical skills, research, project management, niche expertise, networking, and entrepreneurship—and provides actionable tips to help you achieve them.

Diverse Career Goals for Aspiring Computer Vision Engineers

Setting goals is about identifying where you want to go and creating a roadmap. Each of these paths can take your career in exciting directions.

Here are the key types of career goals for computer vision engineers:

- Technical Proficiency Goals: Building expertise in the tools and technologies needed to solve real-world problems.

- Research and Development Goals: Innovating and contributing to cutting-edge advancements in the field.

- Project and Product Management Goals: Taking the lead in driving projects and delivering impactful solutions.

- Industry Specialization Goals: Developing niche expertise in areas like healthcare, autonomous systems, or robotics.

- Networking and Community Engagement Goals: Growing your professional network and staying connected with industry trends.

- Entrepreneurial and Business Goals: Combining technical skills with business insights to start your own ventures.

Technical Proficiency Goals: Mastering Essential Skills

Learn Programming Languages Essential for Computer Vision

- Python: The most popular programming language for AI and computer vision, thanks to its simplicity and a rich ecosystem of libraries and frameworks like OpenCV, TensorFlow, and PyTorch.

- C++: Widely used in performance-critical applications such as robotics and real-time systems. Many industry-grade computer vision libraries, including OpenCV, are written in C++.

Tip: Combine Python’s ease of use with C++ for optimized and efficient solutions. For instance, you can prototype in Python and deploy optimized models in C++ for edge devices.

Master Frameworks for Machine Learning and Deep Learning

- PyTorch: Known for its flexibility and Pythonic style, PyTorch is ideal for experimenting with custom neural networks.

A notable example is OpenAI, the company behind ChatGPT, which announced in 2020 that it was standardizing on PyTorch as its deep learning framework.

- TensorFlow: Preferred for production environments, TensorFlow excels in scalability and deployment, especially on cloud-based platforms like AWS or Google Cloud.

- Hugging Face Transformers: For those exploring multimodal or natural language-driven computer vision tasks, such as those built on CLIP, Hugging Face offers state-of-the-art model checkpoints and easy-to-use implementations.

Example: Learning PyTorch enables you to implement advanced architectures like YOLOv8 for object detection or Vision Transformers (ViT) for image classification. TensorFlow can then help you scale and deploy these models in production.

Tip: Practice by recreating famous models like ResNet or UNet using PyTorch or TensorFlow.

Gain Expertise in Image Processing Techniques

Understanding image processing is critical for tasks like data pre-processing, feature extraction, and real-time applications. Focus on:

- Feature Detection and Description: Study techniques like SIFT, ORB, and HOG for tasks such as image matching or object tracking.

- Edge Detection: Techniques like Canny or Sobel filters are foundational for many computer vision workflows.

- Fourier Transforms: Learn how frequency-domain analysis can be applied to denoise images or detect repeating patterns.
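To make the Edge Detection bullet concrete, here is a small Sobel sketch. It assumes a grayscale image stored as a list of rows and leaves border pixels at zero; `sobel_magnitude` is a hypothetical helper name, and in practice you would reach for OpenCV's `cv2.Sobel` rather than pure-Python loops.

```python
import math


def sobel_magnitude(img):
    """Return the Sobel gradient magnitude of a grayscale image (list of rows).

    Kernels are applied as cross-correlation, as most image libraries do; the
    resulting sign convention does not affect the magnitude. Border pixels stay 0.
    """
    h, w = len(img), len(img[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to horizontal intensity changes
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to vertical intensity changes
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(-1, 2):
                for dx in range(-1, 2):
                    p = img[y + dy][x + dx]
                    gx += kx[dy + 1][dx + 1] * p
                    gy += ky[dy + 1][dx + 1] * p
            out[y][x] = math.hypot(gx, gy)
    return out


# A 5x5 image with a vertical step edge between columns 1 and 2.
img = [[0, 0, 255, 255, 255] for _ in range(5)]
mag = sobel_magnitude(img)
for row in mag:
    print([round(v) for v in row])
# Interior rows print [0, 1020, 1020, 0, 0]: the response peaks on both sides of the edge.
```

Canny builds on exactly this gradient step, adding smoothing, non-maximum suppression, and hysteresis thresholding.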

Example: Combining OpenCV’s edge detection with PyTorch’s neural networks allows you to design hybrid systems that are both efficient and robust, like automating inspection tasks in manufacturing.

Tip: Start with OpenCV, a comprehensive library for image processing. It’s an excellent tool to build a strong foundation before moving on to deep learning-based methods.
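The Fourier Transforms bullet above describes frequency-domain denoising. A minimal, dependency-free sketch on a 1D signal follows; images apply the same idea in 2D (for example with `numpy.fft.fft2`). The naive O(n²) DFT and the helper names `dft`, `idft`, and `low_pass` are illustrative only, and real code would use an FFT.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    """Inverse DFT, including the 1/n normalisation."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def low_pass(x, keep):
    """Zero every frequency bin more than `keep` steps from DC, then transform back."""
    n = len(x)
    X = dft(x)
    for k in range(n):
        if min(k, n - k) > keep:  # bins k and n-k represent the same absolute frequency
            X[k] = 0
    return [v.real for v in idft(X)]


n = 16
clean = [math.sin(2 * math.pi * t / n) for t in range(n)]      # slow signal: bins 1 and 15
noisy = [c + 0.3 * (-1) ** t for t, c in enumerate(clean)]      # fast noise: bin 8
denoised = low_pass(noisy, keep=2)
print(max(abs(a - b) for a, b in zip(denoised, clean)))  # a value very close to 0.0
```

Because the noise here sits entirely in the highest-frequency bin, zeroing that bin recovers the clean signal up to floating-point error; real noise is spread across frequencies, so a low-pass filter also blurs fine detail.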

Get Familiar with Emerging Technologies and Trends

Staying updated is critical for growth in computer vision. Keep an eye on:

- Transformers for Vision Tasks: Vision Transformers (ViT) and hybrid approaches like Swin Transformers are changing image classification and segmentation.

- Self-Supervised Learning: Methods like DINO and MAE are redefining how models learn meaningful representations without extensive labeled data.

- 3D Vision and Depth Sensing: Techniques like 3D Gaussian Splatting and NeRF (Neural Radiance Fields), along with LiDAR-based solutions, are becoming essential in fields like AR/VR and autonomous systems.
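The first two trends above share a simple data step: a Vision Transformer cuts an image into fixed-size patches and treats each flattened patch as a token, and MAE hides a large random subset of those patches (75% by default in the MAE paper) so the model must reconstruct them. A minimal sketch, assuming a grayscale image stored as nested lists; `patchify` and `random_mask` are hypothetical helper names, and the linear patch embedding and transformer layers are omitted.

```python
import random


def patchify(img, p):
    """Split an H x W image into non-overlapping p x p patches in raster order,
    flattening each patch row-major into one vector (one token per patch)."""
    h, w = len(img), len(img[0])
    assert h % p == 0 and w % p == 0, "image size must be divisible by the patch size"
    patches = []
    for py in range(0, h, p):
        for px in range(0, w, p):
            patches.append([img[py + dy][px + dx] for dy in range(p) for dx in range(p)])
    return patches


def random_mask(num_patches, mask_ratio, rng):
    """MAE-style random masking: return (visible_ids, masked_ids)."""
    ids = list(range(num_patches))
    rng.shuffle(ids)
    n_keep = int(num_patches * (1 - mask_ratio))
    return sorted(ids[:n_keep]), sorted(ids[n_keep:])


img = [[y * 8 + x for x in range(8)] for y in range(8)]  # 8x8 toy image
patches = patchify(img, 4)
visible, masked = random_mask(len(patches), 0.75, random.Random(0))
print(len(patches), len(patches[0]))  # 4 16
print(len(visible), len(masked))      # 1 3
```

In MAE only the visible patches go through the encoder, which is a large part of why its pre-training is comparatively cheap.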

Example: While NeRF models have demonstrated incredible potential in generating photorealistic 3D scenes from 2D images, 3D Gaussian Splatting has emerged as a faster and more efficient alternative, making it a popular choice for many applications, particularly those that need real-time rendering. Specializing in 3D vision can open doors to industries like gaming, AR/VR, and metaverse development.
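Both NeRF and 3D Gaussian Splatting produce a pixel by front-to-back alpha compositing along a camera ray: each sample contributes its color weighted by its own opacity times the transmittance (the fraction of light not yet absorbed). In NeRF the opacity comes from density and step size, α_i = 1 − exp(−σ_i δ_i); Gaussian Splatting instead derives α from projected 2D Gaussians but blends the same way. A minimal single-ray sketch, where `composite_ray` is a hypothetical name and all sample values are made up:

```python
import math


def composite_ray(sigmas, deltas, colors):
    """Front-to-back NeRF-style volume rendering along one ray.

    sigmas: volume density per sample; deltas: distance to the next sample;
    colors: (r, g, b) per sample. Returns (rgb, remaining transmittance).
    """
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for sigma, delta, c in zip(sigmas, deltas, colors):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution T_i * alpha_i
        for ch in range(3):
            rgb[ch] += weight * c[ch]
        transmittance *= 1.0 - alpha            # light that survives past this sample
    return rgb, transmittance


# An empty (blue) sample in front of a dense red one: the pixel comes out almost pure red.
rgb, t_left = composite_ray(sigmas=[0.0, 50.0], deltas=[0.1, 0.1],
                            colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)])
print([round(v, 4) for v in rgb], round(t_left, 4))  # [0.9933, 0.0, 0.0] 0.0067
```

Because every step is differentiable, the same computation lets gradients flow from pixel errors back to densities, colors, or Gaussian parameters during training.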

Tip: Participate in Kaggle competitions to practice applying these emerging technologies to real-world problems.

Develop Cross-Disciplinary Knowledge

Computer vision often overlaps with other domains like natural language processing (NLP) and robotics. Developing cross-disciplinary knowledge can make you more versatile:

- CLIP: A model that combines vision and language, enabling tasks like zero-shot classification or image-text retrieval.

- SLAM (Simultaneous Localization and Mapping): Essential for robotics and AR/VR, where understanding the spatial environment is key.

Example: Using CLIP for a multimodal project like creating a visual search engine for retail can showcase your ability to work across disciplines.
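Both CLIP tasks mentioned above reduce to comparing embeddings. Zero-shot classification scores an image embedding against text embeddings of prompts such as "a photo of a shoe" and applies a softmax; a visual search engine ranks catalog embeddings by the same cosine similarity. In the sketch below the 3-dimensional vectors are hypothetical stand-ins for real CLIP embeddings (which come from the model's image and text encoders), and the temperature of 100 is an assumption roughly matching CLIP's learned logit scale.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot(image_emb, label_embs, temperature=100.0):
    """CLIP-style zero-shot classification: softmax over scaled cosine similarities."""
    logits = {label: temperature * cosine(image_emb, emb) for label, emb in label_embs.items()}
    m = max(logits.values())
    exps = {label: math.exp(v - m) for label, v in logits.items()}  # subtract max for stability
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def search(query_emb, catalog, k=2):
    """Visual search: return the k catalog items most similar to the query embedding."""
    return sorted(catalog, key=lambda name: cosine(query_emb, catalog[name]), reverse=True)[:k]


# Toy vectors standing in for real CLIP embeddings (hypothetical values).
query = [0.8, 0.2, 0.1]
labels = {"a photo of a shoe": [0.9, 0.1, 0.0], "a photo of a bag": [0.1, 0.9, 0.1]}
probs = zero_shot(query, labels)
print(max(probs, key=probs.get))  # a photo of a shoe

catalog = {"sneaker": [0.85, 0.15, 0.05], "tote": [0.1, 0.95, 0.0], "boot": [0.7, 0.1, 0.3]}
print(search(query, catalog))  # ['sneaker', 'boot']
```

At catalog scale, the linear scan in `search` would typically be replaced by an approximate nearest-neighbor index.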

Research and Development Goals: Innovating Through Research

In computer vision, research drives breakthroughs that power new applications and technologies. Whether you’re working in academia or industry, contributing to cutting-edge advancements can shape your career and position you as an innovator. Here’s how to set impactful research and development goals:

Contribute to Groundbreaking Studies

- Collaborate with academic or industrial research teams to explore new approaches in areas like image segmentation, object detection, or 3D scene reconstruction.

- Stay informed about trending topics like self-supervised learning, generative AI (e.g., diffusion models), or 3D vision using NeRF.

Example: NeRF (Neural Radiance Fields), introduced in 2020 by researchers from UC Berkeley, Google Research, and UC San Diego, has revolutionized 3D rendering from 2D images. Participating in similar projects can set you apart as a thought leader.

Tip: Follow top conferences like CVPR, ICCV, SIGGRAPH, and NeurIPS to stay updated on the latest developments and identify research areas where you can contribute.

Publish Papers in Reputable Journals and Conferences

- Focus on publishing high-quality research that offers novel solutions or insights. Start with workshops or smaller conferences before targeting top-tier journals.

- Collaborate with peers to ensure diverse perspectives and rigorous methodologies in your research.

Example: Publishing a paper on improving image segmentation for medical imaging can highlight your ability to create solutions that directly impact lives.
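Segmentation papers, and medical imaging papers in particular, commonly report overlap metrics such as the Dice coefficient and Intersection over Union (IoU) between predicted and ground-truth masks. A minimal sketch, assuming binary masks stored as nested lists of 0/1; `dice_and_iou` is a hypothetical helper name, and the empty-mask convention is one common choice rather than a universal rule.

```python
def dice_and_iou(pred, target):
    """Dice = 2|P∩T| / (|P| + |T|) and IoU = |P∩T| / |P∪T| for binary masks."""
    flat_p = [v for row in pred for v in row]
    flat_t = [v for row in target for v in row]
    inter = sum(p * t for p, t in zip(flat_p, flat_t))
    sum_p, sum_t = sum(flat_p), sum(flat_t)
    if sum_p + sum_t == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement
    union = sum_p + sum_t - inter
    return 2 * inter / (sum_p + sum_t), inter / union


pred = [[1, 1, 0],
        [1, 1, 0],
        [0, 0, 0]]
target = [[0, 1, 1],
          [0, 1, 1],
          [0, 0, 0]]
dice, iou = dice_and_iou(pred, target)
print(dice, round(iou, 4))  # 0.5 0.3333
```

The two metrics always rank results the same way, since Dice = 2·IoU / (1 + IoU); Dice simply reads higher for partial overlaps.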

Tip: Use platforms like arXiv to share your work as preprints with the broader community and gain visibility before formal publication.

Develop and Implement New Algorithms

- Aim to design algorithms that solve specific real-world problems, such as reducing computation time for object detection or improving the accuracy of facial recognition systems.

- Benchmark your work against existing methods to demonstrate its value.

Example: YOLO (You Only Look Once) became a widely used object detection algorithm due to its speed and accuracy. A similar contribution can position you as an industry expert.

Tip: Use open-source datasets like COCO or ImageNet to train and test your models, and make your code available on platforms like GitHub to build credibility.
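Two small building blocks come up constantly when benchmarking detectors: Intersection over Union (IoU), which COCO-style evaluation uses to decide whether a predicted box matches a ground-truth box (COCO's headline mAP averages over IoU thresholds from 0.50 to 0.95), and non-maximum suppression (NMS), which YOLO-family detectors use to remove duplicate boxes. The sketch below is a plain-Python illustration with hypothetical helper names, not the code used by YOLO or by the official COCO evaluation tools.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it by more
    than iou_thresh, and repeat. Returns the indices of kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if box_iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(round(box_iou(boxes[0], boxes[1]), 3))  # 0.822
print(nms(boxes, scores))                     # [0, 2]
```

Real detectors usually run NMS per class and on the GPU, but the greedy logic is the same.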

Leverage Tools and Resources

- Use frameworks like PyTorch or TensorFlow to experiment with state-of-the-art models and techniques.

- OpenCV University courses can help you build the foundational and advanced knowledge required to excel in research.

Actionable Tip: Start small by recreating experiments from existing papers, then move on to creating your own enhancements.

Project and Product Management Goals: Leading Projects and Teams

Taking the lead on projects or managing products is a natural next step for many computer vision engineers. These roles allow you to combine your technical expertise with leadership skills, creating opportunities to drive impactful outcomes.

Lead High-Impact Projects

- Take on leadership roles in projects that integrate computer vision into real-world applications. This could range from automating quality control in manufacturing to enabling AI-powered healthcare diagnostics.

Example: Managing a project to deploy a computer vision-based quality inspection system in a factory can showcase your ability to lead end-to-end solutions.

Tip: Start with smaller projects to build confidence, then gradually expand your scope to larger, cross-functional initiatives.

Deliver Products from Concept to Market

- Gain experience in the entire lifecycle of product development:

  - Ideation: Identifying the problem and brainstorming solutions.

  - Prototyping: Building and testing proof-of-concept solutions.

  - Deployment: Scaling and optimizing solutions for real-world use.

Collaborate Across Teams

- Work with data scientists, software engineers, and business teams to align technical goals with business objectives.

- Improve your communication skills to effectively translate complex concepts into actionable tasks.

Example: Collaborating with software engineers to integrate a computer vision solution into a retail application can demonstrate your ability to connect technical and non-technical domains.

Tip: Practice explaining your projects to non-technical audiences—it’s a skill that will serve you well in leadership roles.

Learn Project Management Frameworks

- Familiarize yourself with methodologies like Agile or Scrum to manage tasks and timelines effectively.

- Consider certifications like PMP or training in methods like Kanban for added credibility.

Tip: Balancing technical responsibilities with management duties can be challenging. Start with hybrid roles, such as technical lead, before fully transitioning into management.

Leading projects or managing products not only enhances your impact but also helps you develop skills that are valuable across industries. Whether you’re building the next big AI product or managing a team of researchers, these goals can take your career to the next level.

Industry Specialization Goals: Becoming a Niche Expert

Specializing in a particular industry can set you apart as an expert in your field. Computer vision is a versatile discipline with applications across sectors like healthcare, autonomous systems, and retail. Focusing on a niche allows you to deepen your knowledge and create a strong personal brand.

Identify High-Demand Industries

- Autonomous Vehicles: Dive into technologies like 3D vision, object detection, and path planning. Companies like Tesla and Waymo are actively seeking experts in this field.

- Healthcare: Work on applications like medical image segmentation or diagnostic tools for diseases such as cancer or diabetic retinopathy.

- Robotics: Explore vision-guided robots for warehouse automation, agriculture, or advanced manufacturing.

Example: Specializing in autonomous vehicles can open opportunities to work with industry giants or startups shaping the future of mobility.

Tip: Follow industry trends to identify growing demand. For instance, the rise of generative AI in 3D modeling is creating opportunities in AR/VR and gaming.

Develop Domain-Specific Knowledge

- Learn industry-specific challenges and regulations. For example, healthcare solutions in the US must comply with regulations like HIPAA.

- Focus on tools and techniques relevant to your chosen niche. For robotics, understanding SLAM (Simultaneous Localization and Mapping) is crucial.

Example: A deep understanding of SLAM can make you indispensable in robotics or AR/VR development.

Tip: Participate in sector-focused hackathons or competitions to build hands-on experience and grow your portfolio.

Collaborate with Industry Leaders

- Attend conferences and workshops tailored to your chosen field. Build a professional network by connecting with industry experts on platforms like LinkedIn or during industry events.

Example: Networking at conferences can help you find mentors and collaborators in your specialization area.

Networking and Community Engagement Goals: Building Professional Connections

Building a strong network is vital for career growth. Engaging with the computer vision community can help you stay updated on trends, discover job opportunities, and find project collaborators.

Attend Conferences and Workshops

- Participate in renowned events like CVPR, ECCV, or NeurIPS. These gatherings provide opportunities to learn from industry leaders and showcase your work.

- Explore local meetups or hackathons to connect with peers and exchange ideas.

Example: Presenting your project at a CVPR workshop can establish your credibility and expand your professional circle.

Tip: If you can’t attend in person, look for virtual events and webinars that offer similar benefits.

Contribute to the Community

- Share your knowledge by writing blogs, creating tutorials, or contributing to open-source projects. Platforms like Medium, GitHub, and LinkedIn are excellent places to start.

- Mentor aspiring computer vision engineers or participate in community forums to give back and establish your presence.

Example: Writing a step-by-step guide on implementing YOLOv8 can help you gain recognition in the community.

Tip: Regularly contribute to open-source libraries like OpenCV to demonstrate your skills and commitment to the community.

Build Meaningful Connections

- Connect with thought leaders and peers on LinkedIn or Twitter. Engage with their work by sharing insights or asking thoughtful questions.

- Join online communities like Kaggle, Stack Overflow, or GitHub Discussions to collaborate with like-minded professionals.

Example: Participating in Kaggle competitions improves your skills and helps you connect with top-tier talent in the field.

Tip: Networking isn’t just about meeting people—it’s about maintaining relationships. Follow up after conferences or collaborations to keep the connection alive.

Grow Through Collaborative Learning

- Join study groups or enroll in courses that promote interaction with other learners. Sharing experiences can deepen your understanding of complex topics.

Tip: Actively participate in course discussions or forums to maximize your engagement and learning.

Entrepreneurial and Business Development Goals: Innovating and Leading

For entrepreneurially minded engineers, computer vision offers exciting opportunities to combine technical expertise with business skills. By starting your own venture or contributing to business development, you can shape innovative solutions and address market needs directly.

Start Your Own Tech Venture

- Identify real-world problems that computer vision can solve. Common areas include:

  - Retail: Inventory tracking or virtual try-ons.

  - Healthcare: AI-powered diagnostic tools.

  - Agriculture: Crop monitoring and pest detection.

- Develop a minimum viable product (MVP) to demonstrate your solution’s potential.

Example: Launching a startup offering computer vision solutions for retail, such as automated shelf analysis, can attract investors and clients.

Tip: Use libraries like OpenCV for prototyping and streamline development with cloud services like AWS or Google Cloud.

Secure Funding and Partnerships

- Pitch your ideas to venture capitalists or apply for grants to secure funding.

- Collaborate with established companies to accelerate development and market entry.

Example: Many successful startups, such as Scale AI, began by identifying niche challenges and partnering with industry leaders.

Tip: Prepare a solid business plan with a clear value proposition to stand out to investors.

Develop Proprietary Applications

- Build solutions that cater to specific industries. Proprietary applications can give you a competitive edge and generate revenue through licensing or subscriptions.

Example: Creating a vision-based inspection tool for manufacturing could streamline quality control and open doors to recurring business.

Tip: Stay user-focused. Build intuitive interfaces and prioritize features that address client pain points.

Combine Technical and Business Skills

- Expand your knowledge beyond technical expertise. Learn about market analysis, customer acquisition, and scaling strategies.

- Consider taking business courses or certifications to enhance your entrepreneurial skillset.

Tip: Use your technical background to solve problems that non-technical founders might overlook, giving your business a unique edge.

Achieve Your Career Goals with OpenCV University

Whether you aim to master technical skills, lead groundbreaking projects, or launch your own startup, OpenCV University has the resources to support you. Our curated courses are designed to help you at every career stage.

Free Courses

- Get started with the fundamentals of computer vision and machine learning.

- Explore foundational courses like the OpenCV Bootcamp or TensorFlow Bootcamp to build your skills without breaking the bank.

Premium Courses

- For a more in-depth learning experience, our premium ‘Computer Vision Deep Learning Master Bundle’ is tailored to help you excel in advanced topics like deep learning, computer vision applications, and AI-driven solutions.

- Learn directly from industry experts and gain practical skills that are immediately applicable in professional settings.

The post Different Types of Career Goals for Computer Vision Engineers appeared first on OpenCV.

Inky Frame 7.3″ is a 7-color ePaper display powered by a Raspberry Pi Pico 2 W

The Inky Frame 7.3″ is a Pico 2 W ePaper display featuring a 7.3-inch E Ink screen with 800 x 480 resolution and 7-color support. Other features include five LED-equipped buttons, two Qwiic/STEMMA QT connectors, a microSD card slot, and a battery connector with power-saving functionality. This Pico 2 W ePaper display is ideal for low-power applications such as home automation dashboards, sensor data visualization, and static image displays. E Ink technology ensures energy efficiency by consuming power only during screen refreshes while retaining images when unpowered. Flexible mounting options and included metal legs make it suitable for various setups. Previously, we covered the Waveshare 4-inch Spectra, a six-color ePaper display, along with other modules like the Inkycal v3, Inkplate 4, EnkPi, Inkplate 2, and more. Check them out if you’re interested in exploring these products. The Inky Frame 7.3″ specifications: Wireless module – Raspberry Pi Pico 2 W SoC [...]
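Showing a photo on a 7-color panel means mapping every pixel to the nearest color the panel can actually display. The sketch below shows the simplest version of that step, nearest-palette-color quantization without dithering, in plain Python that should also run under MicroPython. The seven palette entries and their RGB values are an assumed, idealized set, not values taken from the Inky Frame documentation, and `nearest_colour` and `quantize` are hypothetical helper names.

```python
# Assumed, idealized 7-color palette; a real panel's colors differ, and
# production tools usually calibrate against measured values.
PALETTE = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "red": (255, 0, 0),
    "yellow": (255, 255, 0),
    "orange": (255, 128, 0),
}


def nearest_colour(rgb):
    """Return the palette entry with the smallest squared RGB distance to `rgb`."""
    return min(PALETTE, key=lambda name: sum((a - b) ** 2 for a, b in zip(rgb, PALETTE[name])))


def quantize(pixels):
    """Map a list of RGB tuples to palette color names (no dithering)."""
    return [nearest_colour(p) for p in pixels]


print(quantize([(250, 240, 230), (200, 30, 20), (240, 150, 10), (20, 20, 30)]))
# ['white', 'red', 'orange', 'black']
```

Plain nearest-color mapping produces visible banding on photos; error-diffusion dithering such as Floyd–Steinberg is the usual next step.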

The post Inky Frame 7.3″ is a 7-color ePaper display powered by a Raspberry Pi Pico 2 W appeared first on CNX Software - Embedded Systems News.

MYIR Introduces Low-Cost SoM Powered by Allwinner T536 Processor

DietPi August 2024 News (Version 9.7)

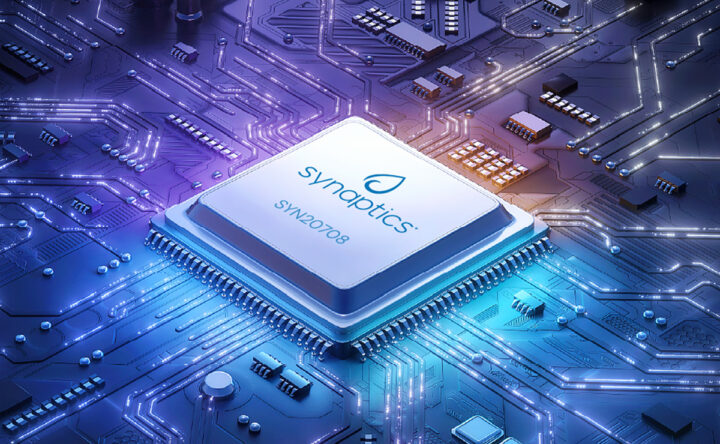

Synaptics SYN20708 low-power IoT SoC features Bluetooth, Zigbee, Thread, Matter, and advanced coexistence

Synaptics has recently introduced the SYN20708 low-power IoT SoC, designed to run Bluetooth 5.4 Classic/Low Energy and IEEE 802.15.4 radios simultaneously, with support for the Zigbee, Thread, and Matter protocols. The SoC integrates power amplifiers and low-noise amplifiers, and its two separate radios enable simultaneous multiprotocol operation. It is based on a 160 MHz Arm Cortex-M4 processor with user-accessible OTP memory for configuration. Built on a 16-nm FinFET process, it offers very low power consumption along with advanced features like high-accuracy distance measurement (HADM), angle-of-arrival (AoA), and angle-of-departure (AoD). These features, combined with versatile antenna support, make the SoC suitable for industrial, consumer, and IoT applications.

Synaptics SYN20708 specifications:

- CPU – Arm Cortex-M4 processor @ 160 MHz
- Memory/Storage – 544 KB system RAM, 1664 KB code RAM, 1640 KB ROM, 256 bytes OTP
- Connectivity – Dual-radio
  - Bluetooth 5.4 – Bluetooth Classic, Bluetooth Low Energy
  - Supports Bluetooth 6.0 features like HADM (high accuracy distance measurement)
  - Bluetooth Class 1 and Class [...]

The post Synaptics SYN20708 low-power IoT SoC features Bluetooth, Zigbee, Thread, Matter, and advanced coexistence appeared first on CNX Software - Embedded Systems News.

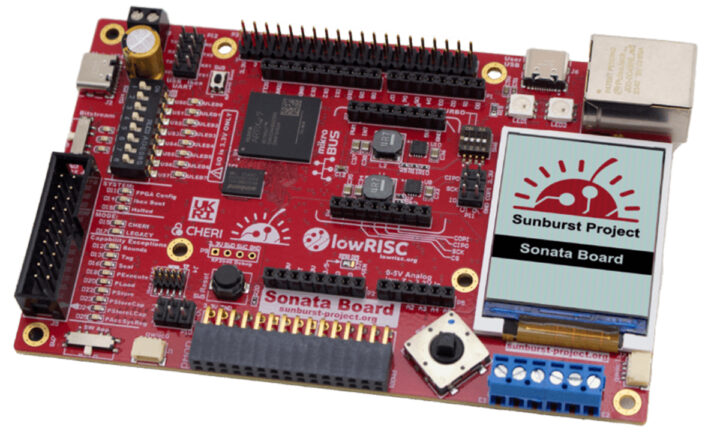

Sonata v1.0 RISC-V platform combines AMD Artix-7 FPGA and Raspberry Pi RP2040 MCU, features CHERIoT technology for secure embedded systems

lowRISC has released Sonata v1.0, a stable platform developed under the Sunburst project. Designed for embedded systems engineers, Sonata supports CHERIoT technology, enabling features like compartmentalization and enhanced memory safety. It provides a reliable foundation for building secure embedded systems. CHERIoT is a security-focused technology built on lowRISC’s RISC-V Ibex core, based on CHERI research from the University of Cambridge and SRI International. It addresses memory safety issues like buffer overflows and use-after-free errors using CHERI’s capability-based architecture. The CHERIoT capability format includes permissions for memory access, object types for compartmentalization, and bounds to restrict accessible memory regions. These features enable scalable and efficient compartmentalization, making it suitable for securely running untrusted software in embedded systems. Sonata v1.0 leverages this architecture to isolate components like network stacks and kernels within the CHERIoT RTOS. The lowRISC Sonata v1.0 specifications: FPGA – AMD Xilinx Artix-7 (XC7A35T-1CSG324C) CPU – AMD MicroBlaze soft-core based on [...]
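The capability idea described above can be illustrated with a toy software model: every pointer carries bounds and permissions, each access is checked against them, and a compartment can only hand out capabilities that are equal or narrower (monotonicity). This is a conceptual sketch only, assuming nothing about CHERIoT's real encoding; actual CHERIoT capabilities are compressed, tagged, hardware-enforced pointers, and object types and sealing are omitted here. `Capability`, `restrict`, and `CapabilityFault` are hypothetical names.

```python
from dataclasses import dataclass


class CapabilityFault(Exception):
    """Raised where CHERI hardware would trap."""


@dataclass(frozen=True)
class Capability:
    base: int          # first accessible address
    length: int        # number of accessible bytes
    perms: frozenset   # e.g. frozenset({"load", "store"})

    def check(self, addr, size, perm):
        """Validate an access of `size` bytes at `addr` needing permission `perm`."""
        if perm not in self.perms:
            raise CapabilityFault(f"missing permission: {perm}")
        if addr < self.base or addr + size > self.base + self.length:
            raise CapabilityFault(f"out of bounds access at {addr:#x}")

    def restrict(self, offset, length, perms):
        """Derive a narrower capability; bounds and permissions can only shrink."""
        new_base = self.base + offset
        if offset < 0 or length < 0 or new_base + length > self.base + self.length:
            raise CapabilityFault("cannot widen bounds")
        if not set(perms) <= self.perms:
            raise CapabilityFault("cannot add permissions")
        return Capability(new_base, length, frozenset(perms))


heap = Capability(0x1000, 0x100, frozenset({"load", "store"}))
buf = heap.restrict(0x10, 16, {"load"})  # read-only 16-byte view for another compartment
buf.check(0x1010, 4, "load")             # allowed
for args in [(0x101E, 4, "load"), (0x1010, 4, "store")]:
    try:
        buf.check(*args)
    except CapabilityFault as err:
        print(err)
# out of bounds access at 0x101e
# missing permission: store
```

The overflow at `0x101E` is exactly the kind of buffer overrun that capability bounds turn into an immediate fault instead of silent memory corruption.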

The post Sonata v1.0 RISC-V platform combines AMD Artix-7 FPGA and Raspberry Pi RP2040 MCU, features CHERIoT technology for secure embedded systems appeared first on CNX Software - Embedded Systems News.

Linux RNDIS Removal Branch Updated For Disabling Microsoft RNDIS Protocol Drivers

systemd Highlights For 2024 From Run0 To Varlink To Advancing systemd-homed

Intel Mesa Code Lands Big Patch Series For Treating Convergent Values As SIMD8

Ruby 3.4 Programming Language Brings "it", Better Performance For YJIT

Did you dream of a Raspberry Pi Christmas?

Season’s greetings! I set this up to auto-publish while I’m off sipping breakfast champagne, so don’t yell at me in the comments — I’m not really here.

I hope you’re having the best day, and if you unwrapped something made by Raspberry Pi for Christmas, I hope the following helps you navigate the first few hours with your shiny new device.

Power and peripherals

If you’ve received, say, a Raspberry Pi 5 or 500 on its own and have no idea what you need to plug it in, the product pages on raspberrypi.com often feature sensible suggestions for additional items you might need.

Scroll to the bottom of the Raspberry Pi 5 product page, for example, and you’ll find a whole ‘Accessories’ section featuring affordable things specially designed to help you get the best possible performance from your computer.

You can find all our hardware on raspberrypi.com, so have a scroll to find your particular Christmas gift.

Dedicated documentation

There are full instructions on how everything works if you know where to look. Our fancy documentation site holds the keys to all of your computing dreams.

For beginners, I recommend our ‘Getting started’ guide as your entry point.

I need a book

If, like me, you prefer to scoot through a printed book, then Raspberry Pi Press has you covered.

The Official Raspberry Pi Beginner’s Guide 5th Edition is a good idea if you’re a newbie. If you already know what you’re doing but are in need of some inspiration, then the Book of Making 2025 and The Official Raspberry Pi Handbook 2025 are packed with suggestions for Pi projects to fill the year ahead.

We’ve also published bespoke titles to help with Raspberry Pi Camera projects or to fulfil your classic games coding desires.

Your one-stop shop for all your Raspberry Pi questions

If all the suggestions above aren’t working out for you, there are approx. one bajillion experts eagerly awaiting your questions on the Raspberry Pi forums. Honestly, I’ve barely ever seen a question go unanswered. You can throw the most esoteric, convoluted problem out there and someone will have experienced the same issue and be able to help. Lots of our engineers hang out in the forums too, so you may even get an answer direct from Pi Towers.

Be social

Outside of our official forums, you’ve all cultivated an excellent microcosm of Raspberry Pi goodwill on social media. Why not throw out a question or a call for project inspiration on our official Facebook, Threads, Instagram, TikTok, or “Twitter” account? There’s every chance someone who knows what they’re talking about will give you a hand.

Also, tag us in photos of your festive Raspberry Pi gifts! I will definitely log on to see and share those.

Again, we’re not really here, it’s Christmas!

I’m off again now to catch the new Wallace and Gromit that’s dropping on Christmas Day (BIG news here in the UK), but we’ll be back in early January to hang out with you all in the blog comments and on social.

Glad tidings, joy, and efficient digestion wished on you all.

The post Did you dream of a Raspberry Pi Christmas? appeared first on Raspberry Pi.

New Intel Mesa Driver Patches Implement AV1 Decode For Vulkan Video

CachyOS Had A Really Great Year Advancing This Performance-Optimized Arch Linux Platform

The Matrix Holiday Special 2024

Hi all,

Once again we celebrate the end of another year with the traditional Matrix Holiday Special! (see also 2023, 2022, 2021, 2020, 2019, 2018, 2017, 2016 and 2015 just in case you missed them).

This year, it is an incredible relief to be able to sit down and write an update which is overwhelmingly positive - in stark contrast to the rather mixed bags of 2022 and 2023. This is not to say that things are perfect: most notably, The Matrix.org Foundation has not yet hit its funding goals, and urgently needs more organisations who depend on Matrix to join as members in order to be financially sustainable. However, in terms of Matrix’s progress towards outperforming the centralised alternatives, the growth of the ecosystem, and the success of the first ever Matrix Conference, we couldn’t be happier - and hopefully the more Matrix matures, the more folks will want to join the Foundation to help fund it.

So, precisely why are we feeling so happy right now?

🔗Matrix 2.0

Matrix 2.0 is the project to ensure that Matrix can be used to build apps which outcompete the incumbent legacy mainstream communication apps. Since announcing the project at FOSDEM 2023, we’ve been hard at work iterating on:

- Sliding Sync, providing instant sync, instant login and instant launch.

- Next Generation Auth via OIDC, to support instant login by QR code and consistent secure auth no matter the client.

- Native Multiparty VoIP via MatrixRTC, to provide consistent end-to-end-encrypted calling and conferencing within Matrix using Matrix’s encryption and security model.

- Invisible Cryptography, to ensure that encryption in Matrix is seamless and no longer confuses users with unable-to-decrypt errors, scary shields and warnings, or other avoidable UX fails.

All of these projects are big, and we’ve been taking the time to iterate and get things right rather than cut corners – the whole name of the game has been to take Matrix from 1.0 (it works) to 2.0 (it works fast and delightfully, and outperforms the others). However, in September at the Matrix Conference we got to the point of shipping working implementations of all of the Matrix 2.0 MSCs, with the expectation of using these implementations to prove the viability of the MSCs and so propose them for merging into the spec proper.

Sliding Sync ended up evolving into MSC4186: Simplified Sliding Sync, and is now natively integrated into Synapse (no more need to run a sliding sync proxy!) and deployed on matrix.org, and implemented in matrix-rust-sdk and matrix-js-sdk. MatrixRTC is MSC4143 and dependents and is also deployed on matrix.org and call.element.io. Invisible Cryptography is a mix of MSCs: MSC4161 (Crypto terminology for non-technical users), MSC4153 (Exclude non-cross-signed devices), MSC3834 (Opportunistic user key pinning (TOFU)), and is mostly now implemented in matrix-rust-sdk - and Unable To Decrypt problems have been radically reduced (see Kegan’s excellent Matrix Conference talk for details). Finally, Next Gen Auth is MSC3861 and is planned to be deployed on matrix.org via matrix-authentication-service in Feb 2025.

It’s been controversial to ship Matrix 2.0 implementations prior to the MSCs being fully finalised and merged, but given the MSCs are backwards compatible with Matrix 1.0, and there’s unquestionable benefit to the ecosystem in getting these step-changes in the hands of users ASAP, we believe the aggressive roll-out is justified. Meanwhile, now that the implementations are out and post-launch teething issues have largely been resolved, the MSCs will progress forwards.

One of the things we somehow failed to provide when announcing the implementations at the Matrix Conference was a playground for folks to experiment with Matrix 2.0 themselves. There’s now one based on Element’s stack of Synapse + matrix-authentication-service + Element Web + Element Call available at element-docker-demo in case you want to do a quick docker compose up to see what all the fuss is about! Meanwhile, matrix.org should support all the new MSCs in February – which might even coincide with the MSCs being finalised, you never know!

Rather than going through Matrix 2.0 in detail again, the best bet is to check out the launch talk from The Matrix Conference:

https://youtube.com/watch?v=ZiRYdqkzjDU

…and in terms of seeing a Matrix 2.0 client in action, the Element X launch talk shows what you can do with it!

https://youtube.com/watch?v=gHyHO3xPfQU

Honestly, it is insanely exciting to see Matrix having evolved from the “good enough for enthusiastic geeks” to the “wow, this feels better than Signal” phase that we’re entering now. Meanwhile, matrix-rust-sdk is tracking all the latest Matrix 2.0 work, so any client built on matrix-rust-sdk (Fractal, Element X, iamb, etc) can benefit from it immediately. There are also some really exciting matrix-rust-sdk improvements on the near horizon in the form of the long-awaited persistent event cache, which will accelerate all event operations enormously by avoiding needless server requests, as well as providing full offline support.

🔗The Matrix Conference

Talking of The Matrix Conference - this was by far the highlight of the year; not just due to being an excellent excuse to get Matrix 2.0 implementations launched, but because it really showed the breadth and maturity of the wider Matrix ecosystem.

One of the most interesting dynamics was that by far the busiest track was the Public Sector talk track (sponsored by Element) – standing room only, with folks queuing outside or watching the livefeed, whether this was Gematik talking about Matrix powering communications for the German healthcare industry, SwissPost showing off their nationwide Matrix deployment for Switzerland, DINUM showing off Tchap for France, NATO explaining NI²CE (their Matrix messenger), Försäkringskassan showing off Matrix for Sweden with SAFOS, Tele2 showcasing Tele2 Samarbete (Matrix based collaboration from one of Sweden’s main telcos), FITKO explaining how to do Government-to-Citizen communication with Matrix in Germany, ZenDiS using Matrix for secure communication in the German sovereign workspace openDesk project, or IBM showing off their Matrix healthcare deployments.

This felt really surprising: not only are we in an era where Matrix appears to be completely dominating secure communication and collaboration in the public sector – but it’s not just GovTech folks who are interested, it’s the wider Matrix community too.

I think it’s fair to say that when we created Matrix, we didn’t entirely anticipate this super-strong interest from government deployments – although in retrospect it makes perfect sense, given that more than anyone, nations wish to control their own infrastructure and run it securely without being operationally dependent on centralised solutions run out of other countries. A particular eye-opener recently has been seeing US Senators Ron Wyden (D) and Eric Schmitt (R) campaigning for the US Government to deploy Matrix in a way similar to France, Germany, Sweden and others. If this comes to pass, then it will surely create a whole new level of Matrix momentum!

It’s worth noting that while many Matrix vendors like Element, Nordeck, Famedly, connect2x and others have ended up mainly focusing commercially on public sector business (given that’s empirically where the money is right now) – the goal for Matrix itself continues to be mainstream uptake.

Matrix’s goal has always been to be the missing communication layer of the web for everyone, providing a worthy modern open replacement to both centralised messaging silos as well as outdated communication networks like email and the PSTN. It would be a sore failure of Matrix’s potential if it “only” ended up being successful for public sector communication! As it happens, our FOSDEM 2025 mainstage talk was just accepted, and happens to be named “The Road To Mainstream Matrix.” So watch this space to find out in February how all the Matrix 2.0 work might support mainstream Matrix uptake in the long-run, and how we plan to ensure Matrix expands beyond GovTech!

🔗The Governing Board

Another transformative aspect of 2024 was the formation of The Matrix.org Foundation Governing Board. Over to Josh with the details…

The election of our first ever Governing Board this year has gone a long way to ensuring we can truly call Matrix a public good, as something that is not only shared under an open source license and developed in the open, but also openly governed by elected representatives from across the ecosystem.

It took forming the Spec Core Team and the Foundation, both critical milestones on a journey of openness and independence, to pave the way. And with the Governing Board, we have a greater diversity of perspectives and backgrounds looking after Matrix than ever before!

The Governing Board is in the process of establishing its norms and processes and just last week published the first Governing Board report. Soon it’ll have elected committee chairs and vice chairs, and it appears to be on track to introduce our first working groups – official bodies to work together on initiatives in support of Matrix – at FOSDEM. Working groups will be another massive step forward, as they enable us to harmonize work across the ecosystem, such as on Trust & Safety and community events.

One last note on this: I want to shout out Greg Sutcliffe and Kim Brose, our first duly elected Chair and Vice Chair of the Governing Board, who have been doing great work to keep things in motion.

🔗Growing Membership

A key part of building the Governing Board has been recruiting to our membership program, which brings together organizations, communities, and individuals who are invested in Matrix. Our members illustrate the breadth of the ecosystem, and many of them are funders who help sustain our mission.

The Foundation has gone from being completely subsidized by Element in 2022, to having nearly half of its annual budget covered by its 11 funding members.

Of course, only being able to sustain half our annual budget is not nearly good enough, and it means that we live hand-to-mouth, extending our financial runway a bit at a time. It’s a nail-biter of a ride for the hardworking staff who labor under this uncertainty, but we savor every win and all the progress we’ve made.

I’d like to take this opportunity to thank our funding members, including Element; our Gold Members, Automattic and Futurewei Technologies; and our Silver Members, ERCOM, Fairkom, Famedly, Fractal Networks, Gematik, IndieHosters, Verji Tech, and XWiki.

We look forward to welcoming two new funding members in the coming weeks!

Our community-side members also play an important role, and we’re grateful for their work and participation. These include our Associate Members (Eclipse Foundation, GNOME Foundation, and KDE) and our Ecosystem Members (Cinny, Community Moderation Effort, Conduit, Draupnir, Elm SDK, FluffyChat, Fractal, Matrix Community Events, NeoChat, Nheko-Reborn, Polychat, Rory&::LibMatrix, Thunderbird, and Trixnity).

If you’d like to see Matrix continue its momentum and the Foundation to further its work in ensuring Matrix is an independently and collectively governed protocol, please join the Foundation today. We need your help!

Back to you, Matthew!

🔗Focus

In 2023, we went through the nightmarishly painful process of ruthlessly focusing the core team exclusively on stabilising and polishing the foundations of Matrix – shelving all our next-generation showcases and projects and instead focusing purely on refining and evolving today’s Matrix core use cases for chat and VoIP.

In 2024, I’m proud to say that we’ve kept that focus – and indeed improved on it (for instance, we’ve stepped back on DMA work for much of the year in order to focus instead on the Trust & Safety work which has gone into Matrix 1.11, 1.12, and 1.13). As a result, despite a smaller team, we’ve made huge progress with Matrix 2.0, and the results speak for themselves. Anecdotally, I now wince whenever I have to use another messaging system – not because of loyalty to Matrix, but because the experience is going to be worse: WhatsApp has more "Waiting for message, this may take a while" errors (aka Unable To Decrypts or UTDs) than Matrix does, takes longer to launch and sync and has no threads; iMessage’s multidevice support can literally take hours to sync up; Signal just feels clunky and my message history is fragmented all over the place. It feels so good to be in that place, at last.

Meanwhile, it seems that Element’s move to switch development of Synapse and other projects to AGPL may have been for the best – it’s helped concretely address the issue of lack of commercial support from downstream projects, and Element is now in a much better position to continue funding Synapse and other core Matrix work. It’s also reassuring to see that third-party contributions to Synapse are as active as ever, and all the post-AGPL work on Synapse such as native sliding sync shows Element’s commitment to improving Synapse. Finally, while Dendrite development is currently slow, the project is not abandoned, and critical fixes should keep coming – and if/when funding is available P2P Matrix & Dendrite work should resume as before. It wouldn’t be the first time Dendrite has come back from stasis!

🔗The Future

Beyond locking down Matrix 2.0 in the spec and getting folks using it, there are two big new projects on the horizon: MLS and State Res v3.

MLS is Messaging Layer Security (RFC 9420), the IETF standard for group end-to-end-encryption, and we’ve been experimenting with it for years now, starting around 2019, to evaluate it for use in Matrix alongside or instead of our current Double Ratchet implementation (Olm/Megolm). The complication on MLS is that it requires a logically centralised function to coordinate changes to the membership of the MLS group – whereas Matrix is of course fully decentralised; there’s never a central coordination point for a given conversation. As a result, we’ve been through several iterations of how to decentralise MLS to make it play nice with Matrix – essentially letting each server maintain its own MLS group, and then defining merge operations to merge those groups together. You can see the historical work over at https://arewemlsyet.com.

However, the resulting dialect of MLS (DMLS) has quite different properties to vanilla RFC 9420 MLS – for instance, you have to keep around some historical key data in case it’s needed to recover from a network partition, which undermines forward secrecy to some extent. Also, by design, Matrix network partitions can last forever, which means that the existing formal verification work that has been done around MLS’s encryption may not apply, and would need to be redone for DMLS.

Meanwhile, we’ve been participating in MIMI (More Instant Messaging Interoperability), an IETF working group focused on building a new messaging protocol to pair with MLS’s encryption. A hard requirement for MIMI is to utilise MLS for E2EE, and we went through quite a journey to see if Matrix could be used for MIMI, and understand how Matrix could be used with pure MLS (e.g. by having a centralised Matrix dialect like Linearized Matrix). Right now, MIMI is heading off in its own direction, but we’re keeping an eye on it and haven’t given up on converging somehow with it in future. And if nothing else, the exercise taught us a lot about marrying up Matrix and MLS!

Over the last few months there has been more and more interest in using MLS in Matrix, and at The Matrix Conference we gave an update on the latest MLS thinking, following a workshop at the conference with Franziskus from Cryspen (local MLS formal-verification experts in Berlin). Specifically, the idea is that it might be possible to come up with a dialect of Matrix which uses pure RFC 9420 MLS rather than DMLS, potentially using normal Matrix rather than Linearized Matrix… albeit with MLS group changes mediated by a single ‘hub’ server in the conversation. The good news is that Cryspen proposed a mechanism where in the event of a network partition, both sides of the partition could elect a new hub and then merge the groups back together if the partition healed (handling history-sharing as an out-of-band problem, similar to the problem of sharing E2EE history when you join a new room in Matrix today). This would then significantly reduce the disadvantages of rooms having to have a centralised hub, given that if the hub broke you could seamlessly continue the conversation on a new one.

So, we’ve now had a chance to sketch this out as MSC4244 - RFC 9420 MLS for Matrix, with two dependent MSCs (MSC4245 - Immutable encryption, and MSC4246 - Sending to-device messages as/to a server) and it’s looking rather exciting. This is essentially the protocol that Travis & I would have proposed to MIMI had the WG not dismissed decentralisation and dismissed Matrix interop - showing how it’s possible to use MLS for cryptographic group membership of the devices in a conversation, while still using Matrix for the user membership and access control around the room (complete with decentralisation). Best of all, it should also provide a solution to the longstanding problem of “Homeserver Control of Room Membership” highlighted by Albrecht & co from RHUL in 2022, by using MLS to prove that room membership changes are initiated by clients rather than malicious servers.

Now, we’re deliberately releasing this as a fairly early draft from the Spec Core Team in order to try to ensure that MLS spec work is done in the open, and to give everyone interested the opportunity to collaborate openly and avoid fragmentation. In the end, the SCT has to sign off on MSCs which are merged into Matrix, and we are responsible for ensuring Matrix has a coherent and secure architecture at the protocol layer – and for something as critical as encryption, the SCT’s role in coordinating the work is doubly important. So: if you’re interested in this space, we’d explicitly welcome collaboration and feedback on these MSCs in order to get the best possible outcome for Matrix – working together in the open, as per the Foundation’s values of ‘collaboration rather than competition’, and ‘transparency rather than stealth’.

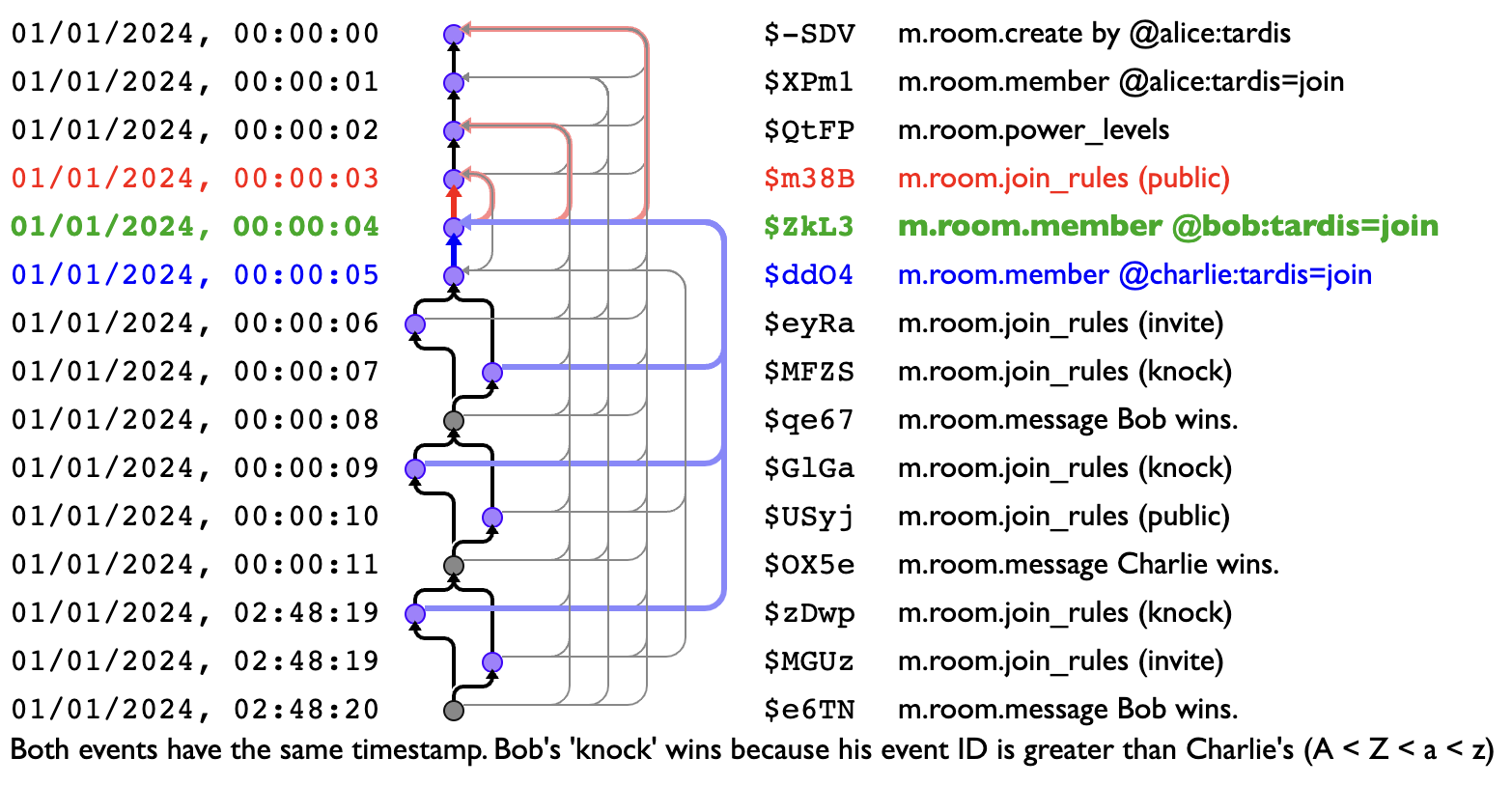

Meanwhile, the other big new project on the horizon is State Resolution v3. Old-timers may remember that when we launched Matrix 1.0, one of the big changes was the arrival of State Resolution v2 (MSC1442), which fixed various nasty issues in the original merge conflict resolution algorithm Matrix uses to keep servers in sync with each other. Now, State Res v2 has subsequently served us relatively well (especially relative to State Res v1), but there have still been a few situations where rooms have state reset unexpectedly – and we’re currently in the process of chasing them down and proposing some refinements to the algorithm. There’s nothing to see yet, although part of the work here has been to dust off TARDIS, our trusty Time Agnostic Room DAG Inspection Service, to help visualise different scenarios and compare different resolution algorithms. So watch this space for some very pretty explanations once v3 lands!

🔗Happy New Year!

Matrix feels like it entered a whole new era in 2024 – with the Foundation properly spreading its wings, hosting The Matrix Conference, operationalising the Governing Board, and Matrix uptake exploding across the public sectors of 20+ countries. Funding continues to be an existential risk, but as Matrix continues to accelerate we’re hopeful that more organisations who depend on Matrix will lean in to support the Foundation and ensure Matrix continues to prosper.

Meanwhile, 2025 is shaping up to be really exciting. It feels like we’ve come out of the darkness of the last few years with a 2.0 which is better than we could have possibly hoped, and I can’t wait to see where it goes from here!

Thanks to everyone for supporting the project - especially if you are a member of the Foundation (and if not, please join here!). We hope you have a fantastic end of the year; see you on the other side, and thanks for flying Matrix :)