Cardiography signal measuring device built on Raspberry Pi Pico W

Having looked into how blood pressure monitors operate, Miloš Rašić has been hard at work trying to improve their accuracy. David Crookes conducted this interview for the special 150th anniversary issue of our official magazine.

Keeping track of blood pressure is crucial for maintaining good health, especially when managing heart-related conditions. Electrical engineer Miloš Rašić knows this only too well. “Like most older people, my grandma suffers from elevated blood pressure, so a digital pressure monitor is something that is being used daily in the household,” he says. But he also noticed the machines can be flawed.

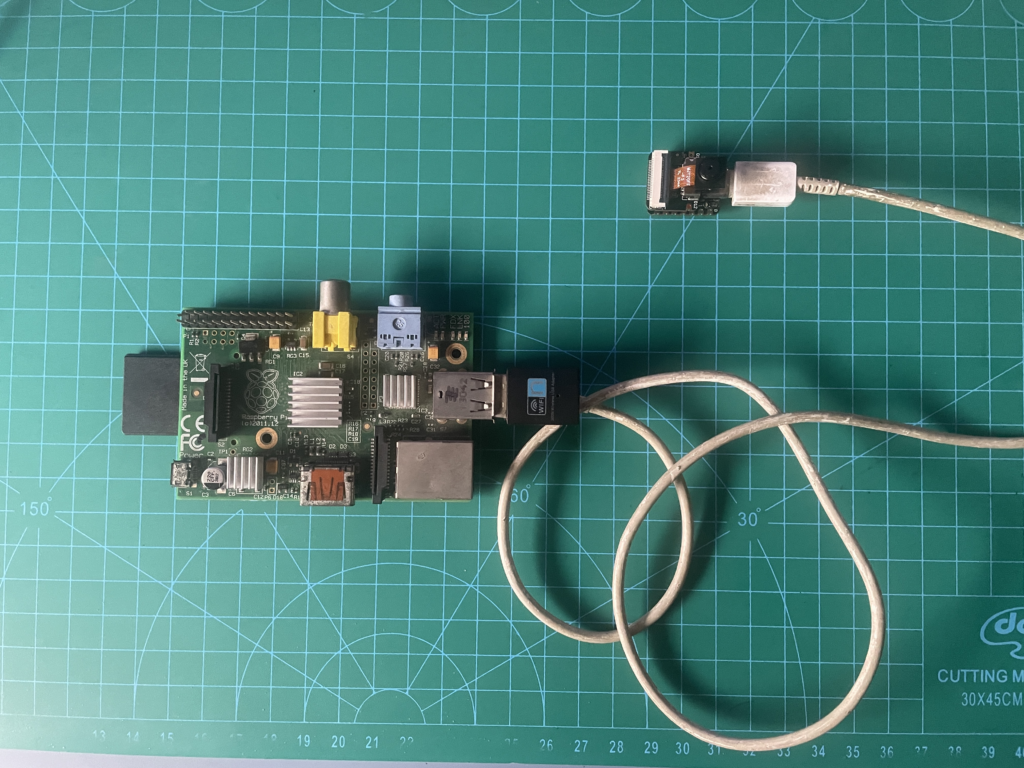

“Different monitors have provided widely different measurements and their performance was highly dependent on their battery level, which is not a good thing,” he explains. “So for my master’s thesis project, I wanted to explore digital blood pressure monitors and discover how they work.” This led him to develop a cardiography signal measuring device based around a Raspberry Pi Pico W.

Conducting experiments

When Miloš approached his project, he had a list of requirements in mind, chief among them safety. “The device had to have optical isolation when connected to a PC and be battery-powered or have an isolated power supply,” he says.

As a priority, it needed to measure blood pressure. “This included measuring the air pressure inside an arm cuff, controlling a small air pump, and controlling an electromagnetic valve,” he adds. Miloš also wanted the device to use a well-supported microcontroller unit with wireless capabilities, hence the use of a Raspberry Pi Pico W. “It provided everything I needed in a small package and was supported by a large community, which meant everything would be easy to troubleshoot,” he says.
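Measuring cuff pressure while driving a pump and a valve comes down to a small control loop. The project's C++ firmware isn't described in detail here, so the following is only a Python sketch of one possible approach: run the pump with the valve closed until a target pressure is reached, then open the valve whenever the cuff is above a linearly falling target so that it deflates at a roughly constant rate, and dump the remaining air at the end or if a safety limit is exceeded. The `CuffController` class, its field names, and every threshold are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    INFLATE = auto()
    DEFLATE = auto()
    DUMP = auto()


@dataclass
class CuffController:
    """Hypothetical pump/valve controller; every threshold is illustrative."""
    target_mmhg: float = 180.0      # inflate until the cuff reaches this pressure
    stop_mmhg: float = 40.0         # below this, release all remaining air
    deflate_rate: float = 3.0       # desired deflation rate in mmHg per second
    safety_max_mmhg: float = 300.0  # hard limit: dump immediately at or above this
    phase: Phase = Phase.INFLATE
    deflate_start_s: float = 0.0

    def step(self, t_s: float, pressure_mmhg: float) -> tuple[bool, bool]:
        """Return (pump_on, valve_open) for the current time and cuff pressure."""
        if pressure_mmhg >= self.safety_max_mmhg:
            self.phase = Phase.DUMP
        if self.phase is Phase.INFLATE:
            if pressure_mmhg < self.target_mmhg:
                return True, False          # pump on, valve closed
            self.phase = Phase.DEFLATE      # target reached: start deflating
            self.deflate_start_s = t_s
        if self.phase is Phase.DEFLATE:
            if pressure_mmhg > self.stop_mmhg:
                elapsed = t_s - self.deflate_start_s
                ramp = self.target_mmhg - self.deflate_rate * elapsed
                # Bang-bang control: open the valve only while above the ramp
                return False, pressure_mmhg > ramp
            self.phase = Phase.DUMP
        return False, True                  # DUMP: pump off, valve open
```

Called once per pressure sample, `step()` returns the pump and valve states to apply. With a simple on/off valve this produces a small sawtooth around the ramp rather than a perfectly smooth deflation.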

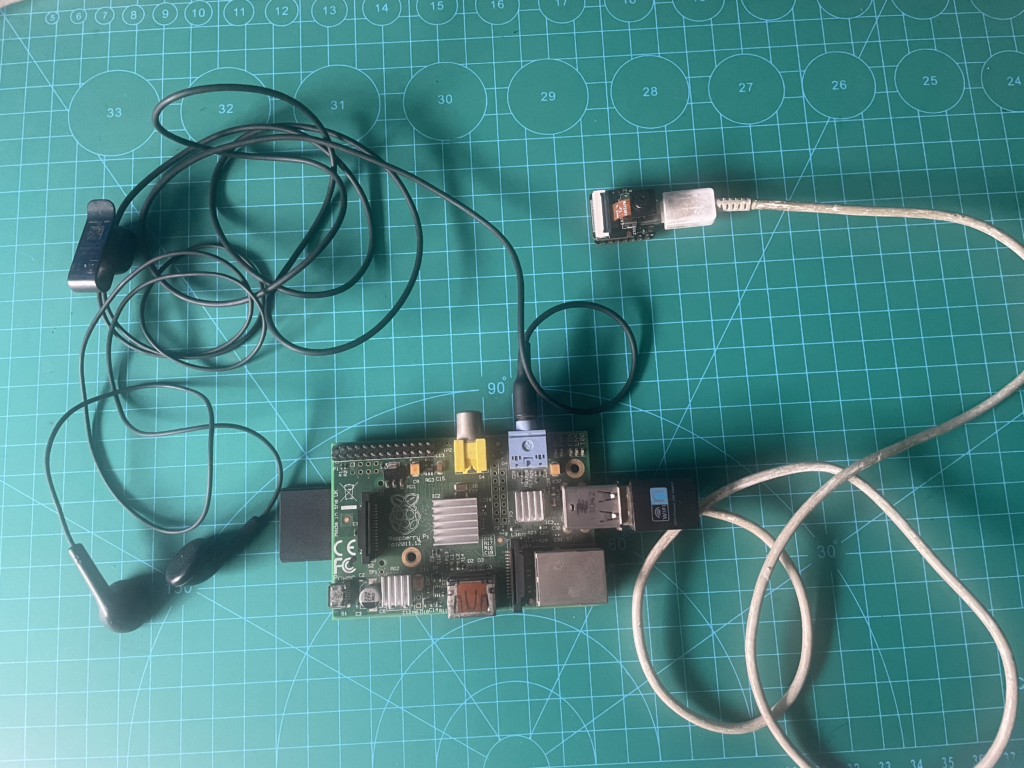

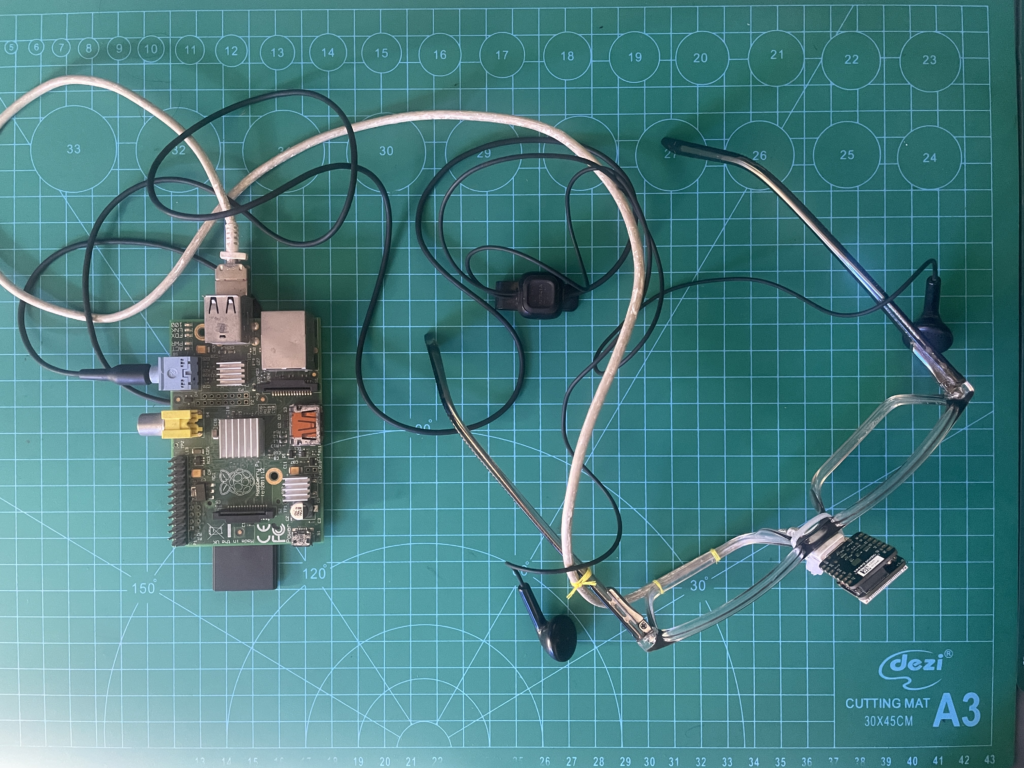

Along the way, Miloš began to add more features, including a stethoscope and the ability to take an ECG measurement. He also added a photoplethysmography (PPG) clamp, which detects blood volume changes in the microvascular bed of tissue, reasoning that these sensors combined would give better insight into a person’s heart health.

And yet he was clear from the start that he wasn’t going to create a medical device. Instead, the ultimate aim was to take readings and conduct experiments to discover an optimal algorithm for measuring blood pressure. “The whole area of blood pressure monitors was a curiosity for me and I wanted to demystify it a bit and generally have a platform which other people can experiment with,” he explains. “So I created a setup that can be used for experimenting with new methods of analysing cardiography signals.”

Heart of the build

To fulfil his ambition, he got to work designing the PCB before looking at the other necessary components, such as the pump, valve, battery, and connectors. Some parts were simple enough — for example, the air pressure cuff, which you’ve likely seen on a visit to a GP or hospital. “This is the only sensor most commercial devices use, and the estimations using it are good enough for most cases,” Miloš says. But others required more work.
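Cuff-only monitors commonly use the oscillometric method: as the cuff deflates, the small pressure oscillations caused by each heartbeat grow and then shrink, and the cuff pressure at the largest oscillation approximates mean arterial pressure (MAP). A widely described variant, the fixed-ratio (maximum amplitude) algorithm, reads systolic and diastolic pressure from the points where the oscillation amplitude crosses fixed fractions of that maximum, above and below MAP respectively. This sketch assumes per-beat amplitudes have already been extracted from the cuff signal. The function name is hypothetical and the 0.5 and 0.8 ratios are illustrative, since published values vary from device to device.

```python
def fixed_ratio_estimate(cuff_mmhg, amplitude, ratio_sys=0.5, ratio_dia=0.8):
    """Estimate (systolic, MAP, diastolic) in mmHg from per-beat oscillation amplitudes.

    cuff_mmhg and amplitude hold one value per beat, recorded during a slow
    deflation, so cuff_mmhg is decreasing. The ratios are illustrative assumptions.
    """
    i_max = max(range(len(amplitude)), key=amplitude.__getitem__)
    a_max = amplitude[i_max]
    map_mmhg = cuff_mmhg[i_max]

    def crossing(indices, threshold):
        # Walk away from the peak; linearly interpolate the cuff pressure
        # where the amplitude first drops below the threshold.
        prev = i_max
        for i in indices:
            if amplitude[i] < threshold:
                frac = (amplitude[prev] - threshold) / (amplitude[prev] - amplitude[i])
                return cuff_mmhg[prev] + frac * (cuff_mmhg[i] - cuff_mmhg[prev])
            prev = i
        return float("nan")  # threshold never crossed on this side

    systolic = crossing(range(i_max - 1, -1, -1), ratio_sys * a_max)  # higher pressures
    diastolic = crossing(range(i_max + 1, len(amplitude)), ratio_dia * a_max)  # lower
    return systolic, map_mmhg, diastolic
```

The ratios are exactly the kind of parameter a platform like this one lets you test against reference measurements instead of taking on trust.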

The ECG sensor to record heart activity was an important part of the build. “I wanted to extract the pulses from the air pressure signal and for the ECG to be my reference measurement so that I knew the algorithm was working properly,” he says. For this, Miloš included a custom layout of the AD8232 IC on the PCB to take the ECG measurements. The AD8232 is an integrated signal conditioning block for ECG measurement applications.

Miloš also made a PPG clamp using a MikroE Oxi5 Click board that communicated with the rest of the system over I2C. “The PPG clamp is often used to measure blood oxygen saturation, but since it works by detecting the changes in blood flow in the finger, it’s a very useful sensor when it’s used in combination with the arm cuff,” Miloš says. “Since the arm cuff cuts off circulation in the arm, and then slowly lowers the air pressure inside until the circulation is established again, by using the PPG we can have a precise detection of when the laminar flow has been established again, which is the moment that the air pressure inside the arm cuff is equal to the diastolic air pressure.”
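One way to turn the PPG idea into a number, assuming per-beat PPG pulse amplitudes aligned with the cuff pressure and a baseline amplitude measured before inflation (both hypothetical inputs), is to report the cuff pressure at the first beat where the finger pulse has recovered to a set fraction of its baseline. Following Miloš' description, that moment would be read as diastolic pressure. The function name and the 0.9 fraction are illustrative assumptions, not values from the project.

```python
def pressure_at_ppg_recovery(cuff_mmhg, ppg_amplitude, baseline_amplitude, fraction=0.9):
    """Return the cuff pressure (mmHg) at the first beat whose PPG amplitude
    reaches `fraction` of the pre-inflation baseline, or None if it never does.

    Both sequences hold one value per beat, recorded during deflation.
    """
    threshold = fraction * baseline_amplitude
    for pressure, amplitude in zip(cuff_mmhg, ppg_amplitude):
        if amplitude >= threshold:
            return pressure
    return None
```

The resolution of this estimate is limited by beat spacing: at a deflation rate of a few mmHg per second, consecutive beats are a few mmHg apart.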

Finally, an old analogue stethoscope was added. Miloš combined this with a small piezo microphone, turning the stethoscope into an electronic device. “A stethoscope is used when doing manual blood pressure measurements, and since [this] is still the gold standard for non-invasive methods, I wanted to see how the signal on the stethoscope looks during this process and if I could draw any conclusions from it,” Miloš reveals.
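In the manual (auscultatory) method, the cuff pressure at which the Korotkoff sounds first appear is read as systolic pressure, and the pressure at which they disappear as diastolic. This sketch assumes the stethoscope audio and cuff pressure are sampled together at the same rate and marks a frame as containing sound when its RMS exceeds a fixed threshold. The function name and threshold are hypothetical; real recordings would need band-pass filtering and a more robust detector.

```python
import math


def auscultatory_estimate(audio, cuff_mmhg, frame_len, threshold_rms):
    """Return (systolic, diastolic) as the cuff pressure at the start of the first
    and last frames containing sound, or None if no frame exceeds the threshold.

    audio and cuff_mmhg must be sampled together (same length and rate).
    """
    sound_frames = []
    for start in range(0, len(audio) - frame_len + 1, frame_len):
        frame = audio[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        if rms >= threshold_rms:
            sound_frames.append(start)
    if not sound_frames:
        return None
    return cuff_mmhg[sound_frames[0]], cuff_mmhg[sound_frames[-1]]
```

Comparing this estimate with the oscillometric and PPG-based ones on the same recording is the kind of experiment the multi-sensor setup makes possible.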

Pressure’s on

To make sense of the data, Miloš decided the project would need a graphical interface. “This would have a live view of all of the measured signals and the capability of recording all of the data into a CSV file,” he says. This required a hefty dose of programming. The GUI was written in Python and handles the live display, communication with the device, and data logging; Python was also used to analyse the recorded signals. The firmware, meanwhile, was written in C++ “so that it runs as fast as possible on the Pico,” Miloš explains.

With everything working, Miloš designed a case. “I needed to see the rough space required for everything, which allowed me to design a case with mounting points for each of those things,” he says. “On the top, there is a lid that has NeoPixel LEDs and a small OLED display that can be programmed to show information to the user.”

Since then, he’s been using the project to conduct many tests, and you can see the results of those on Miloš’ GitHub page. The project has also been made open source because he hopes it will help others with their own projects. “It can give them a head start so they don’t have to develop their electronics from scratch if all they want to do is, for example, signal analysis,” he says. “This is why I’ve also included some data that I’ve recorded with this device if anyone wants to use just that without ever having any contact points with the hardware!”

Of course, home-made tools like this shouldn’t be used to diagnose medical problems, and Miloš is clear that his build is an experimentation platform, not a medical device.

The post Cardiography signal measuring device built on Raspberry Pi Pico W appeared first on Raspberry Pi.